Free Microsoft AI-900 Practice Test Questions MCQs

Stop wondering if you're ready. Our Microsoft AI-900 practice test is designed to identify your exact knowledge gaps. Validate your skills with Microsoft Azure AI Fundamentals questions that mirror the real exam's format and difficulty. Build a personalized study plan based on your performance on these free AI-900 MCQs, focusing your effort where it matters most.

Targeted practice like this helps candidates feel significantly more prepared for Microsoft Azure AI Fundamentals exam day.


Updated On: 3-Mar-2026 | 322 Questions

Microsoft Azure AI Fundamentals


Topic 5: Describe features of conversational AI workloads on Azure

Capturing text from images is an example of which type of AI capability?

A. text analysis

B. optical character recognition (OCR)

C. image description

D. object detection

Explanation:

This question asks about an AI capability that extracts text from images. Optical character recognition (OCR) is specifically designed to detect and convert text in images, scanned documents, or other visual media into machine-readable text, making it the correct choice.

Correct Option:

B. Optical character recognition (OCR):

OCR is the technology used to recognize and extract text characters from images, scanned documents, or handwritten sources, converting them into editable and searchable data.

Incorrect Options:

A. Text analysis:

This involves processing and analyzing text data to extract insights, sentiment, or structure but does not involve capturing text from images.

C. Image description:

This uses computer vision to generate descriptive captions for images but does not specifically extract textual content from within them.

D. Object detection:

This identifies and locates objects (e.g., cars, people) within images, but it does not recognize or extract text characters.

Reference:

Microsoft Learn: "What is optical character recognition?" describes OCR as the capability to extract text from images and documents.
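To make the OCR output concrete, here is a minimal Python sketch that flattens an OCR result into plain lines of text. It assumes a JSON shape modelled on the `readResult` section returned by Azure AI Vision Image Analysis 4.0 (blocks containing lines). The sample values are made up, and real responses also carry bounding polygons and word-level detail, so check the current API reference before relying on these keys.

```python
# Sketch only: flatten an OCR result into plain text lines.
# ASSUMPTION: the JSON shape mimics the "readResult" section of an Azure AI
# Vision Image Analysis 4.0 response; the sample values are invented.
import json

SAMPLE_RESPONSE = json.loads("""
{
  "readResult": {
    "blocks": [
      {"lines": [
        {"text": "Invoice #1042"},
        {"text": "Total: $250.00"}
      ]}
    ]
  }
}
""")


def extract_lines(response: dict) -> list[str]:
    """Return every recognized line of text in the order the result lists it."""
    lines = []
    for block in response.get("readResult", {}).get("blocks", []):
        for line in block.get("lines", []):
            lines.append(line["text"])
    return lines


for text in extract_lines(SAMPLE_RESPONSE):
    print(text)
```

The output is ordinary, searchable text, which is exactly what distinguishes OCR from image description or object detection.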

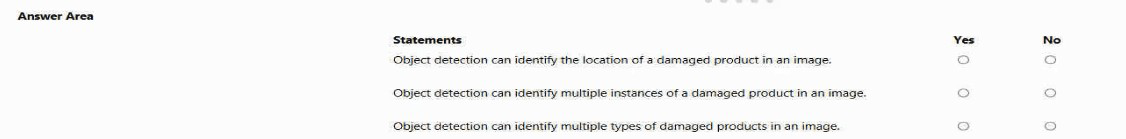

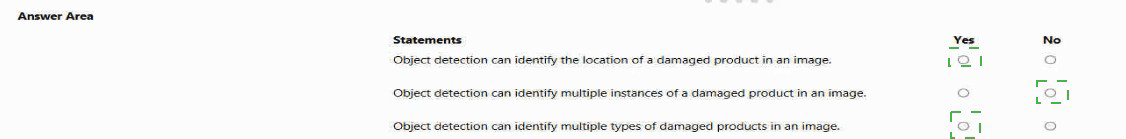

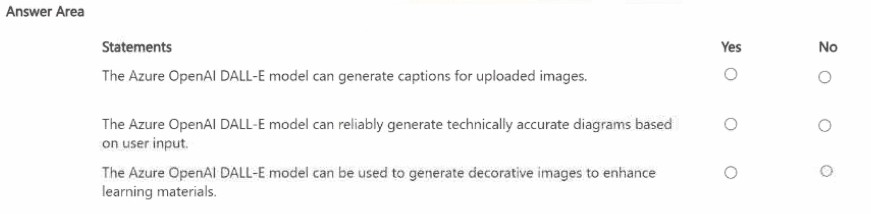

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Explanation:

The question tests understanding of object detection capabilities in computer vision. Object detection identifies objects within an image and returns each object's location as a bounding box; it can handle multiple objects of the same class or of different classes in a single image.

Correct Option:

Row 1 – Yes:

Object detection locates specific objects within an image, so it can find the location of a damaged product.

Row 2 – Yes:

It can detect multiple instances of the same object type in a single image.

Row 3 – Yes:

It can identify and locate different types of objects in one image, such as multiple categories of damaged products.

Incorrect Option:

“No” for any row would be incorrect, because all described capabilities—locating an object, detecting multiple instances, and detecting multiple types—are core functions of object detection.

Reference:

Microsoft Learn AI-900 training materials on computer vision state that object detection both classifies objects and provides bounding box coordinates for each, supporting multiple objects and multiple classes in a single image.
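The three capabilities tested here (locating an object, finding several instances of one class, and finding several classes) all come from the same kind of output: a list of labelled, scored bounding boxes. The Python sketch below uses a hypothetical `Detection` record and made-up values rather than any specific Azure SDK type.

```python
# Sketch: summarize object-detection output.
# ASSUMPTION: Detection is a hypothetical record type; real services return
# comparable data (a class label, a confidence score, and a bounding box).
from collections import Counter
from dataclasses import dataclass


@dataclass
class Detection:
    label: str                       # predicted class, e.g. "dented box"
    confidence: float                # score between 0.0 and 1.0
    box: tuple[int, int, int, int]   # (x, y, width, height) in pixels


def summarize(detections: list[Detection], min_confidence: float = 0.5):
    """Keep confident detections, count instances per class, and group their locations."""
    kept = [d for d in detections if d.confidence >= min_confidence]
    counts = Counter(d.label for d in kept)
    locations: dict[str, list[tuple[int, int, int, int]]] = {}
    for d in kept:
        locations.setdefault(d.label, []).append(d.box)
    return counts, locations


detections = [
    Detection("dented box", 0.91, (10, 20, 50, 40)),
    Detection("dented box", 0.84, (120, 25, 48, 42)),   # second instance, same class
    Detection("torn label", 0.77, (200, 60, 30, 15)),   # different class, same image
    Detection("torn label", 0.32, (5, 5, 10, 10)),      # below threshold, dropped
]
counts, locations = summarize(detections)
print(counts)
print(locations["dented box"])
```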

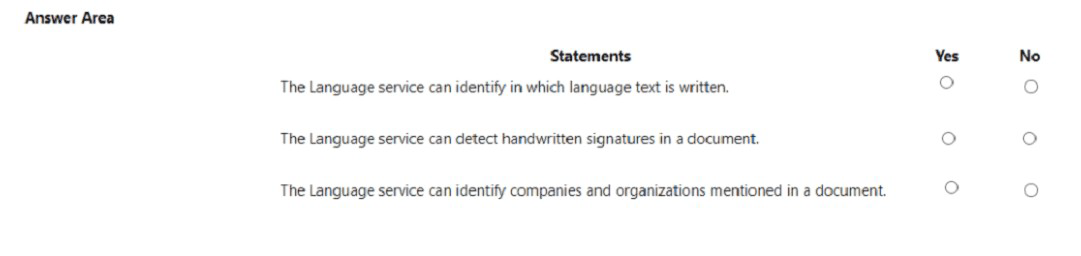

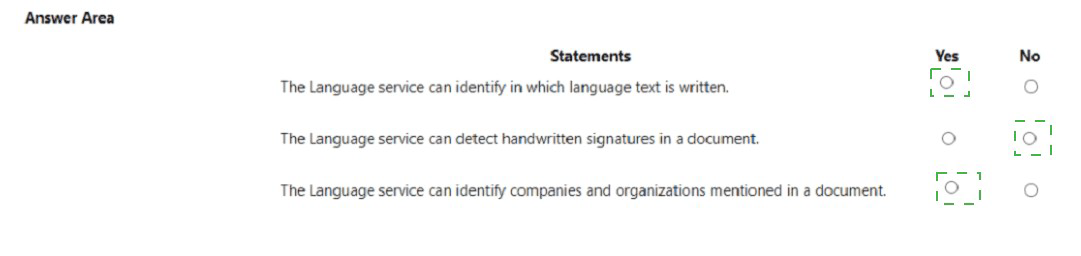

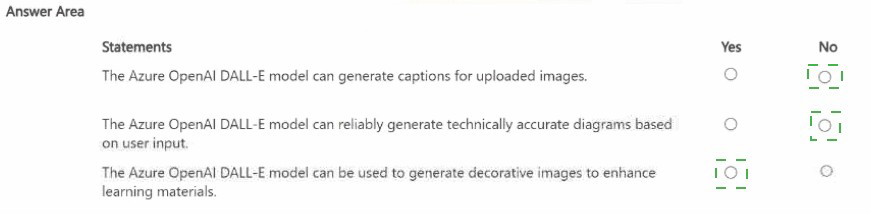

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Explanation:

This question assesses knowledge of Azure AI Language service capabilities. The Language service supports text analytics features such as language detection and entity recognition (including organizations), but it works only on text and cannot analyze images, so it cannot detect handwritten content.

Correct Option:

Row 1 – Yes:

Language detection is a core feature; it identifies the language of the provided text.

Row 2 – No:

Detecting handwritten signatures is a computer vision task, not a capability of the Language service, which works only with digital text.

Row 3 – Yes:

Named entity recognition can identify companies, organizations, people, locations, etc., in a document.

Incorrect Option:

Row 1 – No:

Incorrect; language detection is a primary feature of the service.

Row 2 – Yes:

Incorrect; detecting handwriting requires a vision-based service such as Azure AI Vision OCR or Azure AI Document Intelligence (formerly Form Recognizer), not the Language service.

Row 3 – No:

Incorrect; identifying organizations is part of entity recognition in the Language service.

Reference:

Microsoft Learn documentation for Azure AI Language lists language detection and named entity recognition (including organization detection) as key features, while handwriting processing belongs to Azure AI Document Intelligence (formerly Form Recognizer) or Azure AI Vision.
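A minimal Python sketch of reading the two Language service results discussed above. The dictionaries are assumed to mimic the JSON of the Azure AI Language `analyze-text` REST endpoint (`detectedLanguage` for language detection, `entities` with a `category` for named entity recognition); verify field names against the current reference. The sample values are invented. Note that the input is always text, so there is no image in which to look for a signature.

```python
# Sketch: read Language service results for language detection and NER.
# ASSUMPTION: these dictionaries mimic the Azure AI Language "analyze-text"
# REST response; field names should be checked against the current API
# reference, and the sample values are made up.

LANGUAGE_RESULT = {
    "kind": "LanguageDetectionResults",
    "results": {"documents": [
        {"id": "1", "detectedLanguage": {"name": "French", "iso6391Name": "fr",
                                         "confidenceScore": 0.99}},
    ]},
}

ENTITY_RESULT = {
    "kind": "EntityRecognitionResults",
    "results": {"documents": [
        {"id": "1", "entities": [
            {"text": "Contoso", "category": "Organization", "confidenceScore": 0.97},
            {"text": "Paris", "category": "Location", "confidenceScore": 0.95},
        ]},
    ]},
}


def detected_language(result: dict) -> str:
    """Return the ISO 639-1 code of the first document's detected language."""
    doc = result["results"]["documents"][0]
    return doc["detectedLanguage"]["iso6391Name"]


def organizations(result: dict) -> list[str]:
    """Collect entity texts whose category is Organization, across all documents."""
    return [
        entity["text"]
        for doc in result["results"]["documents"]
        for entity in doc["entities"]
        if entity["category"] == "Organization"
    ]


print(detected_language(LANGUAGE_RESULT))
print(organizations(ENTITY_RESULT))
```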

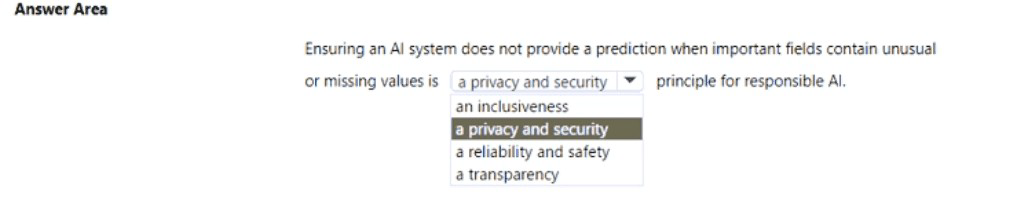

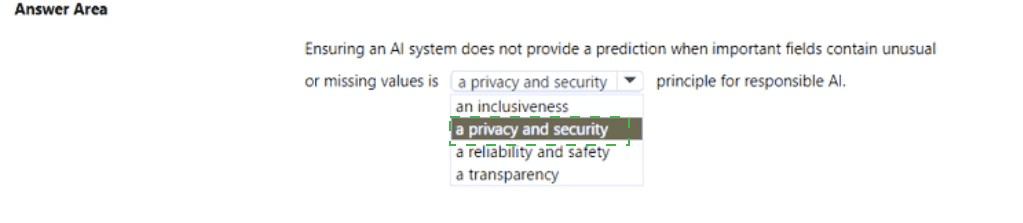

Select the answer that correctly completes the sentence.

Explanation:

This question is about responsible AI principles. Preventing predictions when data is incomplete or anomalous relates to ensuring the system performs safely and reliably, avoiding harmful or erroneous outputs due to poor-quality input.

Correct Option:

Reliability and safety:

This principle focuses on operating AI systems consistently under all conditions, including handling missing or unusual data gracefully to prevent unsafe or unreliable predictions.

Incorrect Option:

Inclusiveness:

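Aims to ensure that AI systems empower and engage everyone, including people with disabilities; it is not directly about handling data quality for predictions. (Addressing bias falls under the fairness principle.)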

Privacy and security:

Protects data and systems from unauthorized access, not specifically about validating input data before making predictions.

Transparency:

Involves making AI systems understandable to users, not directly about withholding predictions due to data issues.

Reference:

Microsoft's Responsible AI principles define reliability and safety as ensuring AI systems behave as expected, even with edge cases or missing data, to avoid harm.
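One way to picture the reliability and safety principle in code is a guard that refuses to predict when input is missing or outside the range the model was trained on. This Python sketch is an illustration under stated assumptions: `EXPECTED_RANGES` and `model` are hypothetical placeholders, not part of any Azure API.

```python
# Sketch: withhold a prediction when the input is incomplete or anomalous.
# ASSUMPTION: EXPECTED_RANGES and model() are hypothetical placeholders; a real
# system would derive valid ranges from its training data.
import math

EXPECTED_RANGES = {            # feature -> (min, max) seen during training
    "age": (18, 100),
    "income": (0, 500_000),
}


def model(features: dict) -> float:
    """Hypothetical stand-in for a trained model."""
    return 0.3 + 0.001 * features["age"]


def safe_predict(features: dict):
    """Return (prediction, None), or (None, reason) when the input is not trustworthy."""
    for name, (low, high) in EXPECTED_RANGES.items():
        value = features.get(name)
        if value is None or (isinstance(value, float) and math.isnan(value)):
            return None, f"missing value for {name!r}"
        if not low <= value <= high:
            return None, f"{name!r}={value} outside expected range [{low}, {high}]"
    return model(features), None


print(safe_predict({"age": 40, "income": 52_000}))
print(safe_predict({"age": 40}))                      # income missing, so withheld
print(safe_predict({"age": 250, "income": 52_000}))   # anomalous age, so withheld
```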

Which Azure Machine Learning capability should you use to quickly build and deploy a predictive model without extensive coding?

A. ML pipelines

B. Copilot

C. DALL-E

D. automated machine learning (automated ML)

Explanation:

This question asks about an Azure Machine Learning tool for creating and deploying predictive models with minimal coding effort. Automated ML automates the model selection, training, and tuning process, enabling rapid development without deep programming expertise.

Correct Option:

D. Automated machine learning (automated ML):

Automated ML automates the time‑consuming, iterative tasks of model development, such as algorithm selection, hyperparameter tuning, and feature engineering, allowing users to build high‑quality models quickly through a visual interface or minimal code.

Incorrect Option:

A. ML pipelines:

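Pipelines orchestrate repeatable ML workflow steps (data preparation, training, deployment), but they do not automatically select algorithms or tune models, and building them typically requires more design and coding effort.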

B. Copilot:

Copilot is a general-purpose AI assistant (for example, GitHub Copilot suggests code); it is not the Azure Machine Learning capability for building and deploying predictive models with minimal code.

C. DALL‑E:

This is an AI model for generating images from text, unrelated to building predictive models in Azure Machine Learning.

Reference:

Microsoft Learn: “What is automated machine learning?” states that automated ML in Azure Machine Learning enables you to build ML models with high scale, efficiency, and productivity without extensive programming knowledge.
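The core idea that automated ML implements (fit several candidate models, score each on held-out data, keep the best) can be shown with a toy Python sketch. This is not the Azure Machine Learning API; the two candidates are deliberately tiny hand-written models so the example runs with the standard library only. Real automated ML also searches hyperparameters and feature transformations at a much larger scale.

```python
# Toy illustration of automated model selection.
# ASSUMPTION: NOT the Azure Machine Learning API; the candidates are tiny
# hand-written models and the data is invented.
from statistics import mean


def fit_mean(xs, ys):
    """Baseline: always predict the training mean."""
    m = mean(ys)
    return lambda x: m


def fit_linear(xs, ys):
    """Ordinary least squares for y = a + b*x."""
    mx, my = mean(xs), mean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x


def mse(model, xs, ys):
    """Mean squared error of a model on (xs, ys)."""
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


def auto_select(train, valid, candidates):
    """Fit every candidate on train; return (name, model, score) with the lowest validation MSE."""
    results = []
    for name, fit in candidates.items():
        model = fit(*train)
        results.append((name, model, mse(model, *valid)))
    return min(results, key=lambda r: r[2])


train = ([1, 2, 3, 4, 5], [2.1, 3.9, 6.2, 8.1, 9.8])
valid = ([6, 7], [12.0, 14.1])
name, best, score = auto_select(train, valid, {"mean": fit_mean, "linear": fit_linear})
print(name, round(score, 3))
```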

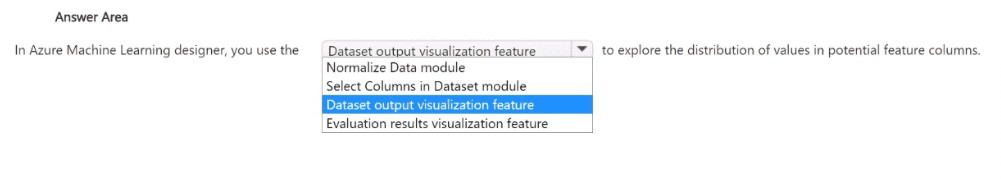

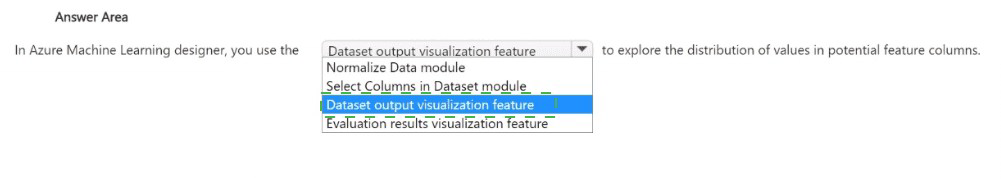

Select the answer that correctly completes the sentence.

Explanation:

The question asks which tool in Azure Machine Learning designer helps visually explore data distributions in feature columns. Dataset output visualization allows users to generate charts and statistics directly from a dataset module, aiding in exploratory data analysis.

Correct Option:

Dataset output visualization feature:

This feature provides a visual interface to explore the dataset, including histograms, scatter plots, and summary statistics, which helps analyze value distributions and identify patterns or anomalies in feature columns.

Incorrect Option:

Normalize Data module:

This is a transformation module that scales numerical data but does not provide visualization capabilities.

Select Columns in Dataset module:

This module is used to choose specific columns from the dataset, not to visualize data distributions.

Evaluation results visualization feature:

This is used to visualize model performance metrics after training, not for exploring raw dataset distributions.

Reference:

Microsoft Learn documentation for Azure Machine Learning designer notes that you can right‑click a dataset module and select Visualize to explore data distributions, statistics, and charts for each column.
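For a sense of what the visualization reports for a numeric feature column, this Python sketch computes similar summary statistics and a simple histogram. It approximates the idea only; the designer's exact statistics, bin counts, and layout may differ, and the `ages` column is made up.

```python
# Sketch: summary statistics and a histogram for one numeric feature column.
# ASSUMPTION: this approximates what a dataset visualization shows; it is not
# the Azure Machine Learning designer's implementation.
from statistics import mean, median


def describe(column, bins=4):
    """Summarize non-missing values and count them into equal-width bins."""
    values = [v for v in column if v is not None]
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1              # avoid zero width when all values are equal
    counts = [0] * bins
    for v in values:
        idx = min(int((v - lo) / width), bins - 1)   # put the maximum in the last bin
        counts[idx] += 1
    return {
        "count": len(values),
        "missing": len(column) - len(values),
        "mean": mean(values),
        "median": median(values),
        "min": lo,
        "max": hi,
        "histogram": counts,
    }


ages = [22, 25, 31, 35, 35, 41, 48, 52, 60, None]
stats = describe(ages)
for key, value in stats.items():
    print(f"{key:>9}: {value}")
print("\n".join(f"bin {i}: {'#' * c}" for i, c in enumerate(stats["histogram"])))
```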

You need to convert handwritten notes into digital text.

Which type of computer vision should you use?

A. optical character recognition (OCR)

B. object detection

C. image classification

D. facial detection

Explanation:

This question asks which computer vision capability extracts text from handwritten notes. OCR is specifically designed to recognize and convert both printed and handwritten text in images into machine-readable text.

Correct Option:

A. Optical character recognition (OCR): OCR technology detects and extracts text from images, including handwritten content, and converts it into editable and searchable digital text. Azure AI Vision's Read API and Azure AI Document Intelligence (formerly Form Recognizer) both support handwriting recognition.

Incorrect Option:

B. Object detection: Identifies and locates objects in images, but does not extract text.

C. Image classification: Categorizes an entire image into a class/label, not for transcribing text.

D. Facial detection: Detects and locates human faces in an image; it does not read handwritten text.

Reference:

Microsoft Learn: “What is optical character recognition?” explains that OCR extracts text from images and documents, including handwritten text, using services like Azure Computer Vision Read API.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Explanation:

This question assesses understanding of Azure OpenAI's DALL‑E model capabilities. DALL‑E is a generative AI model that creates original images from text prompts; it does not caption existing images or guarantee technical accuracy, but is suitable for creative/illustrative content.

Correct Option:

Row 1 – No:

DALL‑E generates images from text; it does not analyze uploaded images to produce captions (this is done by Azure AI Vision's describe image feature).

Row 2 – No:

DALL‑E is not designed for reliable technical diagrams; its outputs are artistic/creative and may not be factually or technically precise.

Row 3 – Yes:

DALL‑E excels at generating decorative, stylistic, or illustrative images based on text prompts, which can enhance learning materials visually.

Incorrect Option:

Row 1 – Yes:

Incorrect; image captioning is a computer vision task, not a generative image model's function.

Row 2 – Yes:

Incorrect; DALL‑E does not guarantee accuracy for technical schematics or diagrams.

Row 3 – No:

Incorrect; generating decorative images is a core and valid use case for DALL‑E.

Reference:

Microsoft Learn: “What is Azure OpenAI Service?” describes DALL‑E as a model for generating novel images from text descriptions, ideal for creative and illustrative purposes, not for image analysis or technically precise outputs.

You deploy the Azure OpenAI service to generate images.

You need to ensure that the service provides the highest level of protection against harmful content.

What should you do?

A. Configure the Content filters settings.

B. Customize a large language model (LLM).

C. Configure the system prompt.

D. Change the model used by the Azure OpenAI service.

Explanation:

Azure OpenAI includes built-in content filtering to detect and block harmful or inappropriate outputs. Configuring these filters is the direct method to enforce safety and protection when generating images.

Correct Option:

A. Configure the Content filters settings:

Azure OpenAI provides configurable content filters that screen both prompts and generated outputs for harmful categories (hate, sexual, violence, and self-harm). Setting each category to its strictest severity threshold, so that low, medium, and high severity content is blocked, offers the highest level of protection.

Incorrect Option:

B. Customize a large language model (LLM):

Customization fine‑tunes model behavior but does not directly enforce content filtering; safety relies on built‑in filters.

C. Configure the system prompt:

While prompts can guide model behavior, they are not a reliable safety mechanism against harmful content compared to dedicated filters.

D. Change the model used by the Azure OpenAI service:

Different models may have varying default behaviors, but content filtering is a separate, configurable safety layer applied across models.

Reference:

Microsoft Learn: “Content filtering” in Azure OpenAI documentation explains that content filters are designed to mitigate harmful outputs and can be configured via the Azure OpenAI Studio or API to set severity levels for blocked categories.
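The severity-threshold behavior behind content filters can be sketched in a few lines of Python. This assumes the documented model of four harm categories, each scored safe, low, medium, or high, with content blocked at or above a configured threshold (medium by default, low being the strictest). It is an illustration, not the service's implementation, and the sample annotations are invented.

```python
# Sketch: how a severity threshold decides what gets blocked.
# ASSUMPTION: illustrates the documented idea only; not the Azure OpenAI
# implementation, and the annotations below are made up.
SEVERITY_ORDER = ["safe", "low", "medium", "high"]
CATEGORIES = ("hate", "sexual", "violence", "self_harm")


def is_blocked(annotations: dict, thresholds: dict) -> bool:
    """Block if any category's severity is at or above that category's threshold."""
    for category, severity in annotations.items():
        threshold = thresholds.get(category, "medium")   # assumed default threshold
        if SEVERITY_ORDER.index(severity) >= SEVERITY_ORDER.index(threshold):
            return True
    return False


strictest = {c: "low" for c in CATEGORIES}
default = {c: "medium" for c in CATEGORIES}

output = {"hate": "safe", "sexual": "safe", "violence": "low", "self_harm": "safe"}
print(is_blocked(output, default))     # low-severity violence passes a medium threshold
print(is_blocked(output, strictest))   # the same output is blocked at the low threshold
```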

Which Azure AI Document Intelligence prebuilt model should you use to extract parties and jurisdictions from a legal document?

A. contract

B. layout

C. general document

D. read

Explanation:

Azure AI Document Intelligence offers prebuilt models tailored for specific document types. Legal documents often contain structured sections like parties, obligations, and jurisdictions, which require specialized extraction.

Correct Option:

A. Contract:

The prebuilt Contract model is designed to analyze legal agreements and extract key fields such as the contract title, parties, jurisdictions, and key dates (for example, execution, effective, and expiration dates).

Incorrect Option:

B. Layout:

Extracts text, tables, structure, and selection marks from documents but is not optimized for legal‑specific fields like parties and jurisdictions.

C. General document:

Extracts text, layout, and generic key-value pairs from many kinds of documents but lacks the contract-specific schema (parties, jurisdictions) of the Contract model.

D. Read:

Extracts printed and handwritten text (OCR) only; it does not return structured fields such as parties or jurisdictions.

Reference:

Microsoft Learn: “What is Azure AI Document Intelligence?” lists the prebuilt Contract model as the solution for analyzing legal agreements to extract parties, jurisdictions, key dates, and other contract-specific fields.
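Why a schema-aware prebuilt model matters here can be shown with a small Python contrast. Both results below are simplified, hypothetical stand-ins rather than the exact Document Intelligence response schema: a Read-style result gives only lines of text, while a contract-style result exposes named fields such as `Parties` and `Jurisdictions` that can be used directly.

```python
# Sketch: contrast a text-only result with a field-based result.
# ASSUMPTION: both dictionaries are simplified, hypothetical stand-ins, not the
# exact Azure AI Document Intelligence response schema; the values are invented.
READ_RESULT = {
    "lines": [
        "This Agreement is made between Contoso Ltd. and Fabrikam Inc.",
        "It shall be governed by the laws of the State of Washington.",
    ]
}

CONTRACT_RESULT = {
    "fields": {
        "Parties": ["Contoso Ltd.", "Fabrikam Inc."],
        "Jurisdictions": ["State of Washington"],
    }
}


def parties_and_jurisdictions(result: dict) -> tuple[list[str], list[str]]:
    """Read named fields if the model returned them; a text-only result yields nothing."""
    fields = result.get("fields", {})
    return fields.get("Parties", []), fields.get("Jurisdictions", [])


print(parties_and_jurisdictions(CONTRACT_RESULT))  # named fields are available directly
print(parties_and_jurisdictions(READ_RESULT))      # text only: the lines must be parsed by hand
```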


Microsoft Azure AI Fundamentals Practice Exam Questions

AI-900 Microsoft Azure AI Fundamentals: Your Smart Start into AI

What You will Learn in the AI-900 Exam

The AI-900 exam validates your understanding of core artificial intelligence concepts and how Microsoft Azure brings them to life. Topics include AI workloads, machine learning fundamentals, computer vision, natural language processing, and conversational AI. No programming background is required—only clear conceptual knowledge and practical understanding.

Proven Exam Tips to Boost Your Score

Focus on understanding real-world scenarios rather than memorizing definitions. Most questions are case-based, so think from a business perspective and choose the Azure AI service that best fits the problem. Staying calm and reading questions carefully makes a big difference.

Costly Mistakes to Avoid on Exam Day

Many candidates confuse machine learning with general AI services or overlook responsible AI principles. Another common mistake is relying only on theory without practicing questions similar to the exam format.

Smart Study Strategy That Works

Begin with Microsoft Learn resources and revise concepts consistently. Reinforce learning with practical examples and diagrams. To truly test your readiness, practice with reliable exam-style questions. MSmcqs.com AI-900 practice questions are highly effective in identifying weak areas and building exam confidence.

Practice Like the Real Exam

Attempting a full-length Microsoft Azure AI Fundamentals practice test helps you understand question patterns, difficulty level, and time management. Regular practice significantly improves accuracy and reduces exam stress.

Real Success Story from a Candidate

I had no prior AI background, but the structured study approach and full-length practice tests helped me pass AI-900 on my first attempt. The questions on msmcqs.com were very close to the real exam and boosted my confidence immensely.

— Daniel Roberts, IT Support Specialist