Topic 5: Describe features of conversational AI workloads on Azure

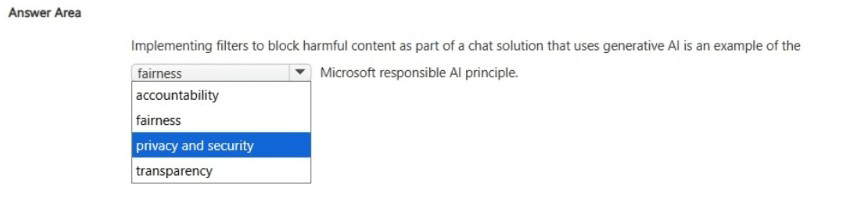

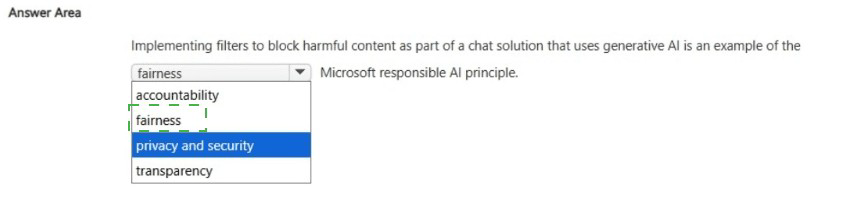

Select the answer that correctly completes the sentence.

Explanation:

This question asks which responsible AI principle aligns with blocking harmful content in generative AI chats. Filtering harmful content is a safety measure that ensures AI systems operate reliably and cause no harm—core aspects of reliability and safety.

Correct Option:

Reliability and safety:

This principle focuses on ensuring AI systems perform safely, reliably, and consistently, including mitigating risks like harmful or inappropriate outputs through protective measures such as content filters.

Incorrect Options:

Fairness:

Addresses bias and ensures equitable treatment across different user groups, not directly about content safety.

Accountability:

Involves human oversight and clear responsibility for AI systems, but not specifically implementing content filters.

Privacy and security:

Protects data and prevents unauthorized access, not primarily about filtering harmful generated content.

Transparency:

Focuses on making AI decisions understandable to users, not on content moderation.

Reference:

Microsoft's Responsible AI principles describe reliability and safety as ensuring that AI systems operate as originally designed, respond safely to unanticipated conditions, and resist harmful manipulation. Content filters that block harmful output are one way to apply this principle.

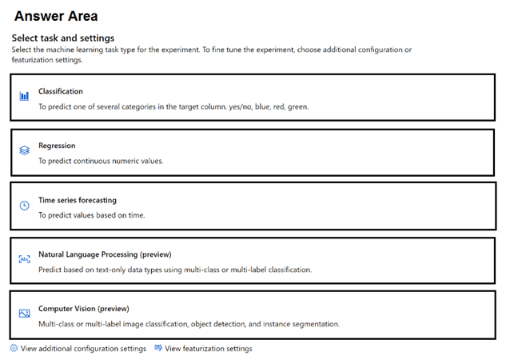

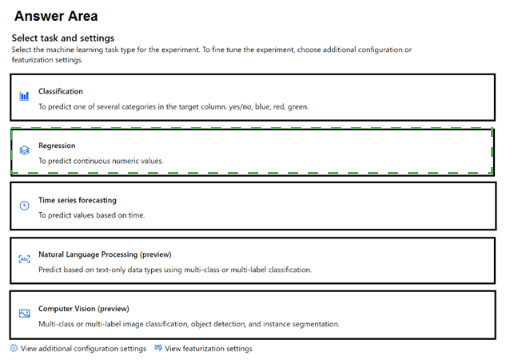

You have a large dataset that contains motor vehicle sales data.

You need to train an automated machine learning (automated ML) model to predict vehicle sale values based on the type of vehicle.

Which task should you select? To answer, select the appropriate task in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

This question asks which automated ML task to use when predicting a numeric value (vehicle sale price) based on input features like vehicle type. Regression is the machine learning task for predicting continuous numeric outcomes.

Correct Option:

Regression:

In automated ML, the regression task is used when the target variable is a continuous numeric value—here, the sale price of a vehicle. It models the relationship between input features (e.g., vehicle type, mileage, age) and that numeric outcome.

Incorrect Options:

Classification:

Used when predicting categories or labels, not continuous numbers.

Time series forecasting:

Used for predictions based on temporal patterns, not general feature-based numeric prediction.

Natural Language Processing:

For text-based prediction tasks, not structured numeric data.

Computer Vision:

For image-based tasks, not tabular sales data.

Reference:

Microsoft Learn: “Choose automated ML task types” states that regression is the appropriate task for predicting a numeric column, such as price, quantity, or score, from structured data.

You have an Azure Machine Learning model that uses clinical data to predict whether a patient has a disease.

You clean and transform the clinical data.

You need to ensure that the accuracy of the model can be proven.

What should you do next?

A. Train the model by using the clinical data.

B. Split the clinical data into two datasets.

C. Train the model by using automated machine learning (automated ML).

D. Validate the model by using the clinical data.

Explanation:

To prove a model's accuracy, you must evaluate it on data it did not see during training. After cleaning and transforming the data, the next step is therefore to split it into training and validation (test) sets before any training takes place, so the model can be evaluated fairly.

Correct Option:

B. Split the clinical data into two datasets:

This is the necessary next step to create training and testing (or validation) sets. Only by evaluating the model on separate, unseen data can you demonstrate its accuracy and detect overfitting.

Incorrect Options:

A. Train the model by using the clinical data:

Training without first splitting data prevents proper validation, as you cannot test on unseen data.

C. Train the model by using automated machine learning (automated ML):

Automated ML still requires a train/test split for evaluation; choosing this skips the essential data preparation step.

D. Validate the model by using the clinical data:

Validation requires a separate dataset; you cannot reliably validate on the same data used for training.

Reference:

Microsoft Learn: “Split data into training and testing sets” explains that data must be split before training to enable model validation and ensure performance accuracy can be measured on independent data.
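The split described above can be sketched with the standard library alone. The function name `split_dataset`, the 30% test fraction, the fixed seed, and the toy records are all assumptions chosen for illustration. In practice a library routine would normally be used instead.

```python
import random


def split_dataset(rows, test_fraction=0.3, seed=42):
    """Shuffle a copy of rows and split it into (train, test) lists."""
    shuffled = list(rows)
    # A seeded generator makes the split reproducible.
    random.Random(seed).shuffle(shuffled)
    test_size = round(len(shuffled) * test_fraction)
    return shuffled[test_size:], shuffled[:test_size]


# Hypothetical cleaned records: (patient_id, has_disease).
records = [(i, i % 2 == 0) for i in range(10)]
train_rows, test_rows = split_dataset(records)
print(len(train_rows), len(test_rows))
```

The model would be trained only on `train_rows`, and its accuracy would then be measured on `test_rows`, which it has never seen.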

Providing contextual information to improve the response quality of a generative AI solution is an example of which prompt engineering technique?

A. providing examples

B. fine-tuning

C. grounding data

D. system messages

Explanation:

Prompt engineering includes techniques to guide AI model responses. Providing contextual information, such as relevant facts or documents, to improve response accuracy is known as grounding—it “grounds” the model’s answers in specific, reliable data.

Correct Option:

C. Grounding data:

This technique enriches prompts with relevant, up‑to‑date, or domain‑specific external information (e.g., documents, databases) so the model generates responses based on that provided context rather than solely on its training data.

Incorrect Options:

A. Providing examples:

This refers to few‑shot prompting, giving the model sample inputs and outputs to illustrate the desired format or reasoning.

B. Fine‑tuning:

A training process that adjusts model weights on a specific dataset, not a prompt‑engineering technique used during inference.

D. System messages:

These set the overall behavior or role for the model (e.g., “You are a helpful assistant”) but do not directly supply contextual data for a specific query.

Reference:

Microsoft Learn: “Prompt engineering techniques” describes grounding as providing external data or context within the prompt to improve relevance and accuracy of generative AI responses.
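A minimal sketch of grounding, assuming the relevant context has already been retrieved: the supplied facts are placed in the prompt ahead of the user's question. The function name `build_grounded_prompt`, the prompt wording, and the sample document are hypothetical. No model is called here; the sketch only shows how the prompt text is assembled.

```python
def build_grounded_prompt(question: str, grounding_docs: list[str]) -> str:
    """Combine supplied context and the user question into one prompt string."""
    context = "\n".join(f"- {doc}" for doc in grounding_docs)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical grounding data, for example a line from a company FAQ.
docs = ["Store hours are 9:00 to 17:00, Monday to Friday."]
prompt = build_grounded_prompt("When is the store open on Tuesday?", docs)
print(prompt)
```

The model's weights are unchanged. Only the prompt carries the extra context, which is what separates grounding from fine-tuning.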

You are building an AI-based loan approval app.

You need to ensure that the app documents why a loan is approved or rejected and makes the report available to the applicant.

This is an example of which Microsoft responsible AI principle?

A. fairness

B. inclusiveness

C. transparency

D. accountability

Explanation:

Providing clear documentation of a decision (approval/rejection reasons) and making it available to the applicant helps the user understand how the AI system arrived at that outcome, which aligns with the principle of transparency.

Correct Option:

C. Transparency:

This principle focuses on making AI systems understandable and their decisions explainable to users. Documenting and sharing the rationale behind a loan decision allows applicants to comprehend the process, building trust and clarity.

Incorrect Options:

A. Fairness:

Addresses avoiding bias and ensuring equitable treatment, but does not specifically mandate explaining decisions to users.

B. Inclusiveness:

Ensures AI benefits all people regardless of ability or background; not directly about documenting decision logic.

D. Accountability:

Involves human oversight and responsibility for AI systems, but the act of documenting and sharing reasons is more closely tied to explainability (transparency).

Reference:

Microsoft's Responsible AI principles define transparency as enabling users to understand how AI systems work and why they make certain decisions, often through clear documentation and explainability features.

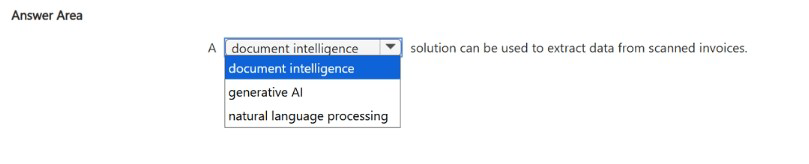

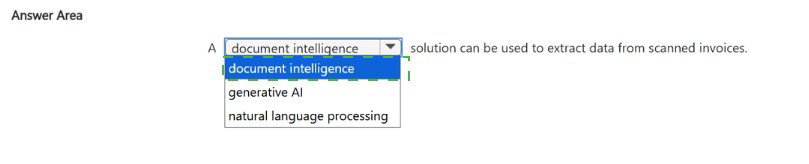

Select the answer that correctly completes the sentence.

Explanation:

The question asks which AI service extracts structured data from scanned documents like invoices. Azure AI Document Intelligence (formerly Form Recognizer) is specifically built to extract text, key-value pairs, tables, and fields from documents with prebuilt models for invoices, receipts, etc.

Correct Option:

Document intelligence:

This service uses machine learning to analyze documents (scanned or digital) and extract structured data, such as invoice numbers, dates, line items, and totals, with high accuracy and minimal manual effort.

Incorrect Options:

Generative AI:

Creates new content (text, images) but is not designed for structured data extraction from scanned invoices.

Natural language processing:

Analyzes and interprets text for insights, sentiment, or entities, but is not optimized for extracting specific fields from structured documents like invoices.

Reference:

Microsoft Learn: “What is Azure AI Document Intelligence?” describes its prebuilt invoice model that automatically extracts key information from scanned or digital invoices without manual configuration.

What should you do to reduce the number of false positives produced by a machine learning classification model?

A. Include test data in the training data.

B. Increase the number of training iterations.

C. Modify the threshold value in favor of false positives.

D. Modify the threshold value in favor of false negatives.

Question Analysis & Core Concept

The question addresses a fundamental challenge in binary classification models: the trade-off between different types of errors. A false positive is when the model incorrectly predicts a "Yes" (or positive) outcome when the true answer is "No." For example, a security model flagging a legitimate transaction as fraud, or a medical test diagnosing a healthy person with a disease.

The primary tool a data scientist has to manage this trade-off, after the model is trained, is the classification threshold. Most models don't just output a hard "Yes/No"; they output a probability (a score between 0 and 1) indicating how confident they are that an instance belongs to the positive class. The default threshold is typically 0.5. Any probability at or above 0.5 results in a "Yes" prediction; below 0.5 results in a "No."

Therefore, to reduce false positives, you must make it harder for the model to predict "Yes." You do this by raising the threshold (e.g., from 0.5 to 0.7 or 0.8). Now, the model only predicts "Yes" when it is highly confident. This will correctly reclassify some of the previous false positives as "No," thereby reducing their number. However, the unavoidable consequence is that some true positives (correct "Yes" predictions) may now fall below the new, higher bar and become false negatives. You are explicitly trading an increase in false negatives for a decrease in false positives.
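The effect of raising the threshold can be sketched with a handful of made-up scores. The probabilities, labels, and the helper name `confusion_counts` are illustrative assumptions. Following the convention above, a score at or above the threshold counts as a "Yes" prediction.

```python
def confusion_counts(scores, labels, threshold):
    """Count false positives and false negatives at a given threshold."""
    false_positives = sum(
        1 for s, y in zip(scores, labels) if s >= threshold and y == 0
    )
    false_negatives = sum(
        1 for s, y in zip(scores, labels) if s < threshold and y == 1
    )
    return false_positives, false_negatives


# Hypothetical predicted probabilities and true labels (1 = positive).
scores = [0.95, 0.85, 0.75, 0.65, 0.60, 0.55, 0.40, 0.20]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

for threshold in (0.5, 0.7):
    fp, fn = confusion_counts(scores, labels, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

With this toy data, moving the threshold from 0.5 to 0.7 removes both false positives but turns one true positive (score 0.60) into a false negative, which is the trade-off described above.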

Detailed Breakdown of All Options

Correct Option:

D. Modify the threshold value in favor of false negatives.

Explanation: This is the correct action. Modifying the threshold "in favor of false negatives" means accepting that the model will miss some true positives (more false negatives) in exchange for requiring greater certainty before it declares a positive result. In practice, you increase the classification threshold. For instance, where a false alarm is costly, such as telling a healthy person they have a severe disease, you might set a high threshold so that very few healthy people are told they are sick (minimizing false positives and patient anxiety), even if some sick individuals are initially missed (increasing false negatives and requiring further tests).

Incorrect Options:

A. Include test data in the training data.

Explanation: This is a catastrophic error in machine learning practice known as data leakage. The test dataset is held back specifically to provide an unbiased estimate of how the model will perform on new, unseen data. If you use the test data to train the model, you are effectively "teaching to the test." The model's performance metrics on that test set will become artificially and deceptively high because it has already seen the answers. This does not improve the model's real-world generalization or intelligently manage the false positive/false negative balance. It creates an overfitted model that will fail in production.

B. Increase the number of training iterations.

Explanation: This refers to epochs in training algorithms like gradient descent. While adjusting iterations can impact model convergence, it is a blunt instrument for this specific problem. Too few iterations can lead to underfitting (poor performance on all metrics), while too many can lead to overfitting, where the model memorizes noise in the training data. Overfitting might appear to reduce false positives on the training set, but it will worsen the model's ability to generalize to new data, making its error rates (including false positives) unpredictable and likely worse in production. It does not provide the controlled, direct adjustment that threshold tuning does.

C. Modify the threshold value in favor of false positives.

Explanation: This is the direct opposite of what the question asks. Modifying in favor of false positives means lowering the threshold (e.g., from 0.5 to 0.3). This makes the model more willing to predict "Yes." It will catch more true positives but will also label more negative instances as positive, thereby increasing the number of false positives. An example would be a spam filter with its threshold set too low, which catches nearly all spam but also sends many legitimate emails to the junk folder.

Practical Example & Reference

Example: Imagine a model that predicts if an email is spam (1) or not (0).

With a threshold of 0.5, it might flag 100 emails as spam. Upon review, 85 are actual spam (true positives) and 15 are important emails (false positives).

To reduce those 15 false positives, you raise the threshold to 0.8. Now the model flags only emails it is very confident about. It might flag only 70 emails as spam, of which 68 are actual spam (true positives) and only 2 are important emails (false positives). You have reduced false positives from 15 to 2. The cost is that 17 spam emails that were previously caught (85 - 68) now reach the inbox as additional false negatives.

Reference:

This concept is covered in the AI-900 learning path under "Model Evaluation." Microsoft documentation on model metrics (Precision, Recall, F1-score, and ROC curves) explains that the classification threshold is a key operational control. Precision (True Positives / (True Positives + False Positives)) is the metric that directly improves when you reduce false positives by raising the threshold. The learning materials emphasize that there is no single "correct" threshold; it must be chosen based on the business cost of each type of error.
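As a worked check of the precision formula, the snippet below plugs in the counts from the spam example above (85 true positives and 15 false positives at a threshold of 0.5; 68 and 2 at a threshold of 0.8). The function name `precision` is just an illustrative helper.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Precision = TP / (TP + FP)."""
    return true_positives / (true_positives + false_positives)


precision_at_05 = precision(85, 15)  # threshold 0.5
precision_at_08 = precision(68, 2)   # threshold 0.8
print(f"{precision_at_05:.3f} {precision_at_08:.3f}")  # prints "0.850 0.971"
```

Recall cannot be computed from the example as given, because the total number of spam emails in the mailbox is not stated.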

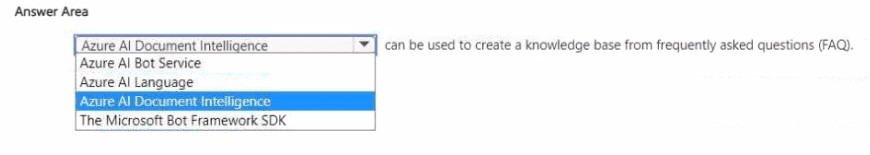

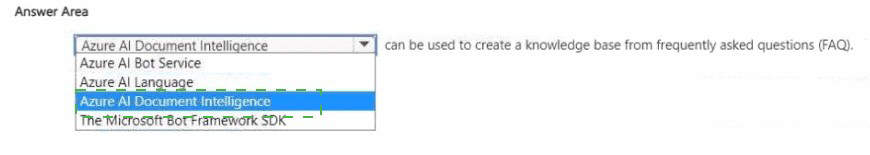

Select the answer that correctly completes the sentence.

Explanation

The question asks which Azure AI service is specifically designed to create a knowledge base from FAQ content. A knowledge base is a structured repository of information (questions and answers) used by a conversational AI (a bot) to respond to user queries. The service that specializes in ingesting FAQ documents, URLs, or manual entries to build such a knowledge base is the Azure AI Bot Service, specifically through its QnA Maker capability, which is now integrated into the Azure AI Bot Service.

Correct Option

Azure AI Bot Service:

This service includes the integrated QnA Maker feature, which is the primary tool for extracting question-and-answer pairs from structured FAQ documents (PDF, Word, Excel) or web pages to build a searchable knowledge base. This knowledge base can then be deployed as an endpoint for a bot to provide instant, accurate answers to common user questions. The process is low-code and focuses on curating content, not on programming dialog flows from scratch.

Incorrect Options

Azure AI Document Intelligence:

This service (formerly Form Recognizer) is built for document processing and data extraction from forms, invoices, receipts, and contracts. It excels at pulling structured fields (like dates, amounts, names) and tables, but it is not designed to semantically parse FAQ documents to build a conversational knowledge base. It could be used to extract text from an FAQ PDF, but the subsequent step of structuring it into a usable QnA resource belongs to the Bot Service/QnA Maker.

Azure AI Language:

This service provides advanced natural language understanding features like sentiment analysis, entity recognition, key phrase extraction, and custom text classification. While it can analyze text, it does not have a dedicated, out-of-the-box feature to ingest an FAQ and create a queryable knowledge base for a bot. Its custom question answering feature is a component that is part of the broader Azure AI Bot Service offering.

The Microsoft Bot Framework SDK:

This is a software development kit used by developers to build, test, and deploy sophisticated conversational bots with complex dialog logic. While you can program a bot that accesses a knowledge base using the SDK, the SDK itself is not the service that creates the knowledge base from an FAQ. The knowledge base creation is a separate, higher-level service (Azure AI Bot Service with QnA).

Reference:

Microsoft Learn documentation, in the "Create a QnA knowledge base" module for Azure AI Bot Service, explicitly states that you can use the service to create a knowledge base by importing existing FAQ URLs, documents, or editorial content, which forms the core of a QnA bot.

You need to identify harmful content in a generative AI solution that uses Azure OpenAI Service.

What should you use?

A. Face

B. Video Analysis

C. Language

D. Content Safety

Explanation

The question is about identifying harmful content (e.g., hate, violence, self‑harm, sexual content) within a generative AI solution built on Azure OpenAI Service. This requires a dedicated safety service that can detect and filter harmful text prompts and generated outputs. Azure AI Content Safety is the specialized Azure AI service designed exactly for this purpose—it screens text and images for harmful material using classifiers.

Correct Option

D. Content Safety:

Azure AI Content Safety offers pre‑trained models that detect harmful content across multiple categories and severity levels. It can be integrated directly into the Azure OpenAI Service workflow (via API or in Azure OpenAI Studio) to filter both user prompts and AI-generated responses, ensuring the solution aligns with responsible AI principles.

Incorrect Options

A. Face:

Part of Azure AI Vision, this service is for facial detection, recognition, and analysis (e.g., emotions, landmarks). It does not analyze text for harmful content.

B. Video Analysis:

This refers to Azure AI Video Indexer or similar services, which extract insights from video/audio (transcripts, faces, scenes). It is not designed to filter harmful textual content in a generative AI chat or text‑completion scenario.

C. Language:

While Azure AI Language has features like sentiment analysis and entity recognition, it does not include the comprehensive, multi‑category harmful content detection that Azure AI Content Safety provides. For a generative AI solution, Content Safety is the explicitly recommended and built‑in safety tool.

Reference

Microsoft Learn documentation on “Azure AI Content Safety” states it is designed to detect harmful user‑generated and AI‑generated content across text and images. The Azure OpenAI Service integration guide specifically recommends using Content Safety filters to moderate prompts and completions.
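The idea of filtering by category and severity level can be sketched as follows. This is not the Azure AI Content Safety SDK. The result dictionary, the severity values, the `max_allowed` cutoff, and the function name `blocked_categories` are hypothetical stand-ins for whatever a content-safety classifier returns.

```python
def blocked_categories(severities: dict[str, int], max_allowed: int = 2) -> list[str]:
    """Return the categories whose severity exceeds the allowed maximum."""
    return sorted(cat for cat, sev in severities.items() if sev > max_allowed)


# Hypothetical per-category severity scores for one prompt (higher = more severe).
prompt_result = {"Hate": 0, "SelfHarm": 0, "Sexual": 0, "Violence": 4}

flagged = blocked_categories(prompt_result)
if flagged:
    print("Blocked due to:", ", ".join(flagged))
else:
    print("Allowed")
```

The same check could be applied to both the user's prompt and the model's completion, matching the two filtering points mentioned above.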

Select the answer that correctly completes the sentence.

Explanation

The scenario describes a chatbot interpreting a user’s natural language question (“will it rain?”) and responding with relevant information (a weather report). This interaction relies on understanding human language, interpreting intent, and retrieving or generating a relevant text‑based response—which is the core function of natural language processing (NLP). NLP enables machines to read, decipher, understand, and make sense of human language in a valuable way.

Correct Option

Natural language processing (NLP):

This is the AI capability that allows a chatbot to parse the user’s question, extract its meaning (intent recognition), and formulate or fetch an appropriate linguistic response. NLP encompasses subtasks like language understanding, entity recognition, and dialog management, all of which are used in conversational AI systems like chatbots.

Incorrect Options

Computer vision:

This field of AI deals with enabling machines to “see” and interpret visual information from images or videos. It is irrelevant to a text‑based conversational interaction about weather.

Prediction and forecasting:

While generating a weather report itself involves meteorological forecasting, the question is focused on the chatbot’s capability to handle the user’s query. The chatbot is not performing the weather prediction; it is using NLP to understand the request and then presenting the results from a separate forecasting system or data source.

Reference

Microsoft Learn AI‑900 learning content defines natural language processing as the branch of AI that helps computers understand, interpret, and respond to human language in a useful way, with common applications including chatbots, translation, and sentiment analysis.
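To make intent recognition concrete, here is a deliberately simplified sketch that maps an utterance to an intent by keyword overlap. The intent names, keyword lists, and function name `detect_intent` are hypothetical. A real conversational AI service would use a trained language model rather than keyword matching.

```python
import re

# Hypothetical keyword lists per intent; a real system would use a trained model.
INTENT_KEYWORDS = {
    "get_weather": {"rain", "weather", "sunny", "forecast"},
    "greeting": {"hello", "hi"},
}


def detect_intent(utterance: str) -> str:
    """Return the first intent whose keywords appear in the utterance."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return "unknown"


print(detect_intent("Will it rain tomorrow?"))  # prints "get_weather"
```

Once the intent is known, the chatbot would fetch the forecast from a separate data source and phrase the reply, as described above.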
