Topic 1: Case Study Alpine Ski House

You are developing an app that will be published to Microsoft AppSource.

The app requires code analyzers to enforce some rules. You plan to add the analyzers to the settings.json file.

You need to activate the analyzers for the project.

Which three code analyzers should you activate to develop the app for AppSource? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. CodeCop

B. UICop

C. a custom rule set

D. PerTenantExtensionCop

E. AppSourceCop


Explanation:

When developing an app for Microsoft AppSource in Business Central, you enable the code analyzers that check the rules Microsoft applies during technical validation. For an AppSource app, these are CodeCop, UICop, and AppSourceCop.

Correct Options:

A. CodeCop –

CodeCop enforces the official AL coding guidelines, such as naming conventions, code structure, and common coding mistakes. Microsoft's guidance is to build AppSource apps with CodeCop enabled, together with UICop and AppSourceCop.

B. UICop –

UICop enforces rules for extensions that customize the web client, such as rules for page controls, actions, and layout patterns. It helps ensure that the extension's UI changes follow Microsoft's guidelines and do not break the client experience.

E. AppSourceCop –

AppSourceCop is the primary analyzer for AppSource submissions. It enforces rules specific to the AppSource ecosystem, such as checks for breaking changes between versions and the mandatory affix on object and member names.

Incorrect Options:

C. a custom rule set –

A custom rule set is not an analyzer. It only adjusts how the rules of the enabled analyzers are reported, for example by changing a rule's severity. It cannot replace the validation performed by CodeCop, UICop, and AppSourceCop.

D. PerTenantExtensionCop –

PerTenantExtensionCop enforces rules for per-tenant extensions, which are deployed to a single tenant instead of being published to AppSource. Some of its rules conflict with AppSource requirements; for example, it checks that object IDs fall in the free range used by per-tenant extensions, while an AppSource app uses the range that Microsoft assigns to it.
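In the AL Language extension for Visual Studio Code, analyzers are switched on through the `al.enableCodeAnalysis` and `al.codeAnalyzers` settings in settings.json, with each built-in analyzer written as a `${Name}` token. The minimal Python sketch below generates that settings.json fragment for a list of analyzer names; the `build_al_settings` helper and its name check are hypothetical conveniences for illustration, not part of the AL tooling.

```python
import json

# Built-in analyzer names accepted in al.codeAnalyzers (as ${Name} tokens).
KNOWN_ANALYZERS = {"CodeCop", "UICop", "AppSourceCop", "PerTenantExtensionCop"}


def build_al_settings(analyzers):
    """Return settings.json content (a JSON string) that enables the given analyzers.

    Hypothetical helper: raises ValueError for names it does not recognize.
    """
    unknown = set(analyzers) - KNOWN_ANALYZERS
    if unknown:
        raise ValueError(f"Unknown analyzer(s): {sorted(unknown)}")
    settings = {
        # Turn on code analysis in the AL Language extension.
        "al.enableCodeAnalysis": True,
        # Reference each built-in analyzer with the ${Name} token syntax.
        "al.codeAnalyzers": ["${" + name + "}" for name in analyzers],
    }
    return json.dumps(settings, indent=4)
```

Pass the analyzers selected in the correct options; the name check rejects a misspelling such as UlCop before it reaches settings.json.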

Reference:

Microsoft Learn: Code Analysis for AL

You are developing an app.

You plan to publish the app to Microsoft AppSource.

You need to assign an object range for the app.

Which object range should you use?

A. custom object within the range 50000 to 59999

B. custom object within the range 50000 to 99999

C. divided by countries, using a specific country within the range 100000 to 999999

D. an object range within the range of 70000000 to 74999999 that is requested from Microsoft

E. free object within the standard range 1 to 49999

Explanation:

For Business Central apps published to Microsoft AppSource, object IDs must be assigned from a specific licensed range to avoid conflicts with other apps and Microsoft objects. Developers cannot use free or custom ranges arbitrarily. Microsoft requires a dedicated range that is requested and assigned per app to ensure global uniqueness and compliance.

Correct Option:

D. an object range within the range of 70000000 to 74999999 that is requested from Microsoft

Apps published to AppSource must use object IDs in the range 70,000,000 to 74,999,999, which is reserved for AppSource apps. Developers must submit a request to Microsoft to obtain a unique, assigned block within this range for their solution. Because every block is unique, object IDs do not collide when several apps are installed in the same tenant, and the app meets the certification requirement.

Incorrect Options:

A. custom object within the range 50000 to 59999 –

The 50,000–59,999 range lies within the free range (50,000 to 99,999) used for per-tenant extensions and customer-specific development. It is not allowed for AppSource apps because it is not globally unique and can cause conflicts when multiple apps are installed.

B. custom object within the range 50000 to 99999 –

The 50,000–99,999 range is designated for customer-specific and per-tenant extensions. AppSource apps cannot use this range; they must use object IDs from the 70,000,000–74,999,999 range assigned by Microsoft.

C. divided by countries, using a specific country within the range 100000 to 999999 –

The 100,000–999,999 range is reserved for Microsoft's country/region-specific localization and regulatory features. It is not available for AppSource apps or partner extensions.

E. free object within the standard range 1 to 49999 –

The 1–49,999 range is reserved for Microsoft's Base Application objects. Partners cannot create objects in this range, so it is not available to AppSource apps or any other extension.
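To make the ranges concrete, the minimal Python sketch below classifies an object ID using only the ranges described in this section and checks that an ID lies inside an assigned AppSource block. The `assigned_range` parameter and the sample block used with it are hypothetical; Microsoft assigns the actual block on request.

```python
# Object ID ranges as described in this section (inclusive bounds).
RANGES = [
    (1, 49_999, "Base Application"),
    (50_000, 99_999, "per-tenant extensions"),
    (100_000, 999_999, "Microsoft localizations"),
    (70_000_000, 74_999_999, "AppSource apps"),
]

APPSOURCE_MIN, APPSOURCE_MAX = 70_000_000, 74_999_999


def classify_object_id(object_id: int) -> str:
    """Return the range an object ID falls in, or 'other' if it is not covered here."""
    for low, high, label in RANGES:
        if low <= object_id <= high:
            return label
    return "other"


def valid_for_appsource(object_id: int, assigned_range: tuple) -> bool:
    """True if the ID is inside the assigned block and the block is inside the AppSource range.

    `assigned_range` is a hypothetical (low, high) pair standing in for the block Microsoft assigns.
    """
    low, high = assigned_range
    return APPSOURCE_MIN <= low <= object_id <= high <= APPSOURCE_MAX
```

For example, an ID of 50,100 is classified as a per-tenant extension ID and fails the AppSource check no matter which block is passed in.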

Reference:

Microsoft Learn: Object Ranges in Business Central

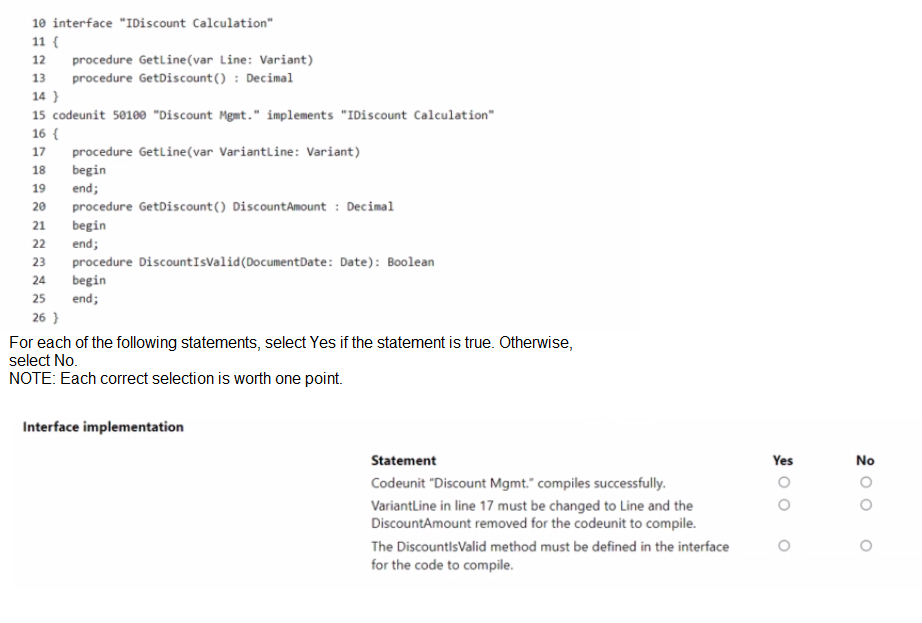

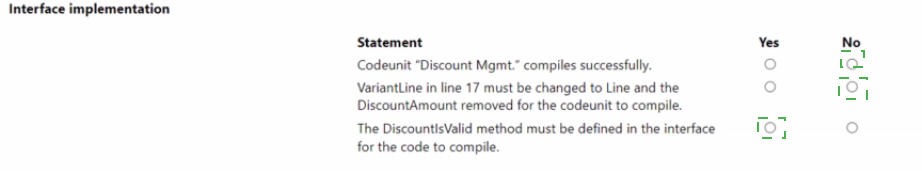

You have a per-tenant extension that contains the following code.

Explanation:

This question tests your understanding of interface implementation rules in AL for Business Central. When a codeunit implements an interface, it must implement all methods defined in that interface with exact signatures. Additional methods are allowed, but the implemented methods must match the interface definition precisely.

Correct Option:

Codeunit "Discount Mgmt." compiles successfully. – No

The codeunit will NOT compile successfully. The method signature for GetDiscount() in the implementation (line 20) includes an extra parameter name "DiscountAmount" after the return type. The correct syntax should be procedure GetDiscount(): Decimal. Additionally, the parameter name in GetLine() is "VariantLine" but the interface expects "Line". While parameter names are not required to match, the syntax error in GetDiscount() will cause compilation failure.

Incorrect Options:

VariantLine in line 17 must be changed to Line and the DiscountAmount removed for the codeunit to compile. – Yes

This statement is partially accurate. The VariantLine parameter name does not need to match the interface name; parameter names are not validated for equality. However, the DiscountAmount after the return type in line 20 is invalid syntax and must be removed. The statement correctly identifies the DiscountAmount issue, though the parameter name requirement is technically incorrect.

The DiscountIsValid method must be defined in the interface for the code to compile. – No

This statement is false. A codeunit implementing an interface may contain additional methods beyond those defined in the interface. The DiscountIsValid method is an extra method not defined in the interface, and this is perfectly valid. Only the methods defined in the interface must be implemented; additional methods are optional and allowed.

Reference:

Microsoft Learn: Interfaces in AL

A company has a task that is performed infrequently. Users often need to look up the procedure to complete the task.

The company requires a wizard that leads users through a sequence of steps to complete the task.

You need to create the page for the wizard.

Which page type should you use?

A. NavigatePage

B. Card

C. RoleCenter

D. List

Explanation:

In Business Central, a wizard that guides users through a sequence of steps to complete a task is implemented using a NavigatePage. This page type is specifically designed for assisted setup wizards and guided task flows, providing a step-by-step interface with navigation controls like Next, Back, and Finish.

Correct Option:

A. NavigatePage –

NavigatePage is the correct page type for creating wizards and assisted setup experiences in Business Central. It shows one group of content at a time and displays actions marked with InFooterBar = true as buttons at the bottom of the page, typically Back, Next, and Finish. The developer controls which step is visible, usually by toggling group visibility based on a step variable. This page type suits infrequent, procedure-driven tasks where users need guidance through a defined sequence of steps.
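Because the wizard logic lives in the page, the developer typically tracks the current step and enables Back, Next, and Finish accordingly. The minimal Python sketch below models that step logic in isolation; `WizardState` and its methods are hypothetical names for illustration, and an actual NavigatePage expresses the same idea in AL.

```python
class WizardState:
    """Hypothetical model of the step logic behind a NavigatePage wizard.

    Each step corresponds to one group on the page; Back, Next, and Finish
    are enabled or disabled depending on the current step.
    """

    def __init__(self, step_count: int):
        if step_count < 1:
            raise ValueError("a wizard needs at least one step")
        self.step_count = step_count
        self.step = 1  # current step, 1-based

    def back_enabled(self) -> bool:
        return self.step > 1

    def next_enabled(self) -> bool:
        return self.step < self.step_count

    def finish_enabled(self) -> bool:
        # Finish is offered only on the last step.
        return self.step == self.step_count

    def next(self) -> None:
        if not self.next_enabled():
            raise RuntimeError("already on the last step")
        self.step += 1

    def back(self) -> None:
        if not self.back_enabled():
            raise RuntimeError("already on the first step")
        self.step -= 1
```

Keeping the enablement rules in one place means each action's enabled state can be derived from the current step instead of being set by hand in every action.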

Incorrect Options:

B. Card –

A Card page is used to view and edit a single record, such as a customer or item. It is not designed for multi-step wizard workflows. Card pages lack the built-in step navigation and instructional framework required for guided procedures.

C. RoleCenter –

A RoleCenter page serves as a personalized dashboard for a specific user role. It displays KPIs, charts, and activity tiles. It is not intended for step-by-step task completion wizards and cannot provide sequential guided navigation.

D. List –

A List page displays multiple records in a tabular format for viewing and selection. It is used for browsing, filtering, and opening individual records. List pages do not support the wizard-style, step-by-step interaction pattern.

Reference:

Microsoft Learn: Page Types in Business Central

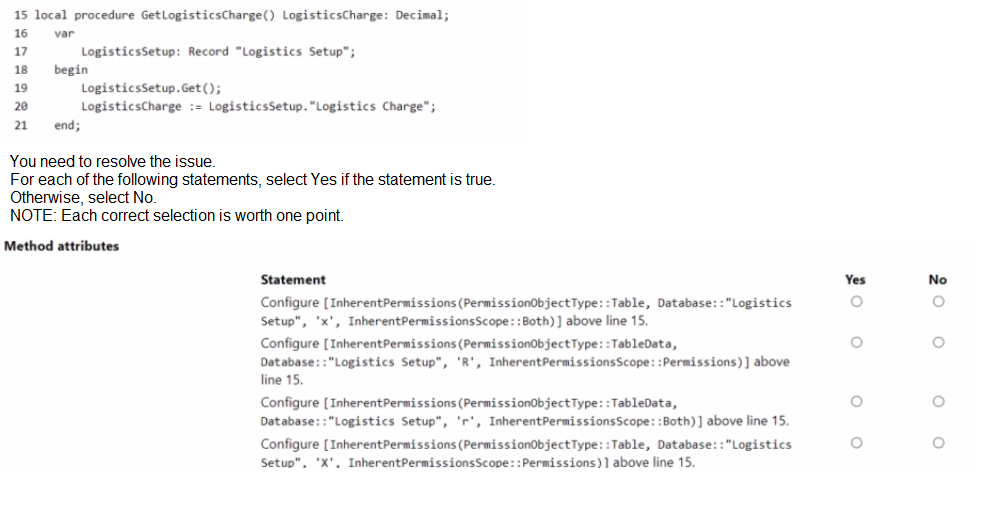

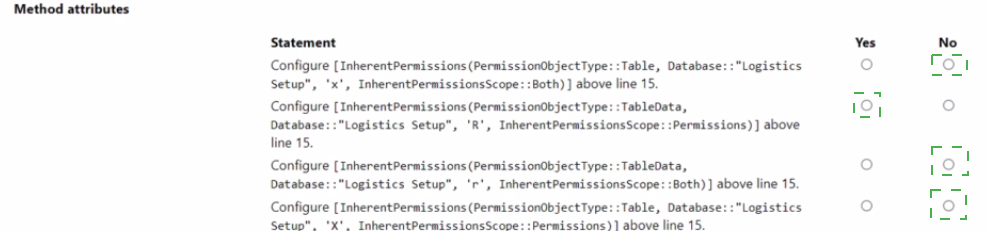

A company uses Business Central. The company has branches in different cities.

A worker reports that each time they generate a daily summary report, they get an error message that they do not have permissions.

Explanation:

The error occurs because the user lacks read permissions on the Logistics Setup table. The procedure GetLogisticsCharge reads the Logistics Charge field from this table. To resolve this without assigning full table permissions, the InherentPermissions attribute can grant specific permissions to the method. The correct attribute must specify TableData, read permission ('r'), and the appropriate scope.

Correct Option:

Configure [InherentPermissions(PermissionObjectType::TableData, Database::"Logistics Setup", 'r', InherentPermissionsScope::Both)] above line 15. – Yes

This statement is correct. InherentPermissions grants the specified permissions to the method while it runs, without requiring them to be assigned to the user.

PermissionObjectType::TableData targets the records in the table, and 'r' grants read access, which is exactly what this method needs. InherentPermissionsScope::Both applies the inherent permission to both permission checks and license entitlement checks, so neither check blocks the read.

Incorrect Options:

Configure [InherentPermissions(PermissionObjectType::Table, Database::"Logistics Setup", 'x', InherentPermissionsScope::Both)] above line 15. – No

This statement is incorrect. PermissionObjectType::Table refers to the table object itself rather than the records stored in it, and 'x' is the execute permission on that object. Neither grants read access to the table data, so this would not resolve the permission error.

Configure [InherentPermissions(PermissionObjectType::Table, Database::"Logistics Setup", 'x', InherentPermissionsScope::Permissions)] above line 15. – No

This statement is incorrect for the same reasons: Table targets the object instead of its data, and 'x' does not grant read access. In addition, InherentPermissionsScope::Permissions limits the grant to permission checks and does not cover license entitlements.
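The two dimensions the options differ on, the object type and the permission letter, can be sketched as a toy check in Python. This is a hypothetical model written for illustration, not the Business Central permission system: it ignores scope, direct versus indirect permissions, and license entitlements.

```python
from typing import NamedTuple


class Grant(NamedTuple):
    """Toy stand-in for one InherentPermissions grant (not a real AL type)."""
    object_type: str  # "TableData" = the records in a table, "Table" = the table object
    object_name: str
    permissions: str  # permission letters, e.g. "r", "rimd", or "x"


def grants_read(grant: Grant, table: str) -> bool:
    """Toy check: does `grant` let code read records from `table`?"""
    return (
        grant.object_type == "TableData"
        and grant.object_name == table
        and "r" in grant.permissions.lower()  # letter case is ignored in this toy model
    )
```

Only a TableData grant with 'r' passes; a Table grant with 'x' fails on both dimensions, matching the reasoning for the two incorrect options.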

Reference:

Microsoft Learn: InherentPermissions Attribute

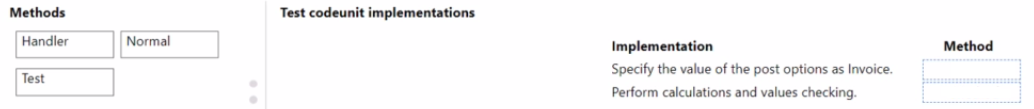

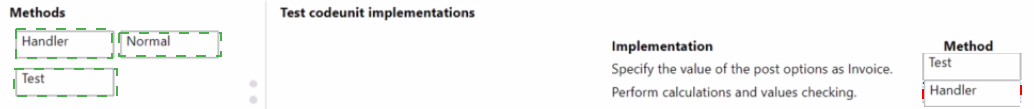

You are developing a test application to test the posting process of a sales order. You must provide the following implementation:

• Specify the value of post options (dialog: Ship, Invoice, Ship & Invoice) as Invoice.

• Perform calculations and values checking.

You need to complete the development of the test codeunit.

Which methods should you use? To answer, move the appropriate methods to the correct implementation. You may use each method once, more than once, or not at all. You may need to move the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Explanation:

To test the sales order posting process in Business Central, the test has to answer the Post dialog (Ship, Invoice, Ship & Invoice) without user interaction and then verify the posted results. A test codeunit can contain test methods, handler methods, and normal (helper) methods, and each requirement maps to one of these kinds.

Correct Option:

Specify the value of the post options (dialog: Ship, Invoice, Ship & Invoice) as Invoice – Handler method

The Post action asks which option to use through a STRMENU dialog. A handler method declared with the StrMenuHandler attribute intercepts that dialog during the test and sets its var Choice parameter. The options are numbered from 1, so Choice := 2 selects Invoice. The test method links the handler through the HandlerFunctions attribute, so the posting runs unattended.

Perform calculations and values checking – Normal method

Normal methods are helper methods in the test codeunit. The test runner does not execute them as tests, and they do not handle UI. They hold reusable logic such as calculating expected amounts and comparing them with the posted values, for example with Assert.AreEqual from the Assert codeunit.

Incorrect Options:

Test method –

A test method is the entry point that the test runner executes. It orchestrates the scenario (create the order, post it, call the verification helpers) and references the handlers, but it does not answer the dialog itself, and the calculation and checking logic belongs in a normal helper method that the test method calls.
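A StrMenuHandler answers the dialog with a 1-based Choice that follows the order of the comma-separated option string, where & marks an access key. The minimal Python sketch below computes that Choice for a given caption. The option string in the usage, `&Ship,&Invoice,Ship &and Invoice`, is an assumption modeled on the dialog captions in this question, not copied from the Base Application.

```python
def strmenu_choice(options: str, caption: str) -> int:
    """Return the 1-based choice for `caption` in a comma-separated STRMENU option string.

    '&' (access-key marker) is ignored when comparing captions.
    Raises ValueError if the caption is not one of the options.
    """
    labels = [opt.replace("&", "").strip() for opt in options.split(",")]
    return labels.index(caption) + 1  # list.index raises ValueError when missing
```

With the assumed option string, `strmenu_choice("&Ship,&Invoice,Ship &and Invoice", "Invoice")` returns 2, which is the value the handler assigns to Choice.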

Reference:

Microsoft Learn: Testing the Application with Test Codeunits

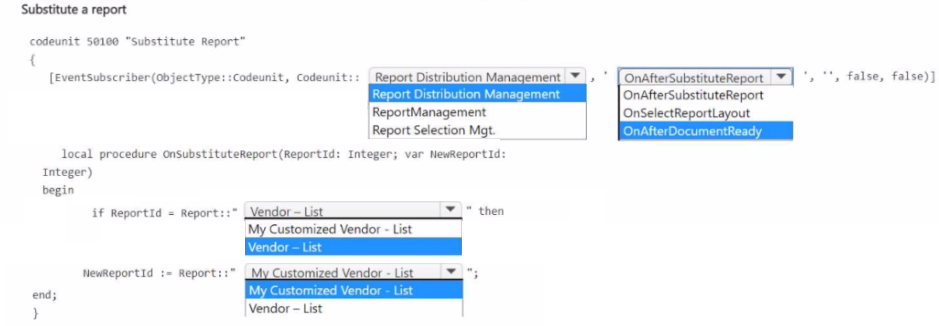

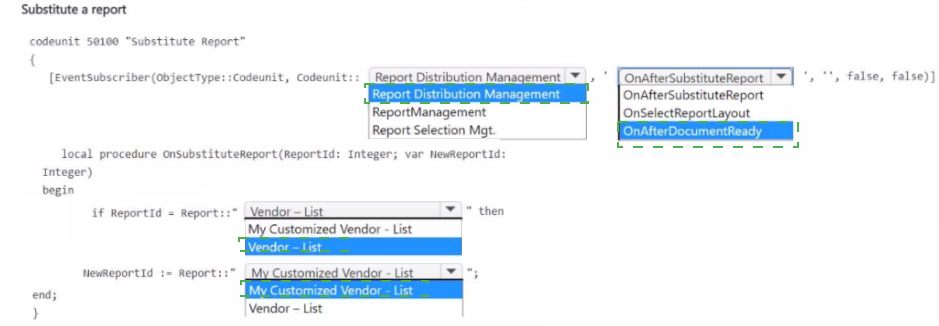

A company uses the Vendor - List report from the Base Application.

The company has new requirements that cannot be met by extending the Vendor - List report.

You create a new report named My Customized Vendor - List.

You need to replace the Vendor - List report with My Customized Vendor - List.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

To replace a standard report with a customized version in Business Central, you subscribe to the OnAfterSubstituteReport event, which is published by the ReportManagement codeunit. The subscriber receives the ID of the requested report (ReportId) and a var NewReportId parameter; assigning your report's ID to NewReportId makes Business Central run your report instead. The subscriber is a procedure in a codeunit, decorated with the EventSubscriber attribute.

Correct Option:

First dropdown (EventSubscriber object type): Codeunit

The event is published in a codeunit (Codeunit::ReportManagement), so the subscriber must specify ObjectType::Codeunit.

Second dropdown (EventSubscriber ID/name): ReportManagement

ReportManagement is the codeunit that publishes the OnAfterSubstituteReport event. The subscriber must reference it to hook into report substitution.

Third dropdown (Method parameter): var NewReportId: Integer

The OnAfterSubstituteReport event includes a var NewReportId: Integer parameter. Because it is passed by reference, changing its value redirects the report request to your custom report. The condition checks ReportId and, when it matches Vendor - List, assigns the new ID.

Fourth dropdown (Assignment): Report::"My Customized Vendor - List"

Inside the if block, you assign the ID of your custom report to NewReportId. Report:: followed by the quoted report name is the AL syntax for referencing a report object.

Incorrect Options:

EventSubscriber object type: Page, Query, XmlPort –

The event originates from a codeunit, not from these object types. Subscribing to codeunit events requires ObjectType::Codeunit.

EventSubscriber ID/name: Report Distribution Management, Report Selection Mgt. –

These are related to reporting, but they do not publish the OnAfterSubstituteReport event. The correct publisher is ReportManagement.

Method parameter: ReportId: Integer – This is an input parameter, not a var parameter. Changing it has no effect outside the method. The substitution requires modifying the var NewReportId parameter.

Assignment: "Vendor - List" –

Assigning the original report ID does not achieve substitution. The goal is to replace it with the customized version, not keep the original.
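The difference between ReportId and var NewReportId is the difference between passing by value and passing by reference. The Python sketch below models it with a mutable holder. `IntRef`, `run_report`, and both report IDs are hypothetical stand-ins for illustration, not Business Central APIs, and the sketch assumes NewReportId starts out equal to the requested ID, which is a simplification.

```python
from typing import Callable, List


class IntRef:
    """Mutable holder standing in for an AL `var Integer` parameter."""

    def __init__(self, value: int):
        self.value = value


Subscriber = Callable[[int, IntRef], None]

VENDOR_LIST_ID = 301        # hypothetical ID for "Vendor - List"
MY_VENDOR_LIST_ID = 50_100  # hypothetical ID for "My Customized Vendor - List"


def run_report(report_id: int, subscribers: List[Subscriber]) -> int:
    """Hypothetical publisher: raise the event, then return the ID that would run."""
    new_report_id = IntRef(report_id)  # assumed to start as the requested report
    for subscriber in subscribers:
        subscriber(report_id, new_report_id)
    return new_report_id.value


def substitute_vendor_list(report_id: int, new_report_id: IntRef) -> None:
    # Mirrors: if ReportId = Report::"Vendor - List" then NewReportId := Report::"My Customized Vendor - List";
    if report_id == VENDOR_LIST_ID:
        new_report_id.value = MY_VENDOR_LIST_ID


def changes_by_value_parameter(report_id: int, new_report_id: IntRef) -> None:
    # Reassigning the by-value parameter only rebinds the local name; the caller never sees it.
    report_id = MY_VENDOR_LIST_ID
```

Running `run_report(VENDOR_LIST_ID, [changes_by_value_parameter])` still returns the original ID, which is why the substitution has to go through the var parameter.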

Reference:

Microsoft Learn: Substituting Reports

A company uses Azure Application Insights for Business Central online in its production environment.

A user observes that some job queues go into the failed state and require manual intervention.

You need to analyze job queue lifecycle telemetry.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

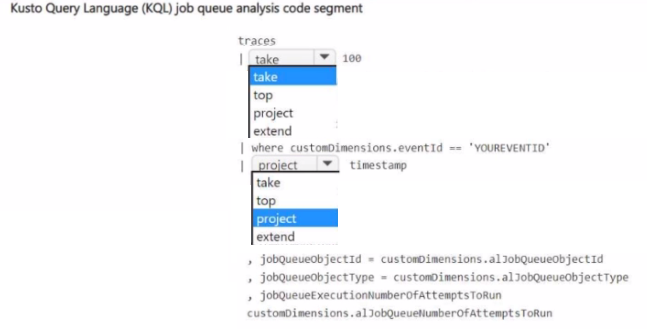

Explanation:

This Kusto Query Language (KQL) question tests your ability to filter and project telemetry data from Application Insights for Business Central job queue analysis. You need to filter traces for the specific job queue event ID, then project relevant timestamp and job queue custom dimensions for troubleshooting.

Correct Option:

First dropdown: where

The first blank requires filtering the traces table to only rows where customDimensions.eventId equals 'YOUREVENTID'. The where operator is used to filter records based on a condition. This isolates job queue lifecycle events from other telemetry traces.

Second dropdown: project

After filtering, you need to select specific columns to display. The project operator selects a subset of columns. Here you need timestamp, jobQueueObjectId, jobQueueObjectType, and jobQueueExecutionNumberOfAttemptsToRun from customDimensions.

Incorrect Options:

take – take returns up to the specified number of rows, with no guarantee which rows are returned. It does not filter by condition, so it cannot isolate job queue events, and using take before where could drop relevant events. If used at all, it belongs after the filter to limit the sample size.

top – top returns the first N rows sorted by a specified column and requires a by clause (for example, top 10 by timestamp). It is not meant for filtering by event or selecting columns.

extend – extend creates calculated columns or aliases. While you might extend custom dimension fields for readability, the primary operation needed here is column selection, which is project.
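To make the division of labor between the two operators concrete, the Python sketch below mimics where and project over telemetry rows held as dictionaries: where keeps whole rows that match, project keeps every row but only the named columns. The function names and sample rows are hypothetical; in Application Insights, the same pipeline is written in KQL against the traces table.

```python
from typing import Any, Dict, Iterable, List

Row = Dict[str, Any]


def where_event(rows: Iterable[Row], event_id: str) -> List[Row]:
    """Analogue of `| where customDimensions.eventId == '<id>'`: keeps matching rows, all columns."""
    return [r for r in rows if r.get("customDimensions", {}).get("eventId") == event_id]


def project(rows: Iterable[Row], dims: List[str]) -> List[Row]:
    """Analogue of `| project timestamp, <dims>`: keeps the same rows, only the named columns."""
    return [
        {"timestamp": r["timestamp"], **{d: r["customDimensions"].get(d) for d in dims}}
        for r in rows
    ]
```

Filtering first and projecting second mirrors the order of the completed query: fewer rows reach the projection, and only the job queue fields remain in the output.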

Reference:

Microsoft Learn: Analyzing Business Central Telemetry with KQL

You need to allow debugging in an extension to view the source code. In which file should you specify the value of the allowDebugging property?

A. settings.json

B. rad.json

C. app.json

D. launch.json

Explanation:

In Business Central AL development, extension properties such as resource exposure, dependencies, and debugging permissions are defined in the app.json manifest file. The allowDebugging property controls whether the extension can be debugged and its source code viewed. This property must be set to true in app.json to enable source code debugging.

Correct Option:

C. app.json

The app.json file is the application manifest for an AL extension. It contains metadata such as the ID, name, publisher, version, dependencies, and runtime settings. In current runtime versions, "allowDebugging" is set inside the "resourceExposurePolicy" object of this file (older versions used the showMyCode property instead). When it is set to true, a debugger can be attached and the extension's source code viewed during a debugging session.
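A minimal Python sketch that reads an app.json manifest and reports whether debugging is allowed, assuming the current layout where allowDebugging sits under resourceExposurePolicy. The sketch looks only there, and treating a missing property as false is a simplification made for this example.

```python
import json


def debugging_allowed(app_json_text: str) -> bool:
    """Return True if app.json sets resourceExposurePolicy.allowDebugging to true.

    Only the resourceExposurePolicy object is inspected; a missing property counts as False here.
    """
    manifest = json.loads(app_json_text)
    policy = manifest.get("resourceExposurePolicy", {})
    return policy.get("allowDebugging") is True
```

A manifest that places allowDebugging at the root instead of under resourceExposurePolicy is reported as not allowing debugging by this check.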

Incorrect Options:

A. settings.json –

The settings.json file configures the AL Language extension and user-specific IDE behavior in Visual Studio Code. It controls compiler options, code analysis rules, and formatting preferences. It does not contain extension packaging or permission properties like allowDebugging.

B. rad.json –

There is no standard file named rad.json in Business Central AL development. This is a distractor option. The correct manifest file for extension configuration is always app.json.

D. launch.json –

The launch.json file configures debugging launch profiles in VS Code. It specifies server instances, startup objects, and attachment types. It controls how debugging is launched, but cannot grant permission to view source code—that permission is set in the extension manifest (app.json).

Reference:

Microsoft Learn: JSON Files in AL

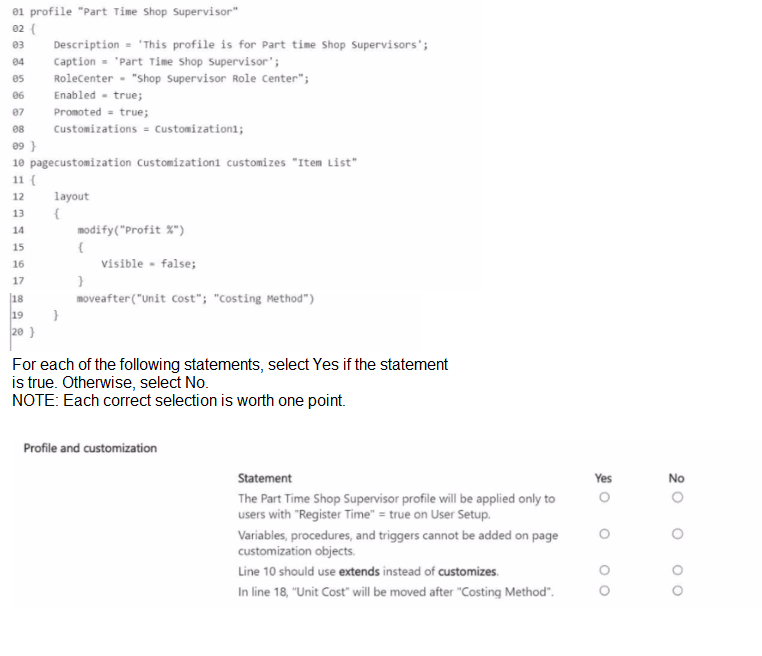

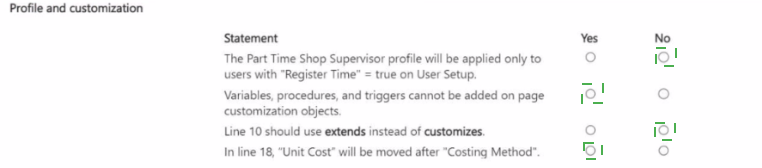

A developer creates a profile for part-time shop supervisors and adds customizations.

You plan to add new requirements to the profile.

You need to analyze the code to understand the profile and make sure there are no errors.

Explanation:

This question tests your understanding of Profile and PageCustomization objects in AL. Profiles define user roles and role centers. PageCustomization objects modify page layouts without extending the page object itself. They have specific syntax rules and limitations.

Correct Option:

Variables, procedures, and triggers cannot be added on page customization objects. – Yes

PageCustomization objects are strictly declarative. They can only rearrange and modify the existing controls and actions of the target page, for example with modify, moveafter, and movebefore. They cannot contain AL code such as variables, procedures, or triggers. To add code, use a page extension.

Incorrect Options:

The Part Time Shop Supervisor profile will be applied only to users with "Register Time" = true on User Setup. – No

There is no connection between profile assignment and the "Register Time" field on User Setup. Profiles are assigned to users manually by an administrator or via user groups. The "Register Time" field controls time sheet registration, not profile visibility or assignment.

Line 10 should use extends instead of customizes. – No

PageCustomization objects correctly use the customizes keyword to target an existing page. The extends keyword is used for Page Extensions, which add new controls or logic. PageCustomization is specifically for modifying layout without extending the page object itself, so customizes is correct.

In line 18, "Unit Cost" will be moved after "Costing Method". – No

The syntax is moveafter(Anchor; Controls), where the first argument is the anchor and the controls after the semicolon are the ones being moved. In line 18, "Unit Cost" is the anchor and "Costing Method" is the control being moved, so "Costing Method" will be placed after "Unit Cost", not the other way around. The statement reverses the actual effect.
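The behavior of moveafter can be checked with a small Python model of a page's field order. `move_after` is a hypothetical helper that mirrors moveafter(Anchor; Control1, Control2) by placing the listed controls directly after the anchor; the field order used in the usage is invented for illustration.

```python
from typing import List


def move_after(layout: List[str], anchor: str, *controls: str) -> List[str]:
    """Return a new layout with `controls` placed directly after `anchor`, in the given order.

    Mirrors the AL form moveafter(Anchor; Control1, Control2). The input list is not modified.
    """
    if anchor in controls:
        raise ValueError("the anchor cannot also be moved")
    missing = [c for c in (anchor, *controls) if c not in layout]
    if missing:
        raise ValueError(f"not on the page: {missing}")
    remaining = [c for c in layout if c not in controls]  # take the moved controls out first
    pos = remaining.index(anchor) + 1
    return remaining[:pos] + list(controls) + remaining[pos:]
```

With a layout of "Costing Method", "Standard Cost", "Unit Cost", "Unit Price", calling `move_after(layout, "Unit Cost", "Costing Method")` yields "Standard Cost", "Unit Cost", "Costing Method", "Unit Price": the second argument moves, the anchor stays.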

Reference:

Microsoft Learn: Profile Object
