Topic 3: Misc. Questions Set

You have a Fabric workspace named Workspace1 that contains a data pipeline named

Pipeline1 and a lakehouse named Lakehouse1.

You have a deployment pipeline named deployPipeline1 that deploys Workspace1 to

Workspace2.

You restructure Workspace1 by adding a folder named Folder1 and moving Pipeline1 to

Folder1.

You use deployPipeline1 to deploy Workspace1 to Workspace2.

What occurs to Workspace2?

A. Folder1 is created, Pipeline1 moves to Folder1, and Lakehouse1 is deployed.

B. Only Pipeline1 and Lakehouse1 are deployed.

C. Folder1 is created, and Pipeline1 and Lakehouse1 move to Folder1.

D. Only Folder1 is created and Pipeline1 moves to Folder1.

Explanation:

This question tests the behavior of Fabric deployment pipelines when the source workspace structure changes. Deployment pipelines promote artifacts and their configurations from a source (Workspace1) to a target (Workspace2), performing a merge operation. The process is designed to synchronize the target with the source, handling new items and structural changes.

Correct Option:

A. Folder1 is created, Pipeline1 moves to Folder1, and Lakehouse1 is deployed. -

This is correct because deployment pipelines deploy all content from the source workspace that is selected for deployment. Since Pipeline1 was moved into the new Folder1 in the source, the deployment will create the folder structure and move the pipeline within the target workspace. Lakehouse1, which was unchanged and part of the deployment scope, is also redeployed, ensuring the target matches the source's current state.
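The outcome described above can be sketched with a toy model. This is an illustration only, not the Fabric API: the dictionaries, the `deploy` and `folders` helpers, and the assumption that Workspace2 already held both items at its root before this deployment are all hypothetical.

```python
# Toy model of a workspace: item name -> folder path ("" means the workspace root).
# Illustration only; none of these names belong to a Fabric SDK.

def deploy(source: dict[str, str], target: dict[str, str]) -> dict[str, str]:
    """Return the target layout after deploying every source item."""
    result = dict(target)          # items that exist only in the target are kept
    for item, folder in source.items():
        result[item] = folder      # create or update the item at the source's location
    return result

def folders(workspace: dict[str, str]) -> set[str]:
    """Folders that must exist for the given layout."""
    return {folder for folder in workspace.values() if folder}

workspace1 = {"Pipeline1": "Folder1", "Lakehouse1": ""}   # after the restructure
workspace2 = {"Pipeline1": "", "Lakehouse1": ""}          # assumed state before deploying

workspace2 = deploy(workspace1, workspace2)
print(workspace2)           # {'Pipeline1': 'Folder1', 'Lakehouse1': ''}
print(folders(workspace2))  # {'Folder1'}
```

In this model Pipeline1 ends up in Folder1 while Lakehouse1 stays at the root, which is the distinction between options A and C.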

Incorrect Options:

B. Only Pipeline1 and Lakehouse1 are deployed. -

This is incorrect because it ignores the new Folder1 created in the source workspace's structure. Deployment pipelines replicate the source's item organization, not just the individual artifacts. Failing to create Folder1 would not correctly reflect the restructured source.

C. Folder1 is created, and Pipeline1 and Lakehouse1 move to Folder1. -

This is incorrect because Lakehouse1 was not moved into Folder1 in the source workspace (Workspace1). Deployment pipelines synchronize the target to match the source's exact structure. Since the lakehouse remained at the workspace root, it will be deployed to the root in the target workspace, not moved into the new folder.

D. Only Folder1 is created and Pipeline1 moves to Folder1. -

This is incorrect because it omits the deployment of Lakehouse1. Deployment pipelines deploy all selected items from the source. Unless Lakehouse1 was explicitly removed from the deployment pipeline's configuration, it is part of the deployment scope and will be updated in the target workspace.

Reference:

Microsoft Learn documentation on "Deployment pipelines in Microsoft Fabric", specifically the behavior of the merge operation during deployment, which updates the target workspace to reflect the current state of items in the source workspace.

You have a Fabric workspace named Workspace1.

You plan to configure Git integration for Workspace1 by using an Azure DevOps Git

repository. An Azure DevOps admin creates the required artifacts to support the integration

of Workspace1. Which details do you require to perform the integration?

A. the project, Git repository, branch, and Git folder

B. the organization, project, Git repository, and branch

C. the Git repository URL and the Git folder

D. the personal access token (PAT) for Git authentication and the Git repository URL

Explanation:

This question tests your understanding of the specific connection details needed to link a Fabric workspace to a Git repository in Azure DevOps. The integration setup is performed from within the Fabric portal and requires you to point to the exact location of your source code. An admin creating the required artifacts means the repository and permissions are already in place.

Correct Option:

B. the organization, project, Git repository, and branch. -

This is correct. To establish the Git integration, you must navigate the Azure DevOps hierarchy precisely. You need the Organization (e.g., contoso), the specific Project within it, the Repository name within that project, and the target Branch (for example, main). The Fabric UI will prompt for these details to locate the source code.
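As a rough illustration of why all four details are needed, the sketch below groups them in a small record and derives the repository address from them. The class name and the sample values (FabricProject, Workspace1Repo) are hypothetical; the URL pattern is the usual dev.azure.com form for a Git repository.

```python
from dataclasses import dataclass
from urllib.parse import quote

@dataclass(frozen=True)
class AzureDevOpsConnection:
    """The four location details, from the top-level container down to the branch."""
    organization: str
    project: str
    repository: str
    branch: str

    def repo_url(self) -> str:
        # Organization and project are both part of the address, so the
        # repository cannot be located without them.
        return (
            f"https://dev.azure.com/{quote(self.organization)}/"
            f"{quote(self.project)}/_git/{quote(self.repository)}"
        )

# Hypothetical values for illustration.
conn = AzureDevOpsConnection("contoso", "FabricProject", "Workspace1Repo", "main")
print(conn.repo_url())
```

The branch is not part of the repository address, which is why it is supplied as a separate detail.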

Incorrect Options:

A. the project, Git repository, branch, and Git folder. -

This is incorrect because it omits the Organization, which is the top-level container in Azure DevOps. Without it, Fabric cannot locate the project. The "Git folder" is not a required detail for the initial connection; it's the destination path within the repository, which you specify after the core connection is made.

C. the Git repository URL and the Git folder. -

This is incorrect. While a repository URL contains the organization and project details, the standard Fabric Git integration setup wizard does not ask for a raw URL as a primary input. It uses a structured form asking for the organization, project, repo, and branch separately. The Git folder is, again, a secondary configuration.

D. the personal access token (PAT) for Git authentication and the Git repository URL. -

This is incorrect. The question asks for the details needed "to perform the integration," which means the location of the repository. A PAT is an authentication credential, not a location detail, and for Azure DevOps Fabric signs in with your Microsoft Entra account rather than prompting for a PAT. The URL is also not the standard input method for the wizard.

Reference:

Microsoft Learn module "Source control integration in Microsoft Fabric" details the setup process, which requires selecting the Azure DevOps organization, project, repository, and branch from dropdown lists within the Fabric workspace settings.

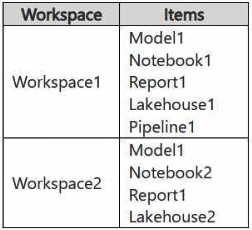

You have two Fabric workspaces named Workspace1 and Workspace2.

You have a Fabric deployment pipeline named deployPipeline1 that deploys items from

Workspace1 to Workspace2. DeployPipeline1 contains all the items in Workspace1.

You recently modified the items in Workspace1.

The workspaces currently contain the items shown in the following table.

Items in Workspace1 that have the same name as items in Workspace2 are currently

paired.

You need to ensure that the items in Workspace1 overwrite the corresponding items in

Workspace2. The solution must minimize effort.

What should you do?

A. Delete all the items in Workspace2, and then run deployPipeline1.

B. Rename each item in Workspace2 to have the same name as the items in Workspace1.

C. Back up the items in Workspace2, and then run deployPipeline1.

D. Run deployPipeline1 without modifying the items in Workspace2.

Explanation:

This question tests your understanding of the default behavior when running a deployment pipeline where source and target items are already paired. The scenario states items with the same name are currently paired. The goal is to overwrite target items with the modified source versions. Deployment pipelines are designed for this incremental update workflow.

Correct Option:

D. Run deployPipeline1 without modifying the items in Workspace2. -

This is correct. When items are already paired between workspaces, running the deployment pipeline performs an update operation. The modified items from Workspace1 (Model1, Report1, Lakehouse1, Pipeline1, Notebook1) will overwrite their paired counterparts in Workspace2, satisfying the requirement. This minimizes effort as it requires a single pipeline run with no manual pre-work.

Incorrect Options:

A. Delete all the items in Workspace2, and then run deployPipeline1. -

This is incorrect and excessive. Deleting items would break the existing pairings. The deployment would then create new items, which is functionally similar but requires more manual effort and could cause downtime or loss of historical lineage/data, which is not necessary.

B. Rename each item in Workspace2 to have the same name as the items in Workspace1. -

This is incorrect and redundant. The table shows that matching items (Model1, Report1) already have the same name and are paired. Renaming items like Lakehouse2 to Lakehouse1 would be a manual, error-prone process that is completely unnecessary. The unpaired item (Notebook2) is not in the source and will not be affected by the deployment.

C. Back up the items in Workspace2, and then run deployPipeline1. -

This is incorrect. While backing up is a good precaution, it is not the action needed to meet the technical requirement of overwriting items. The deployment itself will handle the overwrite. The question asks for the step "you should do" to achieve the overwrite, not for a risk mitigation step. Backing up adds effort without changing the deployment outcome.

Reference:

Microsoft Learn documentation on "Deployment pipelines in Microsoft Fabric" explains that deploying to a target workspace with existing paired items results in those items being updated with the source version, which is the standard and intended incremental deployment behavior.

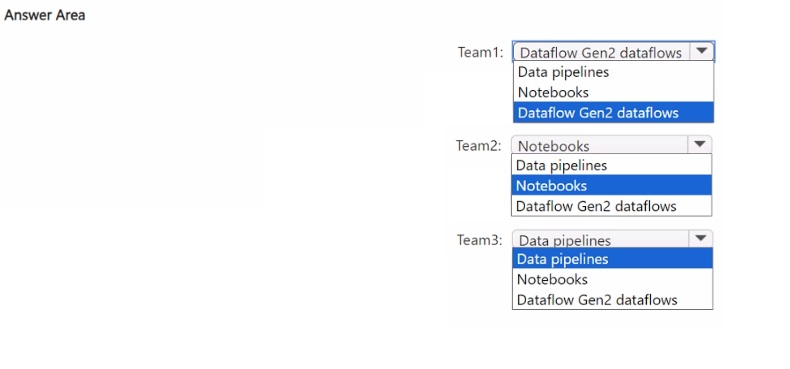

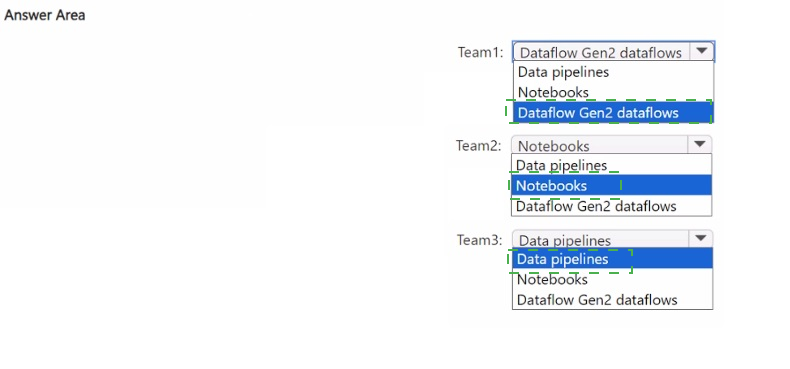

Your company has three newly created data engineering teams named Team1, Team2,

and Team3 that plan to use Fabric. The teams have the following personas:

• Team1 consists of members who currently use Microsoft Power BI. The team wants to

transform data by using a low-code approach.

• Team2 consists of members that have a background in Python programming. The team

wants to use PySpark code to transform data.

• Team3 consists of members who currently use Azure Data Factory. The team wants to

move data between source and sink environments by using the least amount of effort.

You need to recommend tools for the teams based on their current personas.

What should you recommend for each team? To answer, select the appropriate options in

the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

This question tests your ability to map specific user personas and their existing skills to the most appropriate low-code or code-first data engineering tool within Microsoft Fabric. The recommendation should align with the team's background to minimize the learning curve and leverage their existing expertise for productivity.

Correct Option:

Team1: Dataflow Gen2 -

Team 1 uses Power BI and wants a low-code approach. Dataflow Gen2 is the direct evolution of Power Query from Power BI, providing a familiar, intuitive graphical interface for data transformation. This allows them to apply their existing skills with minimal retraining.

Team2: Notebooks -

Team 2 has a Python programming background and wants to use PySpark. Notebooks in Fabric (backed by a Spark runtime) are the ideal, code-first environment for writing and executing PySpark code for complex data transformations, data science, and exploratory analysis.

Team3: Data pipelines -

Team 3 uses Azure Data Factory (ADF) and wants to move data with minimal effort. Data pipelines in Fabric are conceptually identical to ADF pipelines, sharing the same low-code authoring canvas and core concepts for orchestration and data movement. This provides a nearly seamless migration path.

Incorrect Options:

Recommending Data Pipelines for Team1 would be incorrect.

While data pipelines can include data flow activities, they are primarily for orchestration. Team1's core need is transformation using a familiar Power BI-like tool, which is Dataflow Gen2, not the broader orchestration tool.

Recommending Dataflow Gen2 for Team2 would be incorrect.

Team2's strength is writing PySpark code. Forcing them into a low-code graphical tool like Dataflow Gen2 would not leverage their coding skills and would be less efficient for complex programmatic transformations.

Recommending Notebooks for Team3 would be incorrect.

While notebooks can move data, they are not the optimal tool for simple, scheduled data copy operations. Team3's ADF background makes Data pipelines the natural fit for replicating their existing ETL/ELT patterns with the "least amount of effort."

Reference:

Microsoft Learn module "Get started with data engineering in Microsoft Fabric", which outlines the different tools and their primary user personas: Dataflow Gen2 for self-service data prep (Power Query), Notebooks for code-first development (Spark), and Data pipelines for orchestration and data movement (ADF).

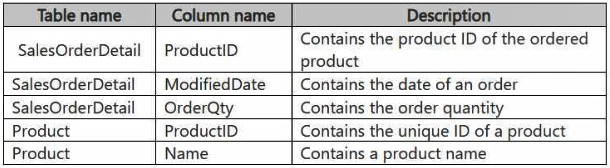

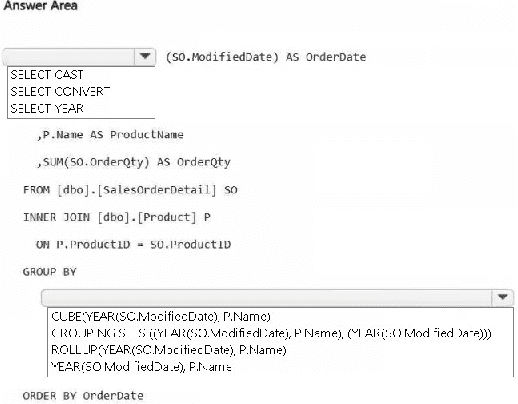

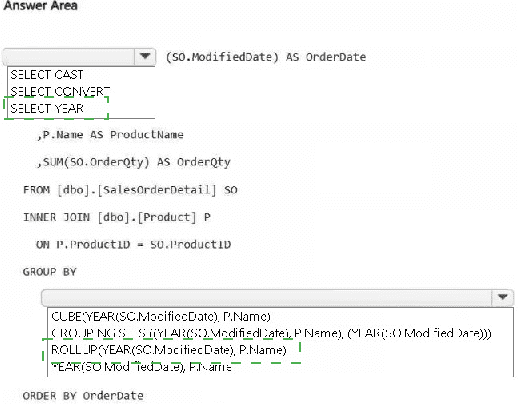

HOTSPOT

You have a Fabric workspace that contains a warehouse named DW1. DW1 contains the

following tables and columns.

You need to create an output that presents the summarized values of all the order

quantities by year and product. The results must include a summary of the order quantities

at the year level for all the products.

How should you complete the code? To answer, select the appropriate options in the

answer area.

NOTE: Each correct selection is worth one point.

Explanation:

This hotspot question tests knowledge of advanced grouping in T-SQL within a Microsoft Fabric warehouse. You must aggregate total order quantities (SUM of OrderQty) grouped by year (extracted from ModifiedDate) and product name, while also generating subtotals for each year across all products. This requires an extension to GROUP BY that produces hierarchical summaries (detail rows + year-level rollups) without needing UNION or multiple queries.

Correct Option:

First dropdown (for selecting the year function): SELECT YEAR (or SELECT YEAR(SO.ModifiedDate) AS OrderDate)

YEAR() extracts the year from the datetime column ModifiedDate, enabling grouping and display at the year level. This is the correct date-part function here (as opposed to CAST or CONVERT for string formatting, which isn't needed for grouping).

Second dropdown (for the grouping operator): ROLLUP(YEAR(SO.ModifiedDate), P.Name)

GROUP BY ROLLUP (year, product) generates:

Detail rows: SUM per (year, product) combination.

Subtotal rows: SUM per year (across all products), exactly matching the requirement for "summary of the order quantities at the year level for all the products".

A grand total row across all years and products (ROLLUP always adds it; the requirement only needs the year subtotals).

ROLLUP is hierarchical and efficient for this scenario (year → product).
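The row set that GROUP BY ROLLUP(year, product) returns can be mimicked in plain Python. This is a sketch on made-up data: the sample rows and the `rollup` helper are hypothetical, and None stands in for the NULL that T-SQL shows in a rolled-up column.

```python
from collections import defaultdict

# Hypothetical (year, product, quantity) rows standing in for the joined tables.
rows = [
    (2023, "Bike", 5),
    (2023, "Helmet", 3),
    (2024, "Bike", 7),
    (2024, "Helmet", 2),
]

def rollup(rows):
    """Mimic GROUP BY ROLLUP(year, product); None marks a rolled-up column."""
    totals = defaultdict(int)
    for year, product, qty in rows:
        totals[(year, product)] += qty   # detail row per year and product
        totals[(year, None)] += qty      # year subtotal across all products
        totals[(None, None)] += qty      # grand total
    return dict(totals)

result = rollup(rows)
for key, total in result.items():
    print(key, total)
```

Note that no (None, product) keys appear: a per-product subtotal across all years is what CUBE would add and ROLLUP does not.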

Incorrect Options:

Options involving CUBE: CUBE creates all possible combinations (year only, product only, both, and grand total), producing extra unwanted subtotals by product across all years, which isn't asked for.

Options with plain GROUP BY YEAR(SO.ModifiedDate), P.Name: This gives only detail rows (per year-per product) but misses the year-level summary across all products.

Options with GROUPING SETS (unless exactly mimicking ROLLUP): While GROUPING SETS((year, product), (year)) would work, the question's dropdowns typically show ROLLUP directly as the concise and correct choice.

Wrong date functions like CAST or CONVERT in the SELECT for grouping purposes: These are for formatting, not extraction for aggregation.

Reference:

Microsoft Learn: SELECT - GROUP BY clause (Transact-SQL) – ROLLUP section

https://learn.microsoft.com/en-us/sql/t-sql/queries/select-group-by-transact-sql?view=sql-server-ver17

DP-700 Study Guide: Implement and manage an analytics solution using Microsoft Fabric (T-SQL in Fabric warehouses supports standard T-SQL extensions like ROLLUP).

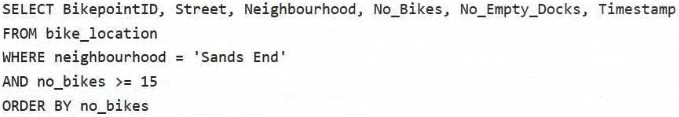

Note: This question is part of a series of questions that present the same scenario. Each

question in the series contains a unique solution that might meet the stated goals. Some

question sets might have more than one correct solution, while others might not have a

correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result,

these questions will not appear in the review screen.

You have a Fabric eventstream that loads data into a table named Bike_Location in a KQL

database. The table contains the following columns:

BikepointID

Street

Neighbourhood

No_Bikes

No_Empty_Docks

Timestamp

You need to apply transformation and filter logic to prepare the data for consumption. The

solution must return data for a neighbourhood named Sands End when No_Bikes is at

least 15. The results must be ordered by No_Bikes in ascending order.

Solution: You use the following code segment:

Does this meet the goal?

A. Yes

B. No

Explanation:

The task requires filtering Bike_Location data in a Fabric KQL (Kusto Query Language) database to show only rows where Neighbourhood = 'Sands End' and No_Bikes ≥ 15, then ordering the results by No_Bikes in ascending order. The provided code attempts this but contains multiple syntax errors that prevent it from executing correctly or producing the expected output in KQL. Therefore, the solution does not meet the goal.

Correct Option:

B. No

The code fails to meet the goal because:

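The WHERE clause has invalid syntax: WHERE neighbourhood = 'Sands End' WHERE no_bikes >= 15. In KQL each filter is a separate piped operator (| where ...), so two WHERE keywords cannot follow one another without a pipe between them, and equality is written with ==, not =.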

The condition no_bikes >= 15 = 'Sands End' is malformed (mixing string comparison with numeric).

Column names are inconsistently capitalized (e.g., No_Bikes vs no_bikes, Neighbourhood vs neighbourhood), and KQL is case-sensitive for column names.

ORDER BY is written twice incorrectly (ORDER BY no_bikes appears at the end but is duplicated/misplaced).

These syntax issues make the query invalid in KQL.

Incorrect Option:

A. Yes

This is incorrect because the provided KQL code segment contains critical syntax errors and will not run successfully in a Fabric Real-Time Intelligence (KQL database / eventstream transformation). It does not correctly filter for Neighbourhood = 'Sands End' with No_Bikes ≥ 15, nor does it guarantee proper ascending order by No_Bikes. A valid KQL query would look like:

Bike_Location | where Neighbourhood == "Sands End" and No_Bikes >= 15 | sort by No_Bikes asc
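The intended logic (keep Sands End rows with No_Bikes of at least 15, then sort ascending) can also be mirrored in plain Python to check what a correct result looks like. The sample rows and the `prepare` helper are hypothetical, and only the columns relevant to the filter are included.

```python
# Hypothetical sample rows; only the columns relevant to the filter are included.
bike_location = [
    {"BikepointID": "BP1", "Neighbourhood": "Sands End", "No_Bikes": 20},
    {"BikepointID": "BP2", "Neighbourhood": "Sands End", "No_Bikes": 9},
    {"BikepointID": "BP3", "Neighbourhood": "Chelsea", "No_Bikes": 30},
    {"BikepointID": "BP4", "Neighbourhood": "Sands End", "No_Bikes": 15},
]

def prepare(rows):
    # Filter step: Neighbourhood equals "Sands End" and No_Bikes is at least 15.
    kept = [
        r for r in rows
        if r["Neighbourhood"] == "Sands End" and r["No_Bikes"] >= 15
    ]
    # Ordering step: ascending by No_Bikes.
    return sorted(kept, key=lambda r: r["No_Bikes"])

result = prepare(bike_location)
print([r["BikepointID"] for r in result])  # ['BP4', 'BP1']
```

The row with exactly 15 bikes is kept because the requirement is "at least 15", and it sorts ahead of the row with 20.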

Reference:

Microsoft Learn: Kusto Query Language (KQL) syntax – WHERE operator, order by operator

https://learn.microsoft.com/en-us/azure/data-explorer/kusto/query/whereoperator

https://learn.microsoft.com/en-us/azure/data-explorer/kusto/query/orderbyoperator

Microsoft Fabric – Real-Time Intelligence: Query data in a KQL database

https://learn.microsoft.com/en-us/fabric/real-time-intelligence/kql-database

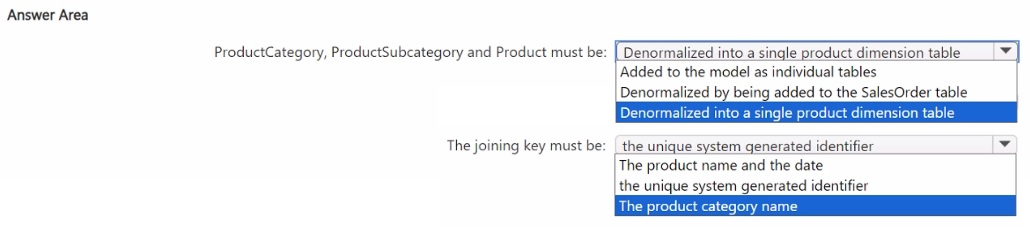

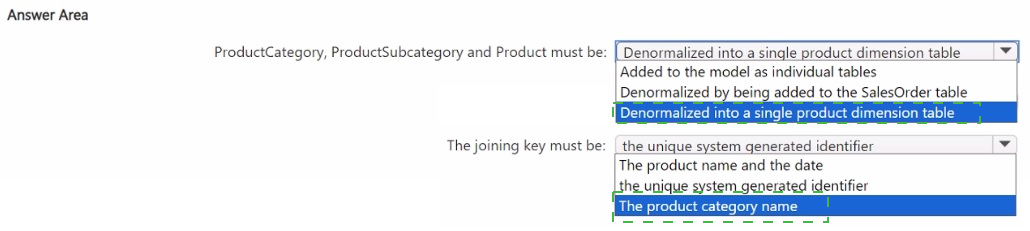

You have a Fabric warehouse named DW1 that contains four staging tables named

ProductCategory, ProductSubcategory, Product, and SalesOrder. ProductCategory,

ProductSubcategory, and Product are used often in analytical queries.

You need to implement a star schema for DW1. The solution must minimize development

effort.

Which design approach should you use? To answer, select the appropriate options in the

answer area.

NOTE: Each correct selection is worth one point.

Explanation:

This question tests your understanding of star schema design principles in a data warehouse, focusing on dimension table structure and the goal of minimizing development effort. The tables ProductCategory, ProductSubcategory, and Product form a natural hierarchy and are frequently queried together.

Correct Option:

ProductCategory, ProductSubcategory and Product must be: Denormalized into a single product dimension table

The joining key must be: the unique system generated identifier

Why this is correct:

Denormalized into a single product dimension table:

This is the standard star schema approach for related hierarchical attributes (like product categories). Flattening them into one DimProduct table simplifies the model for end-users, improves query performance by reducing joins, and meets the requirement to minimize development effort for future analytical queries.

The joining key must be:

the unique system generated identifier: In a star schema, the fact table (SalesOrder) joins to the dimension table using a surrogate key (a single, unique integer ID, typically an identity column). This is the most efficient join for both storage and query performance. The "unique system generated identifier" refers to this surrogate key in the dimension table.
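The two choices above can be sketched together in Python: flatten the three product tables into one dimension, assign a system-generated key, and let the fact rows carry only that key. All sample rows, column names such as ProductKey, and the `build_dim_product` helper are hypothetical.

```python
# Hypothetical staging rows keyed by their source-system (business) IDs.
product_category = {1: "Bikes", 2: "Accessories"}
product_subcategory = {
    10: {"name": "Mountain Bikes", "category_id": 1},
    20: {"name": "Helmets", "category_id": 2},
}
product = {
    100: {"name": "Mountain-100", "subcategory_id": 10},
    200: {"name": "Sport Helmet", "subcategory_id": 20},
}
sales_order = [
    {"product_id": 200, "order_qty": 3},
    {"product_id": 100, "order_qty": 1},
]

def build_dim_product():
    """Flatten the three staging tables into one dimension with a surrogate key."""
    dim = {}
    for key, (product_id, row) in enumerate(sorted(product.items()), start=1):
        sub = product_subcategory[row["subcategory_id"]]
        dim[product_id] = {
            "ProductKey": key,  # system-generated surrogate key
            "ProductName": row["name"],
            "SubcategoryName": sub["name"],
            "CategoryName": product_category[sub["category_id"]],
        }
    return dim

dim_product = build_dim_product()

# The fact table stores only the surrogate key and the measures.
fact_sales = [
    {"ProductKey": dim_product[o["product_id"]]["ProductKey"], "OrderQty": o["order_qty"]}
    for o in sales_order
]
print(fact_sales)
```

An analytical query then needs a single join from the fact rows to the dimension to reach product, subcategory, and category names.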

Incorrect Options:

For Table Structure:

Added to the model as individual tables:

This creates a snowflake schema, which normalizes the data. While valid, it increases development effort for report writers who must now write queries with multiple joins, contradicting the requirement to minimize effort.

Denormalized by being added to the SalesOrder table:

This would denormalize product attributes directly into the fact table, severely bloating it with redundant text data. This violates dimensional modeling best practices, increases storage costs, and complicates updates.

For Joining Key:

The product name and the date:

This is incorrect. Using a composite key of a text field and a date is inefficient and unreliable (names can change). The standard, high-performance join in a star schema is a single integer surrogate key.

The product category name:

This is incorrect. Using a text-based business key (like a name) as a join key is less performant than an integer and is not the standard practice for the primary join between fact and dimension tables in a star schema.

Reference:

Dimensional modeling best practices, as covered in the Microsoft Learn learning path for data warehousing, advocate for denormalized dimension tables to create a star schema for usability and performance. The join between fact and dimension tables should use a surrogate key (a system-generated integer).

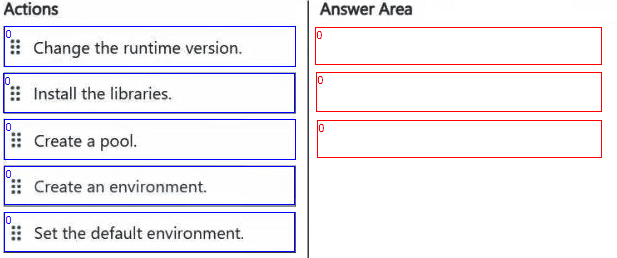

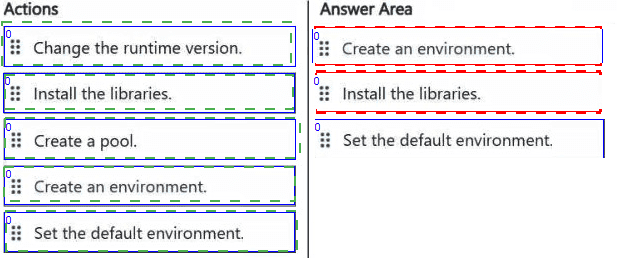

Your company has a team of developers. The team creates Python libraries of reusable

code that is used to transform data.

You create a Fabric workspace named Workspace1 that will be used to develop extract,

transform, and load (ETL) solutions by using notebooks.

You need to ensure that the libraries are available by default to new notebooks in

Workspace1.

Which three actions should you perform in sequence? To answer, move the appropriate

actions from the list of actions to the answer area and arrange them in the correct order.

Explanation:

This question tests the procedure for installing custom Python libraries and making them available by default to all new notebooks within a specific Fabric workspace. The solution must ensure the libraries are installed in a managed, reusable compute environment attached to the workspace.

Correct Option (Sequence):

Create an environment.

Install the libraries.

Set the default environment.

Why this sequence is correct:

Create an environment:

The first step is to create a custom Fabric environment. An environment is a container for specific Spark, Python, or R runtime versions and a set of installed libraries. This provides the isolated, configurable space where your custom libraries will reside.

Install the libraries:

Once the environment is created, you can install the team's custom Python libraries into it. This is done via the environment's management interface, where you can specify PyPI packages or upload wheel files.

Set the default environment:

The final, critical step is to designate this newly configured environment as the default environment for the workspace. This setting ensures that any new notebook created in Workspace1 will automatically attach to and use this environment, granting it immediate access to the installed libraries.

Incorrect / Unnecessary Actions:

Create a pool:

Creating a Spark pool (or Fabric capacity pool) is related to provisioning compute capacity, not to managing software dependencies. The runtime and libraries are defined at the environment level, not the pool level.

Change the runtime version:

This action is part of creating or editing an environment (Step 1). You select the Spark and Python runtime version when you create the environment. It is not a separate, sequential step in this high-level workflow.

Reference:

Microsoft Learn documentation on "Manage environments in Microsoft Fabric" outlines this workflow: create a custom environment, customize it by adding libraries, and then publish and set it as the default for a workspace to ensure all new notebooks inherit its configuration.

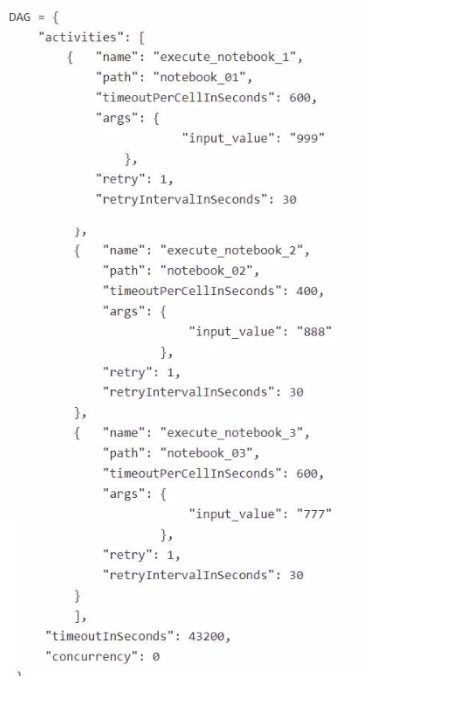

You are building a Fabric notebook named MasterNotebook1 in a workspace.

MasterNotebook1 contains the following code.

You need to ensure that the notebooks are executed in the following sequence:

1. Notebook_03

2. Notebook_01

3. Notebook_02

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Split the Directed Acyclic Graph (DAG) definition into three separate definitions.

B. Change the concurrency to 3.

C. Move the declaration of Notebook_03 to the top of the Directed Acyclic Graph (DAG) definition.

D. Move the declaration of Notebook_02 to the bottom of the Directed Acyclic Graph (DAG) definition.

E. Add dependencies to the execution of Notebook_02.

F. Add dependencies to the execution of Notebook_03.

Explanation:

This question tests your understanding of orchestrating notebook execution within a parent notebook using a DAG (Directed Acyclic Graph). The activities list in the provided DAG definition names the notebooks to run. Activities that declare no dependencies are eligible to run in parallel, up to the limit set by "concurrency". To enforce a specific sequential order, you must define explicit dependencies between the activities.

Correct Options:

C. Move the declaration of Notebook_03 to the top of the Directed Acyclic Graph (DAG) definition.

E. Add dependencies to the execution of Notebook_02.

Why these actions are correct (together):

C. Move the declaration of Notebook_03 to the top:

In a Fabric notebook DAG, the execution order is not guaranteed by the list order. However, a logical first step for clarity and initial setup is to place the first notebook (notebook_03) at the top of the activities list. More importantly, this positioning makes it easier to reference when setting up dependencies for subsequent notebooks.

E. Add dependencies to the execution of Notebook_02:

This is the critical action. To enforce the sequence (notebook_01 before notebook_02), you must add a "dependencies" list to the notebook_02 activity definition, specifying that it waits for notebook_01 to complete. Similarly, to make notebook_01 run after notebook_03, notebook_01 needs a "dependencies" entry referencing notebook_03. Each entry is the name of the activity to wait for, for example "dependencies": ["execute_notebook_3"] inside notebook_01's activity, and "dependencies": ["execute_notebook_1"] inside notebook_02's activity.
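A minimal sketch of such a DAG follows, with a local check of the order the dependencies imply. The activity names and paths are hypothetical because the original code is only available as an image; the "dependencies" key follows the DAG format documented for runMultiple, and the ordering check uses the standard-library graphlib module rather than anything Fabric-specific.

```python
from graphlib import TopologicalSorter

# Hypothetical DAG definition; names and paths are placeholders.
dag = {
    "activities": [
        {"name": "Notebook_03", "path": "Notebook_03", "dependencies": []},
        {"name": "Notebook_01", "path": "Notebook_01", "dependencies": ["Notebook_03"]},
        {"name": "Notebook_02", "path": "Notebook_02", "dependencies": ["Notebook_01"]},
    ],
    "concurrency": 3,  # with a full dependency chain, only one activity is ever ready
}

# Map each activity to the set of activities it must wait for.
graph = {a["name"]: set(a["dependencies"]) for a in dag["activities"]}
order = list(TopologicalSorter(graph).static_order())
print(order)  # ['Notebook_03', 'Notebook_01', 'Notebook_02']

# Inside a Fabric notebook the definition would be handed to the runtime, e.g.:
# notebookutils.notebook.runMultiple(dag)
```

Because every activity except the first waits on exactly one predecessor, the order is fully determined regardless of the concurrency value or the position of entries in the list.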

Incorrect Options:

A. Split the DAG definition into three separate definitions. -

Incorrect. A single DAG is designed to orchestrate multiple activities with dependencies. Splitting it defeats the purpose of having a controlled workflow within a single parent notebook execution.

B. Change the concurrency to 3. -

Incorrect. Setting "concurrency": 3 (or any value >1) explicitly allows parallel execution, which is the opposite of the required sequential order.

D. Move the declaration of Notebook_02 to the bottom of the Directed Acyclic Graph (DAG) definition. -

Incorrect. While this might seem logical for the final step, list order alone does not control execution sequence without explicit dependencies. The DAG scheduler can still execute activities concurrently.

F. Add dependencies to the execution of Notebook_03. -

Incorrect. Notebook_03 is the first notebook in the required sequence. It should have no dependencies on the others within this DAG. Adding dependencies to it would prevent it from running first.

Reference:

Microsoft Learn documentation on "Orchestrate notebooks with the mssparkutils notebook utilities" specifies that to define a sequence, you must use the dependencies property within the activity definition of a notebook in the DAG to declare which previous activities it must wait for. The list order itself does not guarantee execution order.

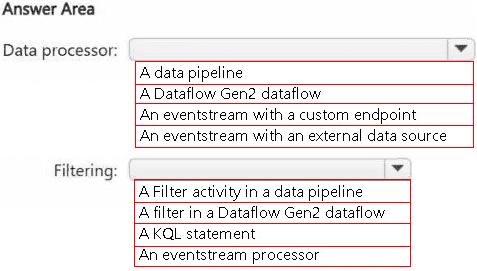

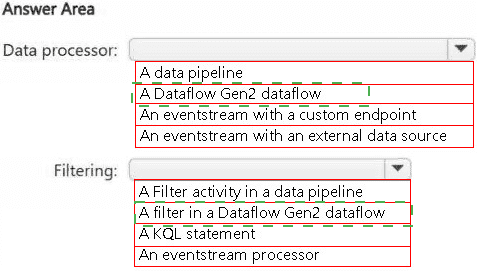

HOTSPOT

You have an Azure Event Hubs data source that contains weather data.

You ingest the data from the data source by using an eventstream named Eventstream1.

Eventstream1 uses a lakehouse as the destination.

You need to batch ingest only rows from the data source where the City attribute has a

value of Kansas. The filter must be added before the destination. The solution must

minimize development effort.

What should you use for the data processor and filtering? To answer, select the

appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

This question tests the tool selection for a specific streaming ingestion scenario in Fabric. The data is ingested from Azure Event Hubs via an Eventstream, which is the native Fabric tool for real-time data ingestion and processing. The requirement is to filter data before it lands in the lakehouse, minimizing development effort.

Correct Option:

Data processor: An eventstream with an external data source

Filtering: An eventstream processor

Why this is correct:

Data processor:

An eventstream with an external data source - This is precisely the definition of Eventstream1 in the scenario. It is the core data processor for real-time ingestion from external sources like Event Hubs. Using a data pipeline or Dataflow Gen2 would be incorrect, as they are designed for batch processing, not direct streaming ingestion from Event Hubs.

Filtering:

An eventstream processor - Within an eventstream, you add processors between the source and destination to transform or filter data in real-time. Adding a filter processor allows you to apply a condition (e.g., where City == 'Kansas') directly on the stream before it writes to the lakehouse. This meets the "filter before the destination" requirement with minimal effort, using the eventstream's built-in, low-code UI.
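Conceptually, the filter processor sits between the source and the destination and forwards only matching events. The sketch below models that placement in plain Python; it is not eventstream code, and the event fields other than City, the sample values, and the `filter_processor` name are hypothetical.

```python
def filter_processor(events, city="Kansas"):
    """Forward only events whose City attribute matches; drop the rest."""
    for event in events:
        if event.get("City") == city:
            yield event

# Hypothetical events arriving from the source.
incoming = [
    {"City": "Kansas", "TempC": 21},
    {"City": "Denver", "TempC": 17},
    {"City": "Kansas", "TempC": 23},
    {"TempC": 19},  # no City attribute, so it is filtered out
]

# Only the filtered events ever reach the destination.
lakehouse_rows = list(filter_processor(incoming))
print(lakehouse_rows)
```

Because the filter runs before the write, unmatched rows never land in the lakehouse, which is what "the filter must be added before the destination" asks for.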

Incorrect Options:

For Data Processor:

A data pipeline / A Dataflow Gen2 dataflow -

These are batch-oriented tools. While a data pipeline can have an Event Hubs source, it would use a batch read operation, not real-time streaming ingestion as implied by using an "eventstream." They would introduce unnecessary complexity.

An eventstream with a custom endpoint -

A custom endpoint is for sending data out of an eventstream, not for ingesting data from an external source like Event Hubs.

For Filtering:

A Filter activity in a data pipeline / A filter in a Dataflow Gen2 dataflow -

These apply filtering in batch jobs, not on the real-time stream before it lands. Using them would require the data to first land in the lakehouse (unfiltered), then a separate batch process to filter it, violating the "filter before the destination" requirement.

A KQL statement -

A KQL query is used to filter data after it has been ingested into a KQL database. The destination here is a lakehouse, not a KQL database. This would be the wrong tool for the configured destination.

Reference:

Microsoft Learn documentation on "Eventstreams in Microsoft Fabric" explains that an eventstream ingests data from external streaming sources and that you can add processors (like filter, transform, or aggregate) to modify the data flow before it reaches a destination like a lakehouse.
