Free Microsoft DP-600 Practice Test Questions MCQs

Stop wondering if you're ready. Our Microsoft DP-600 practice test is designed to identify your exact knowledge gaps. Validate your skills with Implementing Analytics Solutions Using Microsoft Fabric questions that mirror the real exam's format and difficulty. Build a personalized study plan based on your performance on these free DP-600 MCQs, focusing your effort where it matters most.

Targeted practice like this helps candidates feel significantly more prepared for Implementing Analytics Solutions Using Microsoft Fabric exam day.


Updated On: 3-Mar-2026 | 50 Questions

Implementing Analytics Solutions Using Microsoft Fabric


Litware, Inc. Case Study

Overview

Litware, Inc. is a manufacturing company that has offices throughout North America. The analytics team at Litware contains data engineers, analytics engineers, data analysts, and data scientists.

Existing Environment

Litware has been using a Microsoft Power BI tenant for three years. Litware has NOT enabled any Fabric capacities or features.

Fabric Environment

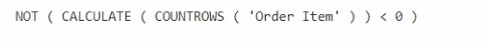

Litware has data that must be analyzed as shown in the following table.

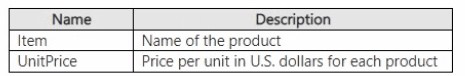

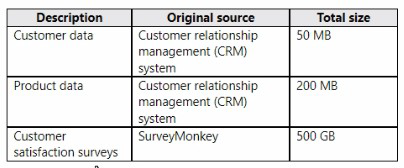

The Product data contains a single table and the following columns.

The customer satisfaction data contains the following tables:

• Survey

• Question

• Response

For each survey submitted, the following occurs:

• One row is added to the Survey table.

• One row is added to the Response table for each question in the survey.

The Question table contains the text of each survey question. The third question in each survey response is an overall satisfaction score. Customers can submit a survey after each purchase.

User Problems

The analytics team has large volumes of data, some of which is semi-structured. The team wants to use Fabric to create a new data store.

Product data is often classified into three pricing groups: high, medium, and low. This logic is implemented in several databases and semantic models, but the logic does NOT always match across implementations.

Planned Changes

Litware plans to enable Fabric features in the existing tenant. The analytics team will create a new data store as a proof of concept (PoC). The remaining Litware users will only get access to the Fabric features once the PoC is complete. The PoC will be completed by using a Fabric trial capacity.

The following three workspaces will be created:

• AnalyticsPOC: Will contain the data store, semantic models, reports, pipelines, dataflows, and notebooks used to populate the data store

• DataEngPOC: Will contain all the pipelines, dataflows, and notebooks used to populate OneLake

• DataSciPOC: Will contain all the notebooks and reports created by the data scientists

The following will be created in the AnalyticsPOC workspace:

• A data store (type to be decided)

• A custom semantic model

• A default semantic model

• Interactive reports

The data engineers will create data pipelines to load data to OneLake either hourly or daily, depending on the data source. The analytics engineers will create processes to ingest, transform, and load the data to the data store in the AnalyticsPOC workspace daily.

Whenever possible, the data engineers will use low-code tools for data ingestion. The choice of which data cleansing and transformation tools to use will be at the data engineers' discretion.

All the semantic models and reports in the AnalyticsPOC workspace will use the data store as the sole data source.

Technical Requirements

The data store must support the following:

• Read access by using T-SQL or Python

• Semi-structured and unstructured data

• Row-level security (RLS) for users executing T-SQL queries

Files loaded by the data engineers to OneLake will be stored in the Parquet format and will meet Delta Lake specifications.

Data will be loaded without transformation in one area of the AnalyticsPOC data store. The data will then be cleansed, merged, and transformed into a dimensional model.

The data load process must ensure that the raw and cleansed data is updated completely before populating the dimensional model.
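The ordering rule above is a dependency chain: the dimensional-model step may start only after both the raw and the cleansed loads have succeeded. In a Fabric data pipeline this would typically be expressed with success-conditioned dependencies between activities; the minimal Python sketch below only models that control flow, and the `run_in_order` helper and stage names are hypothetical.

```python
from typing import Callable

# A stage is a (name, callable) pair; the callable returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_in_order(stages: list[Stage]) -> list[str]:
    """Run stages one after another and stop at the first failure,
    so the dimensional model is never built from partially loaded data."""
    completed: list[str] = []
    for name, stage in stages:
        if not stage():
            raise RuntimeError(f"stage {name!r} failed; downstream stages were skipped")
        completed.append(name)
    return completed


# Stand-in stages for illustration only (hypothetical names).
executed: list[str] = []


def make_stage(name: str, succeeds: bool = True) -> Stage:
    def stage() -> bool:
        executed.append(name)  # record that the stage actually ran
        return succeeds
    return name, stage


result = run_in_order([
    make_stage("load_raw"),
    make_stage("cleanse_and_merge"),
    make_stage("build_dimensional_model"),
])
print(result)  # ['load_raw', 'cleanse_and_merge', 'build_dimensional_model']
```

If `cleanse_and_merge` returns False, `run_in_order` raises before `build_dimensional_model` is called, which is the guarantee the requirement asks for.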

The dimensional model must contain a date dimension. There is no existing data source for the date dimension. The Litware fiscal year matches the calendar year. The date dimension must always contain dates from 2010 through the end of the current year.
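Because no source exists for the date dimension, it has to be generated. A minimal Python sketch of the generation logic follows, assuming one row per day; the column names are hypothetical. Deriving the end date from the current year means each rerun extends the range automatically. In Fabric, logic like this would plausibly run in a notebook that writes the rows to a table, but that wiring is an assumption and is not shown.

```python
from datetime import date, timedelta
from typing import Optional


def build_date_dimension(today: Optional[date] = None) -> list[dict]:
    """One row per day from 2010-01-01 through 31 December of the current year."""
    today = today or date.today()
    start, end = date(2010, 1, 1), date(today.year, 12, 31)
    rows = []
    d = start
    while d <= end:
        rows.append({
            "DateKey": d.year * 10000 + d.month * 100 + d.day,  # e.g. 20100101
            "Date": d,
            "Year": d.year,
            "FiscalYear": d.year,  # Litware's fiscal year matches the calendar year
            "Quarter": (d.month - 1) // 3 + 1,
            "Month": d.month,
            "DayOfWeek": d.isoweekday(),  # 1 = Monday, 7 = Sunday
        })
        d += timedelta(days=1)
    return rows


rows = build_date_dimension(date(2024, 6, 15))
print(rows[0]["Date"], rows[-1]["Date"], len(rows))  # 2010-01-01 2024-12-31 5479
```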

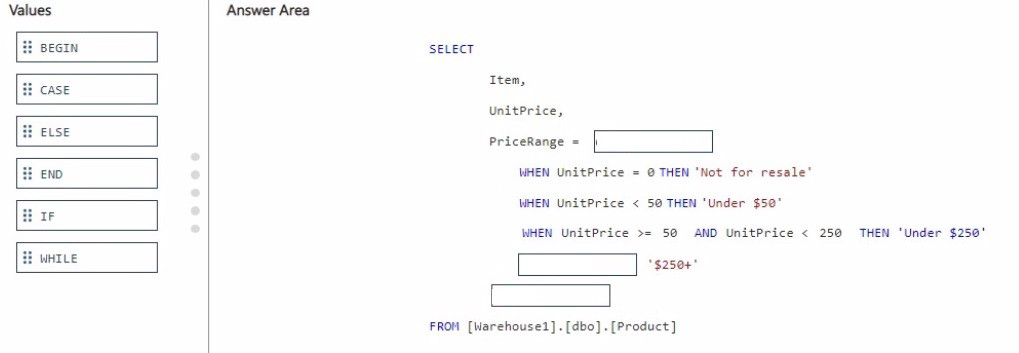

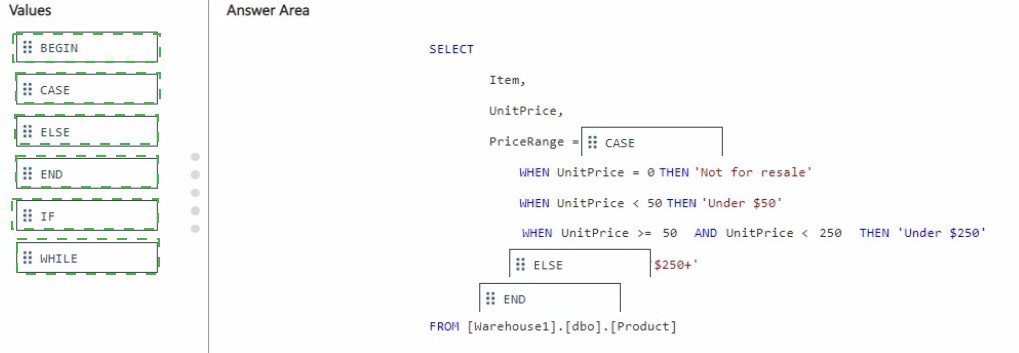

The product pricing group logic must be maintained by the analytics engineers in a single location. The pricing group data must be made available in the data store for T-SQL queries and in the default semantic model. The following logic must be used:

• List prices that are less than or equal to 50 are in the low pricing group.

• List prices that are greater than 50 and less than or equal to 1,000 are in the medium pricing group.

• List prices that are greater than 1,000 are in the high pricing group.
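The boundaries are where separate implementations tend to disagree: a price of exactly 50 is low, and exactly 1,000 is medium. The Python function below only pins down those edge cases; it is an illustration, not the single maintained location the requirement calls for. That location would need to live in the data store itself (one option is a view) so that T-SQL queries and the default semantic model read the same logic.

```python
def pricing_group(list_price: float) -> str:
    """Classify a list price using the case study's thresholds."""
    if list_price <= 50:
        return "low"        # <= 50
    if list_price <= 1000:
        return "medium"     # > 50 and <= 1,000
    return "high"           # > 1,000


for price in (50, 50.01, 1000, 1000.01):
    print(price, pricing_group(price))
```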

Security Requirements

Only Fabric administrators and the analytics team must be able to see the Fabric items created as part of the PoC. Litware identifies the following security requirements for the Fabric items in the AnalyticsPOC workspace:

• Fabric administrators will be the workspace administrators.

• The data engineers must be able to read from and write to the data store. No access must be granted to datasets or reports.

• The analytics engineers must be able to read from, write to, and create schemas in the data store. They also must be able to create and share semantic models with the data analysts and view and modify all reports in the workspace.

• The data scientists must be able to read from the data store, but not write to it. They will access the data by using a Spark notebook.

• The data analysts must have read access to only the dimensional model objects in the data store. They also must have access to create Power BI reports by using the semantic models created by the analytics engineers.

• The date dimension must be available to all users of the data store.

• The principle of least privilege must be followed.

Both the default and custom semantic models must include only tables or views from the dimensional model in the data store.

Litware already has the following Microsoft Entra security groups:

• FabricAdmins: Fabric administrators

• AnalyticsTeam: All the members of the analytics team

• DataAnalysts: The data analysts on the analytics team

• DataScientists: The data scientists on the analytics team

• DataEngineers: The data engineers on the analytics team

• AnalyticsEngineers: The analytics engineers on the analytics team

Report Requirements

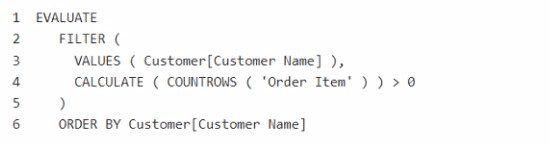

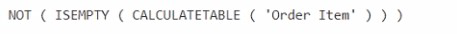

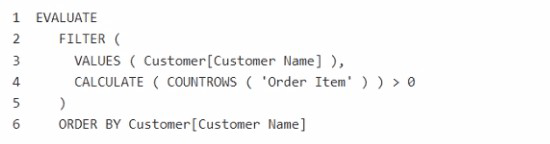

The data analysts must create a customer satisfaction report that meets the following requirements:

• Enables a user to select a product to filter customer survey responses to only those from customers who have purchased that product

• Displays the average overall satisfaction score of all the surveys submitted during the last 12 months up to a selected date

• Shows data as soon as the data is updated in the data store

• Ensures that the report and the semantic model only contain data from the current and previous year

• Ensures that the report respects any table-level security specified in the source data store

• Minimizes the execution time of report queries
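Two of these requirements involve date arithmetic that is easy to get subtly wrong. In the actual report they would most likely be a DAX measure and a semantic-model filter; the Python sketch below only makes the intended logic concrete. It assumes the 12-month window runs from the day after the same date one year earlier through the selected date, and that Survey and Response carry the hypothetical columns shown. Product filtering is omitted because the text does not describe how surveys link to purchases.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from statistics import mean
from typing import Optional

OVERALL_SATISFACTION_QUESTION = 3  # the third question is the overall score


@dataclass
class Survey:  # hypothetical columns
    survey_id: int
    submitted_on: date


@dataclass
class Response:  # hypothetical columns
    survey_id: int
    question_number: int
    score: int


def twelve_month_start(selected: date) -> date:
    """First day of the 12-month window that ends on `selected` (inclusive).
    Assumption: the window is (same date one year earlier, selected]."""
    try:
        year_ago = selected.replace(year=selected.year - 1)
    except ValueError:  # 29 February has no counterpart in the previous year
        year_ago = date(selected.year - 1, 2, 28)
    return year_ago + timedelta(days=1)


def avg_overall_satisfaction(surveys: list[Survey], responses: list[Response],
                             selected: date) -> Optional[float]:
    """Average overall-satisfaction score of surveys submitted in the window."""
    start = twelve_month_start(selected)
    in_window = {s.survey_id for s in surveys if start <= s.submitted_on <= selected}
    scores = [r.score for r in responses
              if r.survey_id in in_window
              and r.question_number == OVERALL_SATISFACTION_QUESTION]
    return mean(scores) if scores else None


def in_current_or_previous_year(d: date, today: date) -> bool:
    """Retention rule: keep only rows dated in the current or previous year."""
    return today.year - 1 <= d.year <= today.year


surveys = [Survey(1, date(2024, 6, 1)), Survey(2, date(2023, 6, 1)), Survey(3, date(2023, 6, 2))]
responses = [Response(1, 1, 2), Response(1, 3, 4), Response(2, 3, 1), Response(3, 3, 5)]
print(avg_overall_satisfaction(surveys, responses, date(2024, 6, 1)))  # 4.5
```

Survey 2 falls exactly one year before the selected date and is excluded under this window definition; survey 1's answer to question 1 is ignored because only the third question counts.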


Implementing Analytics Solutions Using Microsoft Fabric Practice Exam Questions

Winning Strategy for DP-600: Analytics Solutions in Microsoft Fabric

Core Mindset: Architect End-to-End Analytics

The DP-600 validates your ability to design, build, and manage enterprise-scale analytics solutions using Microsoft Fabric. This is not a single-tool exam; it’s about integrating components into a cohesive analytics pipeline.

Phase 1: Master the Pillars of Fabric Analytics

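Focus on four core pillars. The percentages below are rough study weightings, not Microsoft's official skills-measured breakdown, which is published in the DP-600 study guide on Microsoft Learn and is revised periodically: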

Data Engineering & Preparation (30%): Your ability to build a Lakehouse with Delta format and use Notebooks (PySpark/SQL) for transformation is fundamental. This feeds the rest of the analytics pipeline.

Data Modeling & Warehousing (30%): You must expertly use the Fabric Data Warehouse (T-SQL) to create efficient, denormalized models (star schema) and write analytical queries. This is the heart of the exam.

Data Visualization with Power BI (25%): This tests how you prepare data for Power BI. Know how to create semantic models from Lakehouses/Warehouses, set up Direct Lake mode, and manage relationships and measures.

Administration & Monitoring (15%): Know how to manage workspaces, monitor pipeline performance, and govern data using OneLake and shortcuts.

Phase 2: The 5-Week Execution Blueprint

Weeks 1-2: Hands-On Fabric Foundation

Immediately secure a Fabric Trial Capacity.

Don’t watch videos first. Go build: Create a Lakehouse, ingest data, write a Spark transformation, and connect a Warehouse. Use the official Microsoft Learn modules as your guide.

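Understand the critical difference between a Lakehouse (Delta tables written mainly with Spark, plus a read-only SQL analytics endpoint) and a Warehouse (full T-SQL read/write, including DDL and DML, over Delta tables stored in OneLake).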

Weeks 3-4: Integrate the Pipeline & Practice Scenarios

This is the most crucial phase. Build a complete analytics pipeline from start to finish:

Pipeline Activity → Notebook (to transform) → Lakehouse (to store) → Warehouse (to model) → Semantic Model → Power BI Report.

Use platforms like MSMCQ.com for targeted, scenario-based DP-600 practice questions. These questions test your decision-making, for example: should you use a Lakehouse shortcut or a Warehouse view for this use case? Analyze every explanation.

Week 5: Deep Dive & Final Simulation

Master Direct Lake Mode: Know its benefits over Import/DirectQuery and its prerequisites.

Practice writing complex T-SQL for analytical queries and optimizing Spark performance.

Take timed, full-length Implementing Analytics Solutions Using Microsoft Fabric practice exams to build stamina and identify final weak spots.