Topic 6: Misc. Questions

You have an Azure virtual machine named VM1 that runs Windows Server 2022 and hosts a Microsoft SQL Server 2019 instance named SQL1. You need to configure SQL1 to use mixed mode authentication. Which procedure should you run?

A. sp_addremotelogin

B. xp_instance_regwrite

C. sp_change_users_login

D. xp_grant_login

You have an Azure virtual machine named VM1 that runs Windows Server 2022 and hosts a Microsoft SQL Server 2019 instance named SQL1.

You need to configure SQL1 to use mixed mode authentication.

Which procedure should you run?

A. sp_addremotelogin

B. xp_grant_login

C. sp_change_users_login

D. xp_instance_regwrite
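For context, the authentication mode of a SQL Server instance is stored in the `LoginMode` registry value (1 = Windows Authentication only, 2 = mixed mode), and the undocumented extended stored procedure `xp_instance_regwrite` can write it while mapping the generic `MSSQLServer` key to the current instance. The sketch below only builds that T-SQL statement as a string; the function name `mixed_mode_tsql` is hypothetical, and the service restart it mentions still has to be done separately.

```python
# Sketch: build the T-SQL that switches a SQL Server instance to mixed mode.
# xp_instance_regwrite is undocumented; it maps the generic MSSQLServer
# registry path onto the registry key of the instance it runs in.


def mixed_mode_tsql(login_mode: int = 2) -> str:
    """Return an EXEC xp_instance_regwrite statement that sets LoginMode.

    LoginMode 1 = Windows Authentication only; 2 = mixed mode
    (SQL Server and Windows Authentication).
    """
    if login_mode not in (1, 2):
        raise ValueError("LoginMode must be 1 (Windows only) or 2 (mixed mode)")
    return (
        "EXEC xp_instance_regwrite N'HKEY_LOCAL_MACHINE', "
        r"N'Software\Microsoft\MSSQLServer\MSSQLServer', "
        f"N'LoginMode', REG_DWORD, {login_mode};"
    )


# The new mode only takes effect after the SQL Server service restarts.
print(mixed_mode_tsql())
```

Returning a string rather than executing it keeps the sketch runnable without a server; in practice the statement would be run in SQL Server Management Studio or `sqlcmd` by a sysadmin.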

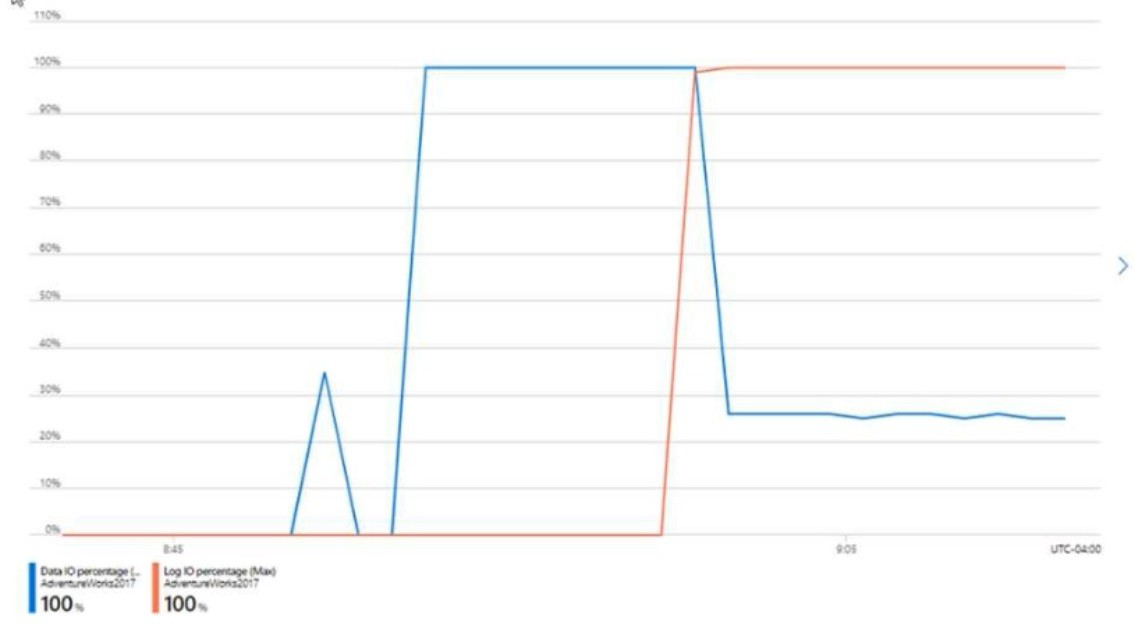

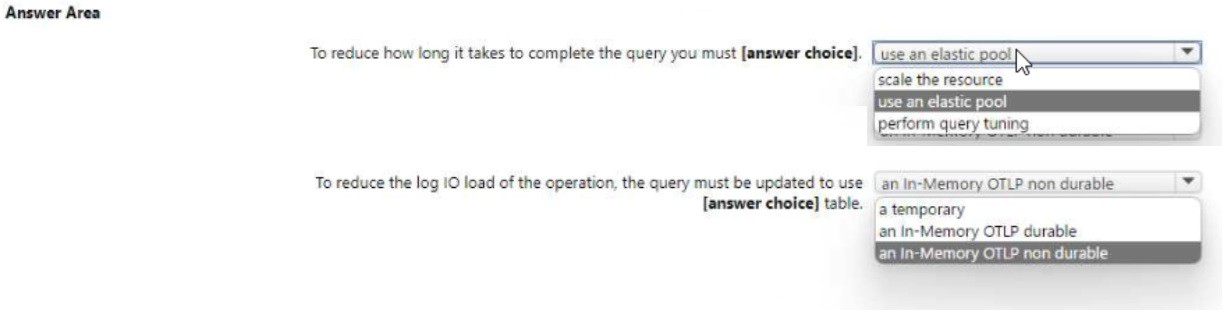

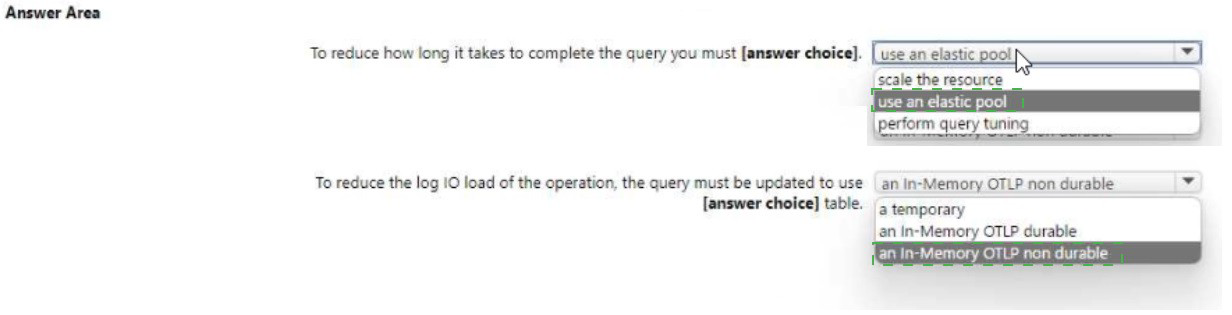

You have an Azure SQL database named DB1 that contains a table named Table1.

You run a query to load data into Table1.

The performance metrics of Table1 during the load operation are shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

You need to recommend a disaster recovery solution for an on-premises Microsoft SQL Server database. The solution must meet the following requirements:

• Support real-time data replication to a different geographic region.

• Use Azure as a disaster recovery target.

• Minimize costs and administrative effort.

What should you include in the recommendation?

A. database mirroring on an instance of SQL Server on Azure Virtual Machines

B. availability groups for SQL Server on Azure Virtual Machines

C. an Azure SQL Managed Instance link

D. transactional replication to an Azure SQL Managed Instance

You have an on-premises datacenter that contains a 14-TB Microsoft SQL Server database.

You plan to create an Azure SQL managed instance and migrate the on-premises database to the new instance.

Which three service tiers support the SQL managed instance? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. General Purpose Standard

B. Business Critical Premium

C. Business Critical Memory Optimized Premium

D. General Purpose Premium

E. Business Critical Standard


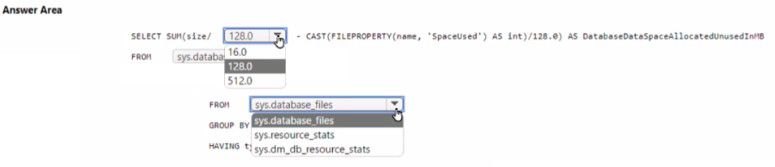

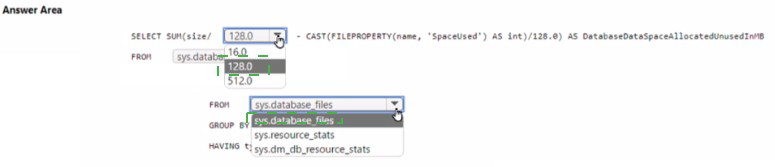

You have an Azure SQL database named DB1.

You need to identify how much unused space, in megabytes, was allocated to DB1.

How should you complete the Transact-SQL query? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
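The arithmetic behind this kind of query: `sys.database_files` reports `size` in 8-KB pages, and `FILEPROPERTY(name, 'SpaceUsed')` returns used pages, so dividing by 128 converts pages to MB (1024 KB / 8 KB = 128). The sketch below reproduces that calculation in Python for data (`ROWS`) files only; the tuple layout and the sample numbers are hypothetical stand-ins for rows returned by the catalog view.

```python
from typing import Iterable, Tuple

# SQL Server pages are 8 KB, so 1 MB = 1024 KB / 8 KB = 128 pages.
PAGES_PER_MB = 128


def unused_mb(files: Iterable[Tuple[str, int, int]]) -> float:
    """Sum allocated-but-unused space, in MB, across data (ROWS) files.

    Each tuple stands in for one row of sys.database_files:
    (type_desc, size_in_pages, space_used_in_pages).
    """
    return sum(
        size / PAGES_PER_MB - used / PAGES_PER_MB
        for type_desc, size, used in files
        if type_desc == "ROWS"  # log files are excluded, as in a ROWS-only query
    )


# Hypothetical sample: a 100-MB data file with 50 MB used, plus a log file.
sample = [("ROWS", 12800, 6400), ("LOG", 1024, 100)]
print(unused_mb(sample))  # 50.0
```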

You have an Azure subscription.

You create a logical SQL server that hosts four databases. Each database will be used by a separate customer.

You need to ensure that each customer can access only its own database. The solution must minimize administrative effort.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Create a network security group (NSG).

B. Create a server-level firewall rule.

C. Create a private endpoint.

D. Create a database-level firewall rule.

E. Deny public access.
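The distinction between the two firewall scopes can be modeled in a few lines. For Azure SQL Database, the firewall first checks the client IP against the database-level rules of the requested database and only then against the server-level rules, which grant access to every database on the logical server. The sketch below is a simplified model under that assumption: it ignores authentication, private endpoints, and the "Allow Azure services" setting, and the customer database names and addresses (documentation ranges) are hypothetical.

```python
from ipaddress import ip_address
from typing import Dict, List, Tuple

# An inclusive (start_ip, end_ip) range, as in an Azure SQL firewall rule.
IpRange = Tuple[str, str]


def _in_range(client_ip: str, rule: IpRange) -> bool:
    start, end = rule
    return ip_address(start) <= ip_address(client_ip) <= ip_address(end)


def is_allowed(
    client_ip: str,
    database: str,
    db_rules: Dict[str, List[IpRange]],
    server_rules: List[IpRange],
) -> bool:
    """Simplified model of Azure SQL IP firewall evaluation."""
    # 1. Database-level rules are checked first and apply only to that database.
    if any(_in_range(client_ip, r) for r in db_rules.get(database, [])):
        return True
    # 2. Otherwise fall back to server-level rules, which cover every database.
    return any(_in_range(client_ip, r) for r in server_rules)


# Hypothetical customers and documentation-range IP addresses.
db_rules = {
    "CustomerA": [("203.0.113.10", "203.0.113.10")],
    "CustomerB": [("198.51.100.0", "198.51.100.255")],
}
print(is_allowed("203.0.113.10", "CustomerA", db_rules, []))  # True
print(is_allowed("203.0.113.10", "CustomerB", db_rules, []))  # False
```

Passing a server-level range that contains 203.0.113.10 would make the second call return True as well, which is why a server-level rule cannot confine a customer to a single database.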


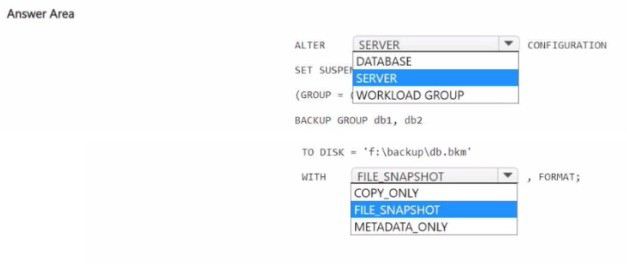

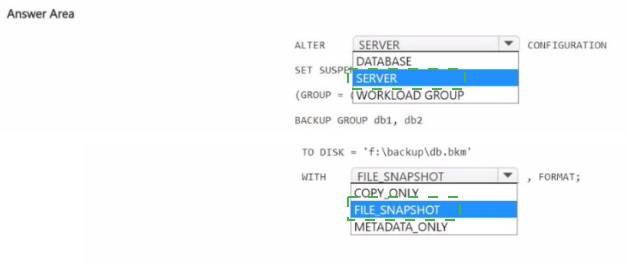

You have a SQL Server on Azure Virtual Machines instance named SQLVM1 that contains two databases named DB1 and DB2. The database and log files for DB1 and DB2 are hosted on managed disks.

You need to perform a snapshot backup of DB1 and DB2.

How should you complete the T-SQL statements? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
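As background, and assuming SQLVM1 runs SQL Server 2022 or later (the version that introduced Transact-SQL snapshot backup; the question does not state the version), a multi-database snapshot backup has three steps: suspend write I/O for the group, snapshot the managed disks outside SQL Server, then write the backup metadata with `METADATA_ONLY`, which also resumes I/O. The sketch below only assembles the two T-SQL statements as strings; the function name and the `.bkm` metadata path are hypothetical.

```python
from typing import List, Sequence


def snapshot_backup_tsql(databases: Sequence[str], metadata_file: str) -> List[str]:
    """Return the two T-SQL steps of a multi-database snapshot backup.

    Between the two statements, the managed disks that hold the data and
    log files must be snapshotted outside SQL Server (for example, in Azure).
    """
    if not databases:
        raise ValueError("at least one database is required")
    group = ", ".join(databases)
    return [
        # Run first: suspend write I/O on every listed database.
        "ALTER SERVER CONFIGURATION SET SUSPEND_FOR_SNAPSHOT_BACKUP = ON "
        f"(GROUP = ({group}));",
        # Run after the disk snapshot: write the backup metadata file;
        # this also resumes write I/O on the suspended databases.
        f"BACKUP GROUP {group} TO DISK = N'{metadata_file}' WITH METADATA_ONLY, FORMAT;",
    ]


for stmt in snapshot_backup_tsql(["DB1", "DB2"], r"D:\Backup\DB1_DB2.bkm"):
    print(stmt)
```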

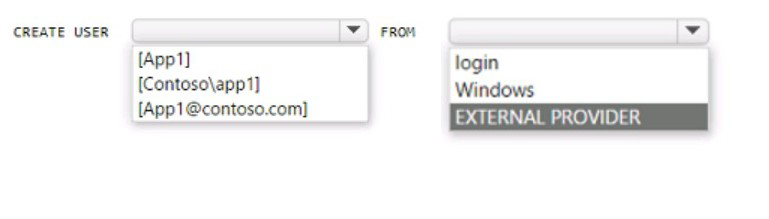

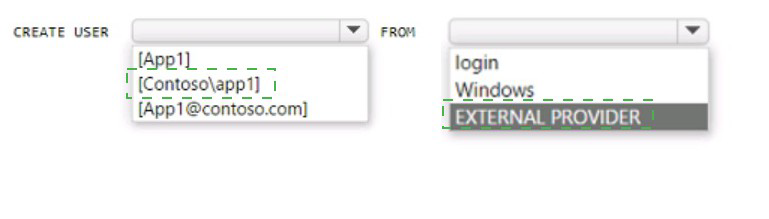

You have an Azure subscription that is linked to an Azure AD tenant named contoso.com.

The subscription contains an Azure SQL database named SQL1 and an Azure web app named app1. App1 has the managed identity feature enabled.

You need to create a new database user for app1.

How should you complete the Transact-SQL statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
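For reference, a database user for an Azure AD identity, including an app's system-assigned managed identity (whose name matches the app), is created with `CREATE USER ... FROM EXTERNAL PROVIDER`, run while connected to the database as an Azure AD principal. The sketch below only builds that statement; the helper names are hypothetical, and `quote_name` imitates the bracket escaping of T-SQL's `QUOTENAME` without its length limit.

```python
def quote_name(identifier: str) -> str:
    """Bracket-quote a T-SQL identifier, doubling any ']' (like QUOTENAME)."""
    return "[" + identifier.replace("]", "]]") + "]"


def create_external_user_tsql(principal: str) -> str:
    """Build CREATE USER ... FROM EXTERNAL PROVIDER for an Azure AD principal."""
    return f"CREATE USER {quote_name(principal)} FROM EXTERNAL PROVIDER;"


print(create_external_user_tsql("app1"))
# CREATE USER [app1] FROM EXTERNAL PROVIDER;
```

Granting permissions (for example, adding the user to a database role) would be a separate statement.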

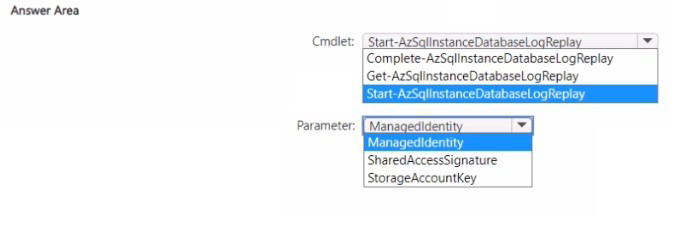

You have an Azure SQL managed instance named Server1 and an Azure Blob Storage account named storage1 that contains Microsoft SQL Server database backup files.

You plan to use Log Replay Service to migrate the backup files from storage1 to Server1.

The solution must use the highest level of security when connecting to storage1.
