Topic 6: Misc. Questions

You have an Azure SQL database named DB1.

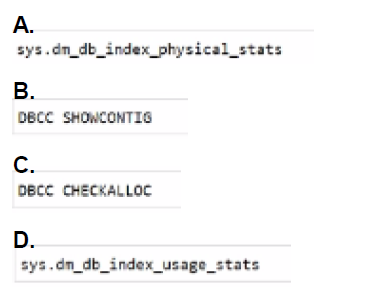

You need to query the fragmentation information of data and indexes for the tables in DB1.

Which command should you run?

A. Option A

B. Option B

C. Option C

D. Option D
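As background for this question, one dynamic management function commonly used to read fragmentation for both heaps and indexes is `sys.dm_db_index_physical_stats`. The sketch below only builds the query text; the helper name `fragmentation_query` and the chosen column list are assumptions for illustration, and running the query through a database driver is left out.

```python
# Hypothetical helper: builds T-SQL text only; it does not connect to a database.
VALID_MODES = {"LIMITED", "SAMPLED", "DETAILED"}


def fragmentation_query(mode: str = "LIMITED") -> str:
    """Return T-SQL that lists fragmentation per index in the current database."""
    mode = mode.upper()
    if mode not in VALID_MODES:
        raise ValueError(f"unsupported scan mode: {mode}")
    return (
        "SELECT OBJECT_NAME(ips.object_id) AS table_name,\n"
        "       i.name AS index_name,\n"
        "       ips.index_type_desc,\n"
        "       ips.avg_fragmentation_in_percent,\n"
        "       ips.page_count\n"
        # DB_ID() with no argument targets the database the session is connected to.
        f"FROM sys.dm_db_index_physical_stats(DB_ID(), NULL, NULL, NULL, '{mode}') AS ips\n"
        "JOIN sys.indexes AS i\n"
        "  ON i.object_id = ips.object_id AND i.index_id = ips.index_id\n"
        "ORDER BY ips.avg_fragmentation_in_percent DESC;"
    )


if __name__ == "__main__":
    print(fragmentation_query())
```

The `LIMITED` scan mode is the cheapest of the three; `SAMPLED` and `DETAILED` read more pages and return more statistics.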

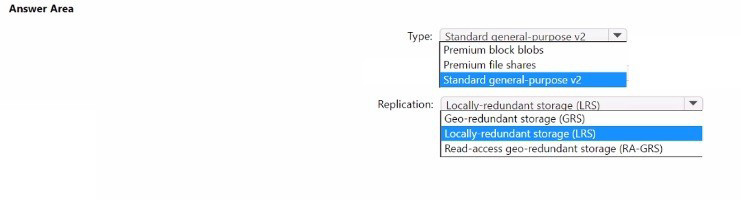

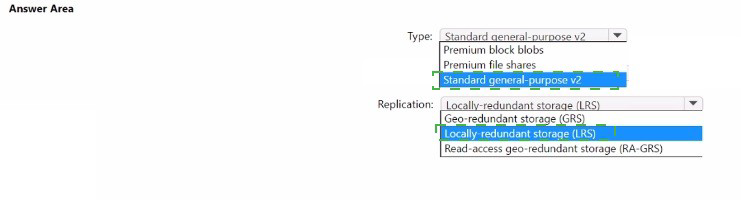

You plan to deploy an Always On failover cluster instance (FCI) on Azure virtual machines.

You need to provision an Azure Storage account to host a cloud witness for the deployment.

How should you configure the storage account? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

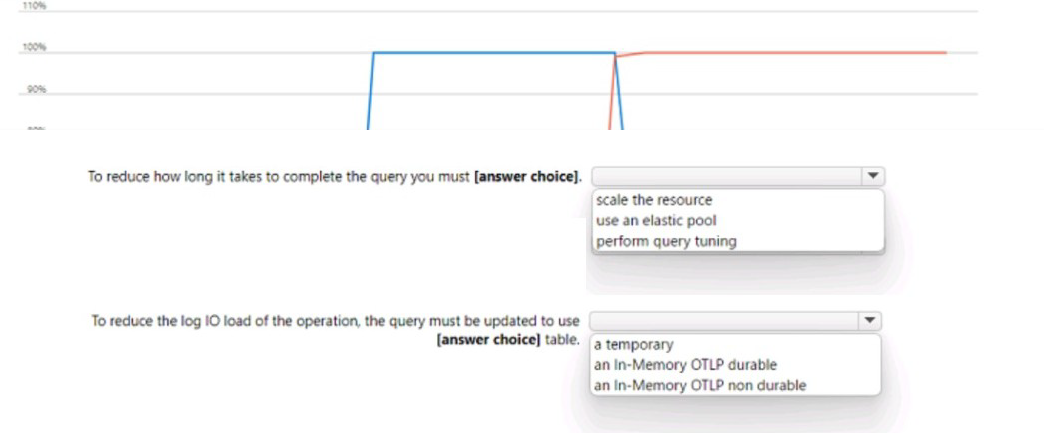

You have an Azure SQL database that contains a table named Table1.

You run a query to load data into Table1.

The performance of Table1 during the load operation is shown in the exhibit.

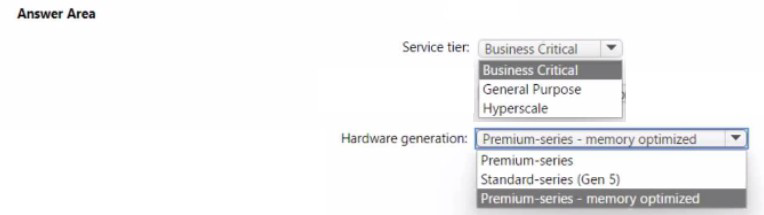

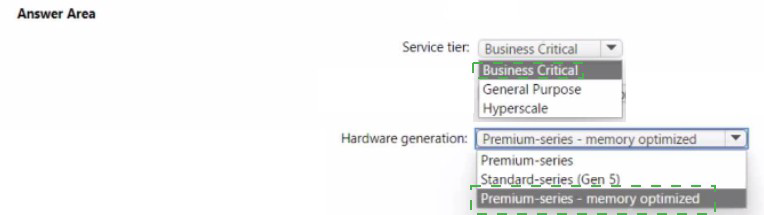

You have an Azure subscription.

You need to deploy an Azure SQL managed instance that meets the following requirements:

• Optimize latency.

• Maximize the memory-to-vCore ratio.

Which service tier and hardware generation should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

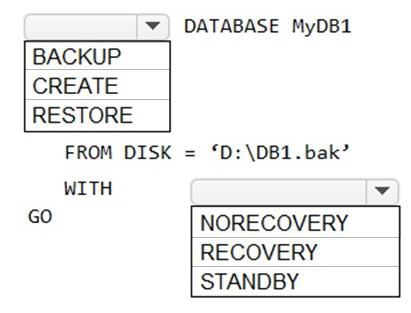

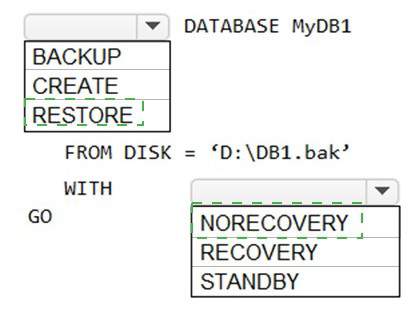

You have two Azure virtual machines named VM1 and VM2 that run Windows Server 2019. VM1 and VM2 each host a default Microsoft SQL Server 2019 instance. VM1 contains a database named DB1 that is backed up to a file named D:\DB1.bak.

You plan to deploy an Always On availability group that will have the following configurations:

• VM1 will host the primary replica of DB1.

• VM2 will host a secondary replica of DB1.

You need to prepare the secondary database on VM2 for the availability group.

How should you complete the Transact-SQL statement? To answer, select the appropriate options in the answer area.
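For context, a secondary database is typically prepared by restoring a backup of the primary database on the secondary server without recovering it, so that it can later be joined to the availability group. The following is a minimal sketch that only composes the statement text. The helper name `restore_for_secondary` is hypothetical, and the sketch assumes the backup file has been made available to VM2 under the same path, which the question does not state.

```python
# Hypothetical helper: returns T-SQL text; it does not execute anything.
def restore_for_secondary(database: str, backup_path: str) -> str:
    """Build a RESTORE statement that leaves the database in the restoring state."""
    # Escape characters that would otherwise end the identifier or string literal.
    safe_name = database.replace("]", "]]")
    safe_path = backup_path.replace("'", "''")
    # WITH NORECOVERY keeps the database unrecovered so it can join the group.
    return (
        f"RESTORE DATABASE [{safe_name}] "
        f"FROM DISK = N'{safe_path}' WITH NORECOVERY;"
    )


if __name__ == "__main__":
    # Assumed path: the same location as on VM1.
    print(restore_for_secondary("DB1", r"D:\DB1.bak"))
```

Restoring `WITH RECOVERY` instead would bring the database online on VM2, and it could then no longer be joined as a secondary replica without being restored again.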

You have an Azure subscription.

You need to deploy two instances of SQL Server on Azure virtual machines in a highly available configuration that will use an Always On availability group. The solution must meet the following requirements:

• Minimize how long it takes to fail over.

• Maintain existing connections to the primary replica during a failover.

What should you do?

A. Connect each virtual machine to a single subnet on a single virtual network.

B. Connect each virtual machine to a single subnet on a virtual network. Deploy a standard Azure load balancer.

C. Connect each virtual machine to a different subnet on a single virtual network.

D. Connect each virtual machine to a different subnet on a virtual network. Deploy a basic Azure load balancer.

You have two Azure virtual machines named Server1 and Server2 that run Windows Server 2022 and are joined to an Active Directory Domain Services (AD DS) domain named contoso.com.

Both virtual machines have a default instance of Microsoft SQL Server 2019 installed.

Server1 is configured as a master server, and Server2 is configured as a target server.

On Server1, you create a proxy account named contoso\sqlproxy.

You need to ensure that the SQL Server Agent job steps can be downloaded from Server1 and run on Server2.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. On Server2, grant the contoso\sqlproxy account the Impersonate a client after authentication user right.

B. On Server2, grant the contoso\sqlproxy account the Access this computer from the network user right.

C. On Server2, create a proxy account.

D. On Server1, set the AllowDownloadedJobsToMatchProxyName registry entry to 1.

E. On Server2, set the AllowDownloadedJobsToMatchProxyName registry entry to 1.


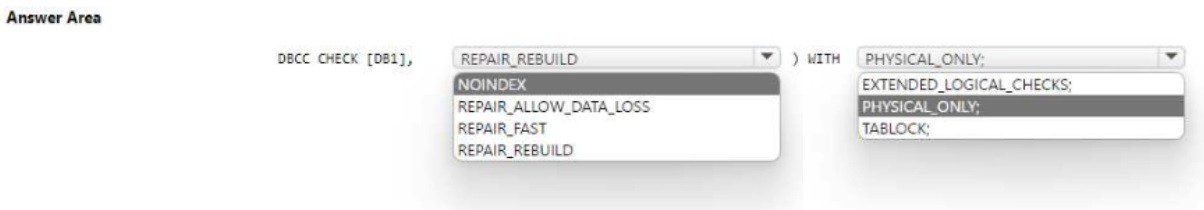

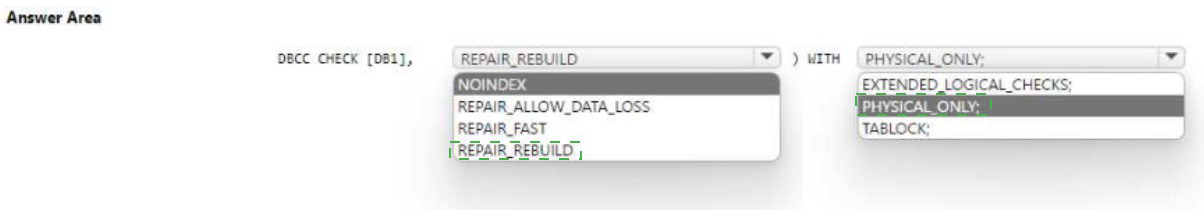

You have a SQL Server on Azure Virtual Machines instance that hosts a 10-TB SQL database named DB1.

You need to identify and repair any physical or logical corruption in DB1. The solution must meet the following requirements:

• Minimize how long it takes to complete the procedure.

• Minimize data loss.

How should you complete the command? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You have an Azure subscription that contains a SQL Server on Azure Virtual Machines instance named SQLVM1. SQLVM1 has the following configurations:

• Automated patching is enabled.

• The SQL Server IaaS Agent extension is installed.

• The Microsoft SQL Server instance on SQLVM1 is managed by using the Azure portal.

You need to automate the deployment of cumulative updates to SQLVM1 by using Azure Update Manager. The solution must ensure that the SQL Server instance on SQLVM1 can be managed by using the Azure portal.

What should you do first on SQLVM1?

A. Install the Azure Monitor Agent.

B. Uninstall the SQL Server IaaS Agent extension.

C. Install the Log Analytics agent.

D. Set Automated patching to Disable.

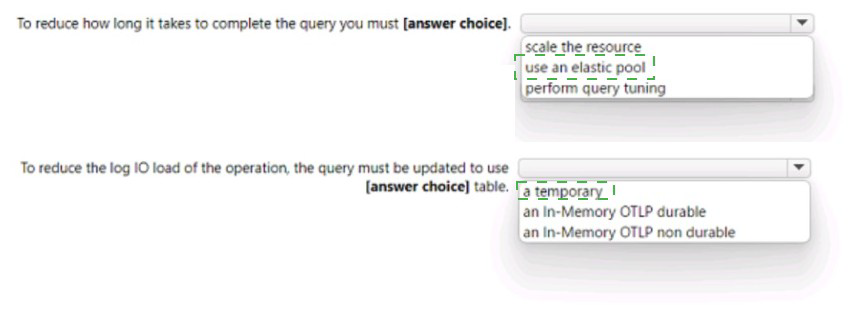

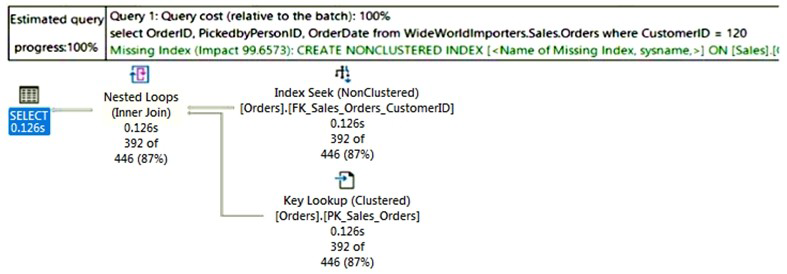

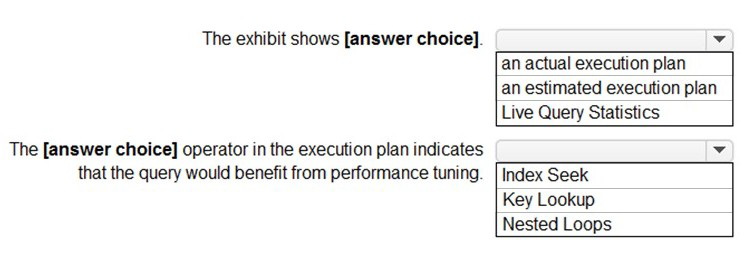

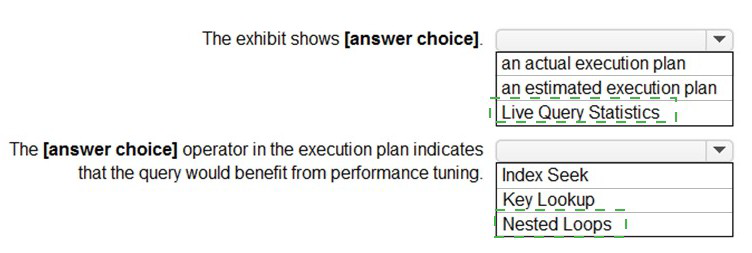

You have an Azure SQL database.

You are reviewing a slow-performing query as shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.
