Topic 6: Misc. Questions

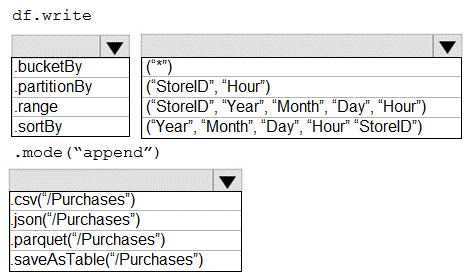

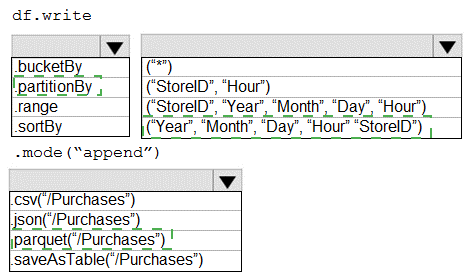

You plan to develop a dataset named Purchases by using Azure Databricks. Purchases will contain the following columns:

• ProductID

• ItemPrice

• LineTotal

• Quantity

• StoreID

• Minute

• Month

• Hour

• Year

• Day

You need to store the data to support hourly incremental load pipelines that will vary for each StoreID. The solution must minimize storage costs.

How should you complete the code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

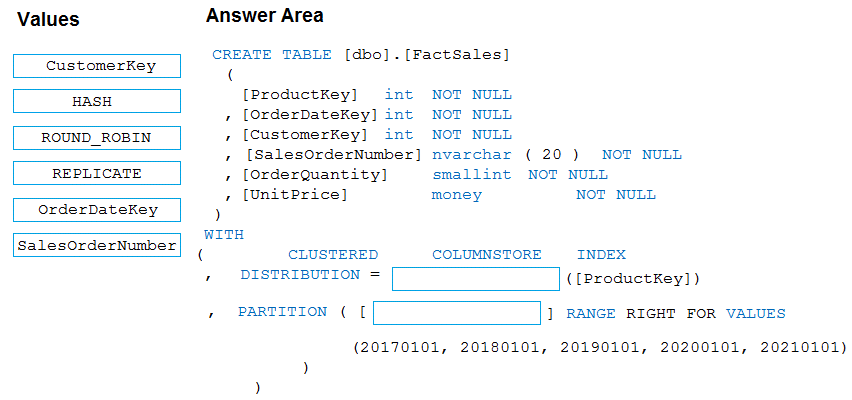

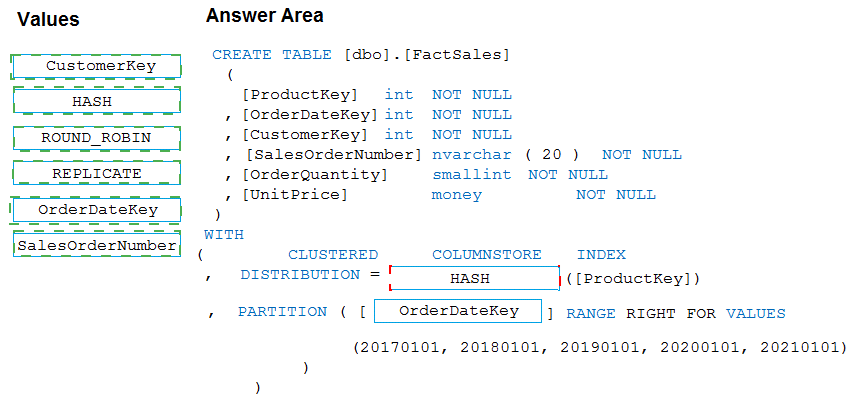
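As a rough illustration of how partition columns map to storage folders, the following plain-Python sketch builds a Hive-style directory path for one row. The column order (StoreID first, then Year, Month, Day, Hour) and the `/mnt/purchases` base path are assumptions made for illustration only; they are not taken from the exhibit. In PySpark the comparable step would be `DataFrameWriter.partitionBy`, which is not executed here.

```python
# Hypothetical partition order: store first, then the time hierarchy down to the hour.
# Minute is deliberately left out in this sketch, since a folder per minute
# would produce a very large number of small files.
PARTITION_COLUMNS = ["StoreID", "Year", "Month", "Day", "Hour"]


def partition_path(base, row):
    """Build a Hive-style directory path (col=value/...) for a single row."""
    parts = [f"{col}={row[col]}" for col in PARTITION_COLUMNS]
    return "/".join([base.rstrip("/")] + parts)


# Example row using the column names from the question; the values are made up.
row = {
    "ProductID": 1001, "ItemPrice": 2.5, "Quantity": 4, "LineTotal": 10.0,
    "StoreID": 42, "Year": 2024, "Month": 3, "Day": 7, "Hour": 15, "Minute": 9,
}
print(partition_path("/mnt/purchases", row))
# prints /mnt/purchases/StoreID=42/Year=2024/Month=3/Day=7/Hour=15
```

With a layout like this, an hourly load for one store only needs to read or write a single leaf folder.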

You plan to create a table in an Azure Synapse Analytics dedicated SQL pool.

Data in the table will be retained for five years. Once a year, data that is older than five years will be deleted.

You need to ensure that the data is distributed evenly across partitions. The solution must minimize the amount of time required to delete old data.

How should you complete the Transact-SQL statement? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

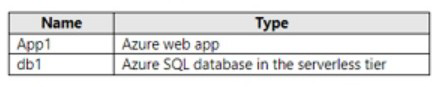
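To make the retention scenario more concrete, here is a minimal Python sketch that generates one partition boundary per calendar year. It assumes the table is partitioned on a date column with yearly boundaries, which is an assumption for illustration and not something the question text states; the function name and the start year are hypothetical.

```python
from datetime import date


def yearly_boundaries(first_year, years):
    """Return one ISO date boundary (January 1) per year, oldest first."""
    return [date(first_year + i, 1, 1).isoformat() for i in range(years)]


# Hypothetical five-year window starting in 2020.
print(yearly_boundaries(2020, 5))
# prints ['2020-01-01', '2021-01-01', '2022-01-01', '2023-01-01', '2024-01-01']
```

When each year sits in its own partition, the oldest year can be removed as a whole partition instead of through a row-by-row delete.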

You have an Azure subscription that contains the resources shown in the following table.

App1 experiences transient connection errors and timeouts when it attempts to access db1 after extended periods of inactivity. You need to modify db1 to resolve the issues experienced by App1 as soon as possible, without considering immediate costs. What should you do?

A. Increase the number of vCores allocated to db1.

B. Disable auto-pause delay for db1.

C. Decrease the auto-pause delay for db1.

D. Enable automatic tuning for db1.

You have an Azure virtual machine named Server1 that has Microsoft SQL Server installed. Server1 contains a database named DB1.

You have a logical SQL server named ASVR1 that contains an Azure SQL database named ADB1.

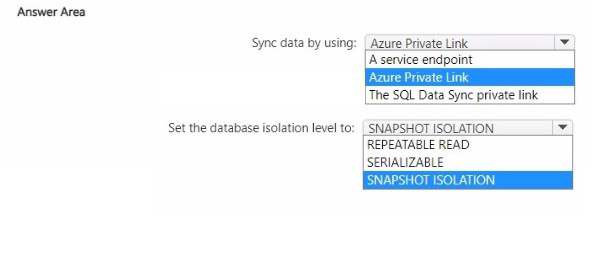

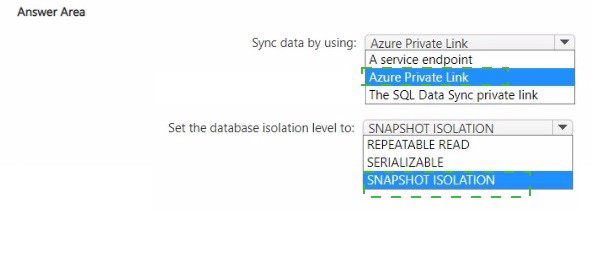

You plan to use SQL Data Sync to migrate DB1 from Server1 to ASVR1.

You need to prepare the environment for the migration. The solution must ensure that the connection from Server1 to ADB1 does NOT use a public endpoint.

What should you do? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You have an Azure SQL database named DB1.

You need to encrypt DB1. The solution must meet the following requirements:

• Encrypt data in motion.

• Support comparison operators.

• Provide randomized encryption.

What should you include in the solution?

A. Always Encrypted

B. column-level encryption

C. Transparent Data Encryption (TDE)

D. Always Encrypted with secure enclaves

You have an on-premises datacenter that contains a 2-TB Microsoft SQL Server 2019 database named DB1.

You need to recommend a solution to migrate DB1 to an Azure SQL managed instance. The solution must minimize downtime and administrative effort.

What should you include in the recommendation?

A. Log Replay Service (LRS)

B. log shipping

C. transactional replication

D. SQL Data Sync

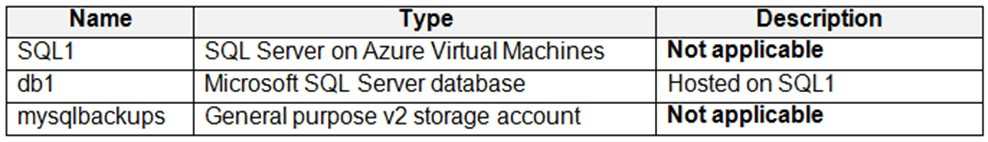

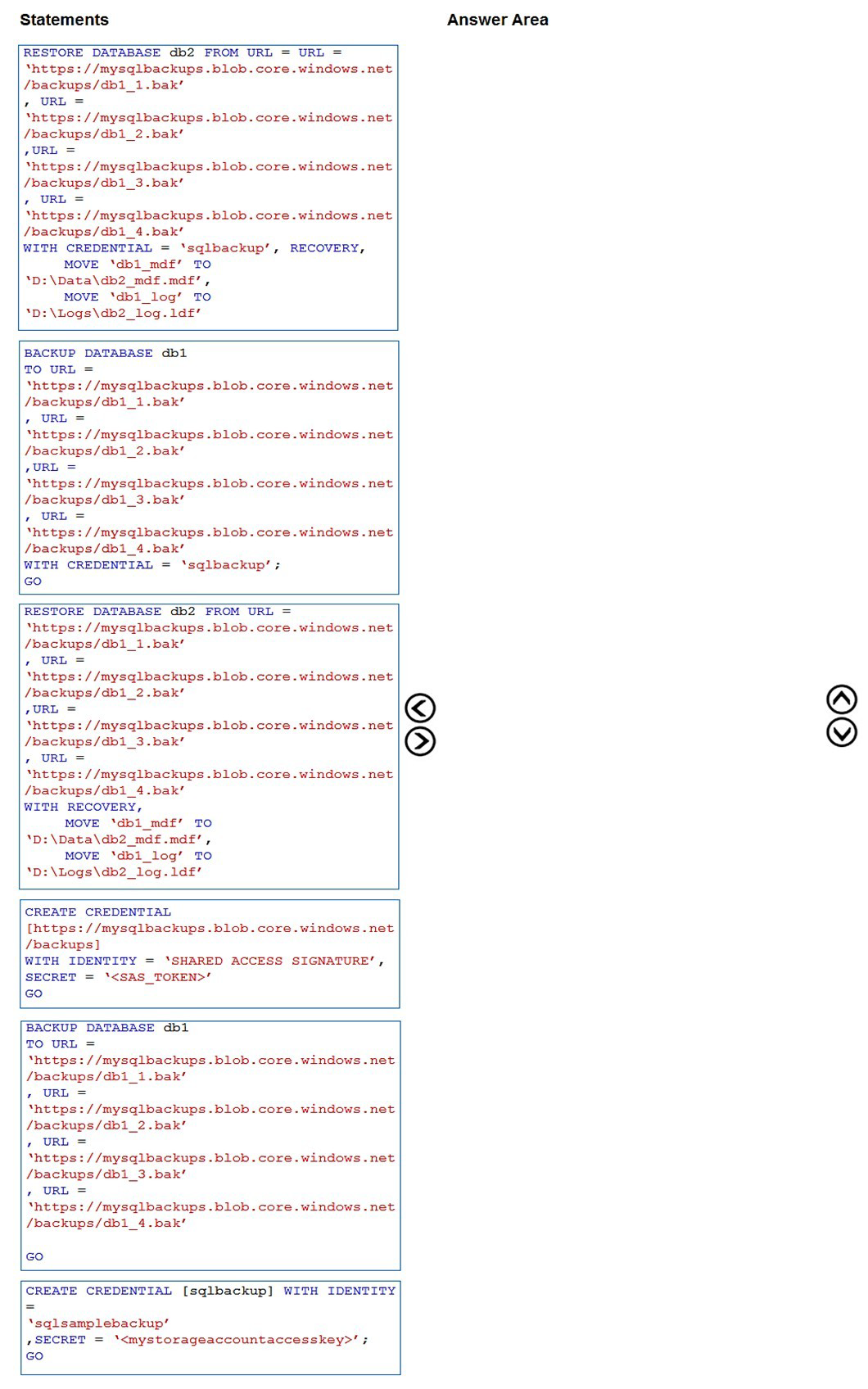

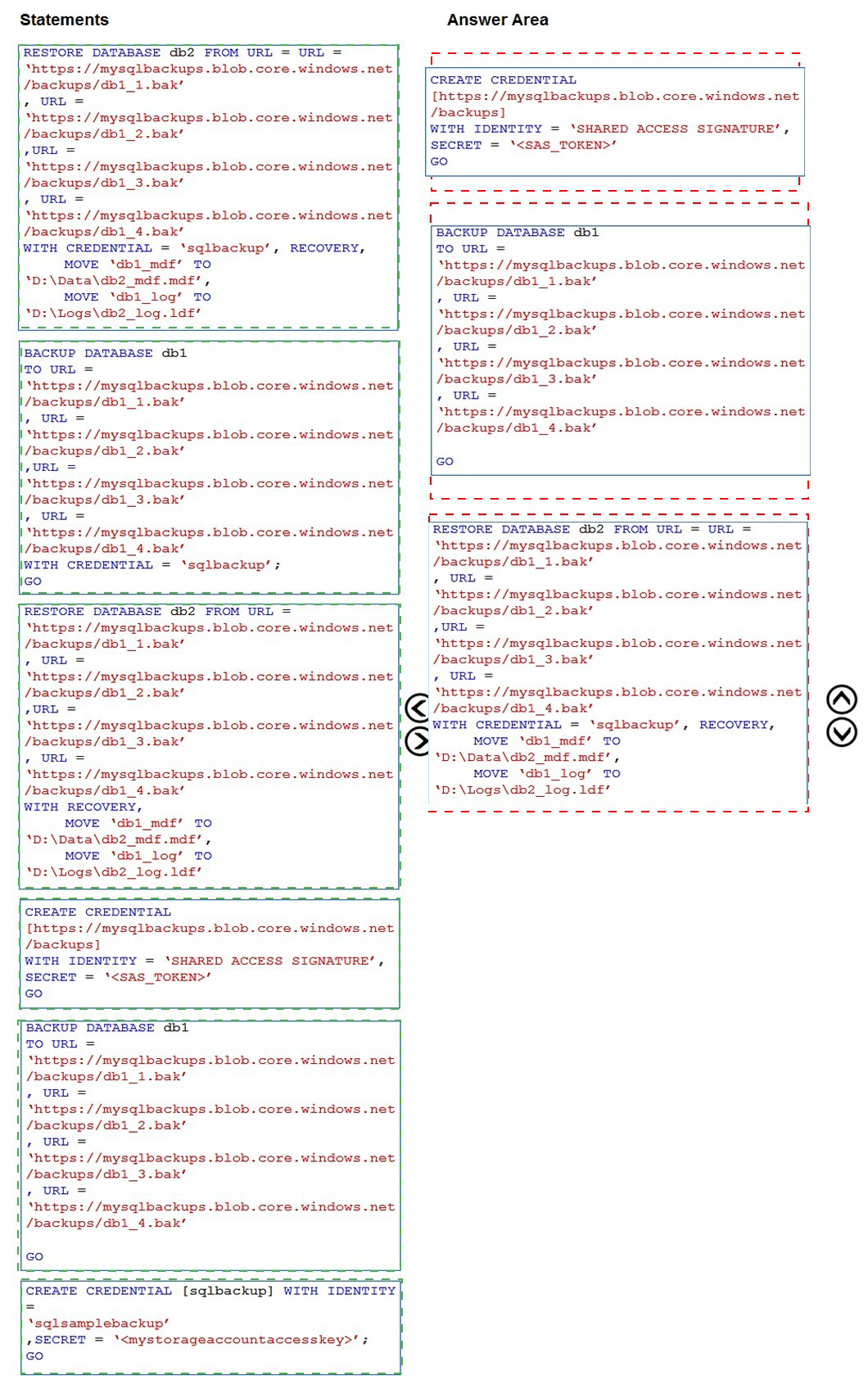

You have an Azure subscription that contains the resources shown in the following table.

You need to back up db1 to mysqlbackups, and then restore the backup to a new database named db2 that is hosted on SQL1. The solution must ensure that db1 is backed up to a stripe set.

Which three Transact-SQL statements should you execute in sequence? To answer, move the appropriate statements from the list of statements to the answer area and arrange them in the correct order.

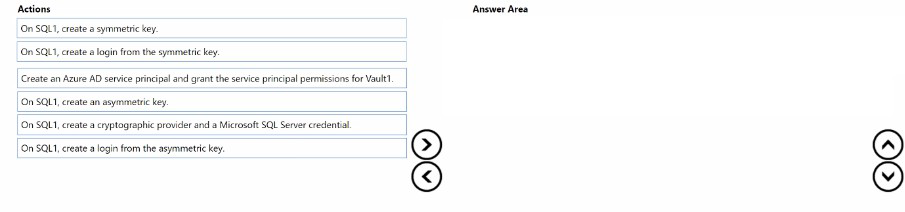

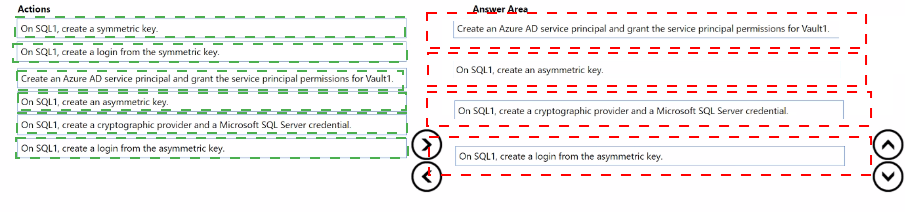

You have an Azure key vault named Vault1 and a SQL Server on Azure Virtual Machines instance named SQL1. SQL1 hosts a database named DB1.

You need to configure Transparent Data Encryption (TDE) on DB1 to use a key in Vault1.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

You have an on-premises Microsoft SQL Server 2022 instance that hosts a 60-TB

production database named DB1.

You plan to migrate DB1 to Azure.

You need to recommend a hosting solution for DB1.

Which Azure SQL Database service tier should you use to host DB1?

A. Hyperscale

B. Business Critical

C. General Purpose

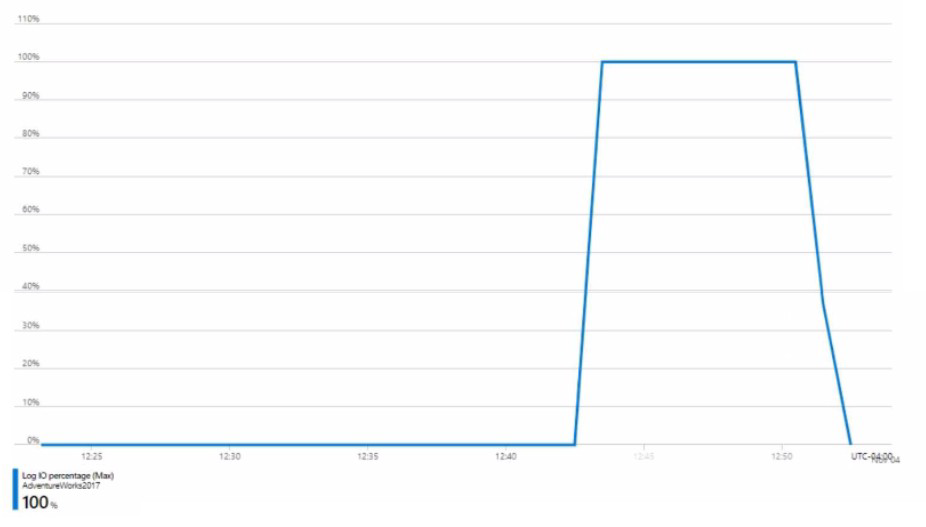

You have an Azure SQL database named DB1 in the General Purpose service tier.

The performance metrics for DB1 are shown in the following exhibit.

You need to reduce the Log IO percentage. The solution must minimize costs.

What should you do?

A. Increase the number of vCores.

B. Change Recovery model to Simple.

C. Perform a checkpoint operation.

D. Change Service tier to Business Critical.
