Topic 5: Misc. Questions

You need to implement the Azure RBAC role assignment. The solution must meet the authentication and authorization requirements.

How many assignments should you configure for the Network Contributor role for Role1? To answer, select the appropriate options in the answer area.

You have an Azure Active Directory (Azure AD) tenant that syncs with an on-premises Active Directory domain.

Your company has a line-of-business (LOB) application that was developed internally.

You need to implement SAML single sign-on (SSO) and enforce multi-factor authentication (MFA) when users attempt to access the application from an unknown location.

Which two features should you include in the solution? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

A. Azure AD enterprise applications

B. Azure AD Identity Protection

C. Azure Application Gateway

D. Conditional Access policies

E. Azure AD Privileged Identity Management (PIM)

A. Azure AD enterprise applications

D. Conditional Access policies

Explanation:

The goal is to secure an internally developed LOB application. The SAML SSO standard requires configuring the application in Azure AD. Once configured, controlling how users access it (e.g., requiring MFA from unknown locations) requires defining and enforcing policies based on user risk, device state, or location.

Correct Options:

A. Azure AD enterprise applications

This is the foundational component for configuring SAML-based SSO for any application. You register your LOB app as an Enterprise Application in Azure AD, configure its SAML settings (identifier, reply URL, claims), and assign users. This enables the authentication handshake.

D. Conditional Access policies

Conditional Access is the policy engine that enforces access controls such as MFA based on sign-in signals. You create a policy that targets the specific Enterprise Application (the LOB app) and configure the Locations condition to include any location while excluding trusted (named) locations. The policy's grant control then requires multi-factor authentication.
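To make the evaluation order concrete, here is a toy model of how a location-based MFA policy like the one above decides a sign-in. It is a sketch under stated assumptions, not the Microsoft Graph Conditional Access schema: the class names, fields, location names, and result strings ("require_mfa" and so on) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SignIn:
    app_id: str          # enterprise application the user is signing in to (hypothetical ID)
    location: str        # named location resolved from the client IP address
    mfa_completed: bool  # whether the user already satisfied MFA in this session


@dataclass
class LocationMfaPolicy:
    target_app_ids: set                                   # apps the policy is assigned to
    trusted_locations: set = field(default_factory=set)   # excluded (trusted) named locations

    def evaluate(self, sign_in: SignIn) -> str:
        # The policy is out of scope for apps it does not target.
        if sign_in.app_id not in self.target_app_ids:
            return "not_applied"
        # Trusted locations are excluded from the Locations condition.
        if sign_in.location in self.trusted_locations:
            return "not_applied"
        # Unknown location: the grant control requires MFA.
        return "grant" if sign_in.mfa_completed else "require_mfa"


policy = LocationMfaPolicy(target_app_ids={"lob-app"}, trusted_locations={"Head office"})
print(policy.evaluate(SignIn("lob-app", "Hotel Wi-Fi", mfa_completed=False)))  # require_mfa
```

In the real service, several policies can apply to one sign-in and all of their grant controls must be satisfied; this sketch models a single policy only.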

Incorrect Options:

B. Azure AD Identity Protection

While Identity Protection can detect risky sign-ins and user risk, it is primarily a detection and automated remediation tool. It is not the direct method to enforce MFA based on a specific, defined condition like "unknown location." Conditional Access policies are the correct control plane that can consume Identity Protection risk signals.

C. Azure Application Gateway

This is a Layer 7 load balancer with an optional web application firewall (WAF). It operates at the network/application delivery tier and does not configure SAML SSO or enforce identity-based MFA policies; that configuration happens in Azure AD.

E. Azure AD Privileged Identity Management (PIM)

PIM is used for Just-In-Time (JIT) and time-bound elevation of administrator privileges to Azure AD or Azure resources. It is unrelated to enforcing MFA for regular users accessing a standard LOB application.

Reference:

Microsoft Learn: Plan a Conditional Access deployment - Core documentation on using Conditional Access policies.

Microsoft Learn: Tutorial: Configure SAML-based single sign-on (SSO) for an application in Azure Active Directory - Shows how to configure SSO in Enterprise Applications.

You have an on-premises network to which you deploy a virtual appliance.

You plan to deploy several Azure virtual machines and connect the on-premises network to Azure by using a Site-to-Site connection.

All network traffic that will be directed from the Azure virtual machines to a specific subnet must flow through the virtual appliance.

You need to recommend solutions to manage network traffic.

Which two options should you recommend? Each correct answer presents a complete solution.

A. Configure Azure Traffic Manager.

B. Implement an Azure virtual network.

C. Implement Azure ExpressRoute.

D. Configure a routing table.

C. Implement Azure ExpressRoute.

D. Configure a routing table.

Explanation:

The goal is to force traffic from Azure VMs to a specific on-premises subnet through a virtual appliance (a network virtual appliance, or NVA). This requires a reliable, private connection to Azure and the ability to override Azure's default system routing. A Site-to-Site VPN is already planned.

Correct Options:

D. Configure a routing table.

This is the mandatory component for traffic steering. You create a User-Defined Route (UDR) table, associate it with the subnet containing the Azure VMs, and add a route. This route specifies the destination on-premises subnet and sets the next hop as the private IP address of the virtual appliance, forcing all traffic to that destination through the NVA.
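Why a user-defined route wins can be sketched as a route-selection function. Azure picks the effective route by longest prefix match, and when prefixes are equal it prefers user-defined routes over BGP routes over system routes. The sketch below is a simplified model of that rule, not Azure's implementation; all prefixes and the appliance IP 10.0.2.4 are hypothetical.

```python
import ipaddress
from dataclasses import dataclass

# For equal prefix lengths, user-defined routes beat BGP routes, which beat system routes.
SOURCE_PRIORITY = {"user": 0, "bgp": 1, "system": 2}


@dataclass
class Route:
    prefix: str    # destination address prefix, e.g. "192.168.10.0/24"
    next_hop: str  # next hop type (plus the appliance IP for VirtualAppliance)
    source: str    # "user", "bgp", or "system"


def select_route(routes, destination):
    """Pick the effective route for a destination IP by longest prefix match."""
    dest = ipaddress.ip_address(destination)
    matches = [r for r in routes if dest in ipaddress.ip_network(r.prefix)]
    if not matches:
        return None
    # Longest prefix first; ties broken by route source priority.
    return min(
        matches,
        key=lambda r: (-ipaddress.ip_network(r.prefix).prefixlen, SOURCE_PRIORITY[r.source]),
    )


routes = [
    Route("0.0.0.0/0", "Internet", "system"),
    Route("10.0.0.0/16", "VnetLocal", "system"),
    Route("192.168.10.0/24", "VirtualNetworkGateway", "bgp"),
    Route("192.168.10.0/24", "VirtualAppliance 10.0.2.4", "user"),
]
print(select_route(routes, "192.168.10.25").next_hop)  # VirtualAppliance 10.0.2.4
```

Traffic to any other destination keeps following the system or BGP routes, which is why the UDR only needs an entry for the specific subnet.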

C. Implement Azure ExpressRoute.

While the scenario plans to use a Site-to-Site VPN, ExpressRoute is a valid alternative complete solution, as the question requires. ExpressRoute provides a private, high-bandwidth connection and uses BGP to exchange routes between on-premises and Azure. By advertising a route for the specific subnet with the virtual appliance as the next hop, you achieve the same traffic steering as a user-defined route.

Incorrect Options:

A. Configure Azure Traffic Manager.

Traffic Manager is a DNS-based global load balancer. It answers DNS queries to direct users to the closest or healthiest endpoint (web apps, public IPs). It cannot control how packets are routed from Azure VMs to an on-premises subnet.

B. Implement an Azure virtual network.

While an Azure Virtual Network (VNet) is a foundational prerequisite for deploying Azure VMs and connecting on-premises networks, it is not a complete solution by itself. A VNet provides the environment but does not contain the mechanism to override default routing and force traffic through a specific appliance. This option is necessary but insufficient.

Reference:

Microsoft Learn: Virtual network traffic routing - Explains system routes and how User-Defined Routes (UDRs) override them.

Microsoft Learn: What is Azure ExpressRoute? - Details how ExpressRoute uses BGP for routing, enabling next-hop manipulation.

You have an Azure App Service web app named Webapp1 that connects to an Azure SQL database named DB1. Webapp1 and DB1 are deployed to the East US Azure region.

You need to ensure that all the traffic between Webapp1 and DB1 is sent via a private connection.

What should you do? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

To ensure all traffic between Webapp1 (App Service) and DB1 (SQL Database) is private and stays on the Azure backbone network, you must keep it off the public internet. This is achieved by connecting Webapp1 to an Azure Virtual Network (VNet) through VNet Integration and giving DB1 a Private Endpoint in the same VNet. A Private Endpoint provides a private IP address from your VNet for the Azure service (the SQL logical server that hosts DB1), making it reachable as if it were a local resource within the network.

Correct Options:

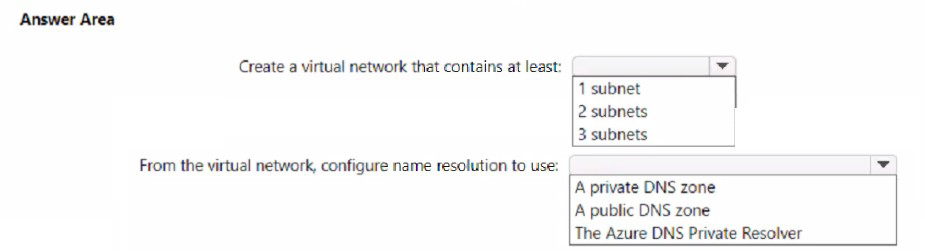

Create a virtual network that contains at least: 2 subnets

You need one subnet for the App Service to integrate into (using VNet Integration) and a separate subnet to host the Private Endpoint for the SQL database. The VNet Integration subnet is delegated to App Service (Microsoft.Web/serverFarms) and reserved for that purpose, so the Private Endpoint needs its own subnet.

From the virtual network, configure name resolution to use: A private DNS zone

Clients keep connecting to the logical server's FQDN, for example <server>.database.windows.net. Once a Private Endpoint exists, that name is answered with a CNAME to <server>.privatelink.database.windows.net. To make the privatelink name resolve to the Private Endpoint's private IP address (instead of the public IP), you link an Azure Private DNS zone named privatelink.database.windows.net to your VNet.
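The CNAME chain described above can be modeled with a small resolver. This is a simplified sketch, not Azure DNS: the server name sqlserver1 and both IP addresses are hypothetical, and the real public chain contains additional CNAMEs to a regional gateway. The key behavior it shows is that a private zone linked to the VNet answers for names inside that zone, so the same FQDN resolves to the private IP inside the VNet and to the public IP outside it.

```python
def resolve(name, public_records, private_zones, max_hops=5):
    """Follow CNAMEs until an A record is found.

    private_zones maps a zone linked to the VNet to {label: (type, value)}.
    Names inside a linked zone are answered only from that zone.
    """
    for _ in range(max_hops):
        zone = next((z for z in private_zones if name.endswith("." + z)), None)
        if zone is not None:
            label = name[: -(len(zone) + 1)]
            record_type, value = private_zones[zone][label]
        else:
            record_type, value = public_records[name]
        if record_type == "A":
            return value
        name = value  # CNAME: continue with the alias target
    raise RuntimeError("CNAME chain too long")


public_records = {
    "sqlserver1.database.windows.net": ("CNAME", "sqlserver1.privatelink.database.windows.net"),
    "sqlserver1.privatelink.database.windows.net": ("A", "203.0.113.10"),  # public IP
}
private_zones = {
    "privatelink.database.windows.net": {"sqlserver1": ("A", "10.0.2.5")},  # Private Endpoint IP
}

print(resolve("sqlserver1.database.windows.net", public_records, private_zones))  # 10.0.2.5
print(resolve("sqlserver1.database.windows.net", public_records, {}))             # 203.0.113.10
```

Because the application keeps using the original FQDN, the connection string in Webapp1 does not need to change.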

Incorrect Options:

1 subnet / 3 subnets:

One subnet is insufficient because the VNet Integration subnet is reserved for App Service and cannot also host the Private Endpoint. Three subnets are not required for this core scenario, though they could be used for other reasons.

A public DNS zone:

This would resolve names to public IP addresses, defeating the purpose of the private connection.

The Azure DNS Private Resolver:

While the Private Resolver is used for hybrid DNS resolution (e.g., to resolve on-premises names from Azure), it is not the correct service for resolving the private FQDN of an Azure PaaS service's Private Endpoint. That is the specific role of an Azure Private DNS zone.

Reference:

Microsoft Learn: What is Azure Private Endpoint?

Microsoft Learn: Integrate your app with an Azure virtual network

Microsoft Learn: Azure Private Endpoint DNS configuration - Specifically covers the required use of Private DNS zones.

You deploy two instances of an Azure web app. One instance is in the East US Azure region and the other instance is in the West US Azure region. The web app uses Azure Blob storage to deliver large files to end users.

You need to recommend a solution for delivering the files to the users. The solution must meet the following requirements:

Ensure that the users receive files from the same region as the web app that they access.

Ensure that the files only need to be updated once.

Minimize costs.

What should you include in the recommendation?

A. Azure File Sync

B. Distributed File System (DFS)

C. read-access geo-redundant storage (RA-GRS)

D. geo-redundant storage (GRS)

Explanation:

The goal is to serve static files (blobs) from a storage account to users in two different regions, ensuring low latency by serving from the user's local region. The files must be globally consistent ("updated once") and the solution must be cost-effective. This describes the primary use case for geo-replicated storage with read access to the secondary region.

Correct Option:

C. Read-access geo-redundant storage (RA-GRS)

Meets all requirements:

RA-GRS replicates blob data asynchronously from a primary region (e.g., East US) to a secondary region (e.g., West US). The key feature is read access to the secondary region.

Local Delivery:

You can configure your West US web app to read files from the secondary endpoint of the storage account, ensuring users in West US get files from their local, secondary region copy.

Update Once:

You upload/update files only to the primary region endpoint. The replication to the secondary happens automatically, meeting the "update once" requirement.

Cost Minimization:

RA-GRS is a built-in, platform-managed replication option. It is far more cost-effective than running Azure File Sync or a custom DFS deployment, and it incurs only the RA-GRS storage and geo-replication charges plus any data egress when reading from the secondary.
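The "Local Delivery" and "Update Once" points above come down to which endpoint each web app instance uses. RA-GRS exposes the secondary as a read-only endpoint with a "-secondary" suffix on the account name. The sketch below assumes a hypothetical account name (contosofiles) and region labels; it only builds URLs and does not call the Azure Storage SDK.

```python
def read_endpoint(account: str, app_region: str, primary_region: str, secondary_region: str) -> str:
    """Choose the blob endpoint a web app instance should read from."""
    if app_region == primary_region:
        return f"https://{account}.blob.core.windows.net"
    if app_region == secondary_region:
        # RA-GRS exposes a read-only secondary endpoint with the "-secondary" suffix.
        return f"https://{account}-secondary.blob.core.windows.net"
    raise ValueError(f"{app_region} is neither the primary nor the secondary region")


def write_endpoint(account: str) -> str:
    """Uploads and updates always go to the primary endpoint ("update once")."""
    return f"https://{account}.blob.core.windows.net"


print(read_endpoint("contosofiles", "westus", "eastus", "westus"))
# https://contosofiles-secondary.blob.core.windows.net
```

Because replication is asynchronous, the West US instance can briefly serve an older copy of a file right after an update; for large static files that trade-off is usually acceptable.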

Incorrect Options:

A. Azure File Sync:

Azure File Sync synchronizes Azure file shares with on-premises or IaaS Windows Server machines. It targets file shares rather than Blob storage, requires managing sync servers, and adds cost and complexity compared with a native storage redundancy option.

B. Distributed File System (DFS):

This is an on-premises Windows Server technology for namespace aggregation and replication. It is not a native Azure PaaS solution, would require IaaS VMs to host, and incurs significant administrative overhead and cost, failing the "minimize costs" goal.

D. Geo-redundant storage (GRS):

GRS replicates data to a secondary region but does not allow read access to it. The secondary is for disaster recovery only. This would force the West US web app to read from the East US primary endpoint, increasing latency and potentially egress costs, thus failing the "users receive files from the same region" requirement.

Reference (Conceptual Alignment with AZ-305 Skills Outline):

This question tests the "Design a solution for backup and recovery" and "Design for high availability" objectives. Specifically, it evaluates knowledge of Azure Storage redundancy options (RA-GRS vs. GRS) and selecting the correct service for a globally distributed read-heavy workload with static content.

You need to recommend a solution for the App1 maintenance task. The solution must minimize costs.

What should you include in the recommendation?

A. an Azure logic app

B. an Azure function

C. an Azure virtual machine

D. an App Service WebJob

D. an App Service WebJob

Explanation:

For an automated, scheduled maintenance task (like database cleanup, sending reports, processing queues), the most cost-effective solution is typically a serverless or event-driven compute option. You only pay for execution time, avoiding the 24/7 compute cost of a VM. The choice often depends on the runtime and specific integration needs of "App1."

Analysis of Options (Assuming Cost is the Primary Driver):

B. an Azure function and D. an App Service WebJob are strong candidates for minimizing cost.

Azure Functions:

Functions on the serverless Consumption plan are very cost-effective for short-running, scheduled tasks (using a Timer trigger). You pay only for execution time and memory consumed, and a monthly free grant covers many small workloads.

App Service WebJob:

If App1 is already running in an App Service plan, adding a WebJob has near-zero incremental cost, because it runs on the same, already-paid-for compute instances. That makes it a very low-cost choice in that context.

A. an Azure logic app could also be cost-effective for workflow-based tasks, billed per execution.

C. an Azure virtual machine is typically the highest-cost option for a simple maintenance task. You pay for the VM continuously (compute, OS license, storage) even when the task is not running, which directly contradicts "minimize costs."
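The "pay only for execution" point for Azure Functions can be made concrete with a GB-seconds estimate. This is a sketch under assumptions: it uses the Consumption plan billing rules and free-grant sizes (400,000 GB-s and 1 million executions per month) as published at the time of writing, which may change, and the workload figures are hypothetical. It does not compute prices.

```python
import math


def consumption_gb_seconds(executions: int, avg_duration_ms: float, avg_memory_mb: float) -> float:
    """Estimate GB-seconds for a Functions Consumption plan workload.

    Assumed billing rules: memory rounded up to the nearest 128 MB,
    and at least 100 ms and 128 MB charged per execution.
    """
    memory_mb = max(128, math.ceil(avg_memory_mb / 128) * 128)
    duration_s = max(100.0, avg_duration_ms) / 1000
    return executions * duration_s * memory_mb / 1024


def within_free_grant(gb_seconds: float, executions: int,
                      free_gb_seconds: float = 400_000, free_executions: int = 1_000_000) -> bool:
    # Free-grant sizes are assumptions taken from pricing at the time of writing.
    return gb_seconds <= free_gb_seconds and executions <= free_executions


# Hypothetical task: runs once a day (30 runs/month), 30 s each, about 200 MB of memory.
usage = consumption_gb_seconds(executions=30, avg_duration_ms=30_000, avg_memory_mb=200)
print(usage, within_free_grant(usage, 30))  # 225.0 True
```

A VM sized for the same task is billed for every hour it runs, whether or not the task is executing.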

Conclusion:

Given the standard Azure cost optimization principles tested in AZ-305, C. an Azure virtual machine is almost certainly incorrect for this scenario. The cost-minimizing recommendation is one of the other options, most likely D. an App Service WebJob or B. an Azure function, depending on context about App1 that is not provided in this snippet.

A VM would only make sense if that missing context required it, for example a task with a very long runtime (more than 10 minutes), software available only on a custom VM image, or a VM the business already pays for to host other workloads. Without such context, a VM is the worst choice for cost minimization.

You have an Azure subscription. The subscription contains a tiered app named App1 that is distributed across multiple containers hosted in Azure Container Instances.

You need to deploy an Azure Monitor monitoring solution for App1. The solution must meet the following requirements:

• Support using synthetic transaction monitoring to monitor traffic between the App1 components.

• Minimize development effort.

What should you include in the solution?

A. Network Insights

B. Application Insights

C. Container insights

D. Log Analytics Workspace Insights

Explanation:

The app is containerized (ACI), but the primary requirement is to monitor traffic between application components using synthetic transactions (availability tests/URL pings). This is a classic application performance monitoring (APM) task focused on the application layer and its transactions, not the underlying container or network infrastructure.

Correct Option:

B. Application Insights

Synthetic Transaction Monitoring:

Application Insights has a built-in feature called availability tests (standard tests, plus the older URL ping and multi-step web tests). You can point these tests at the HTTP endpoints that App1's components expose to verify their availability and response times.

Minimize Development Effort:

Enabling Application Insights typically requires minimal code change (adding the SDK and a connection string), and some platforms support auto-instrumentation with no code changes. Availability tests are configured in the Azure portal, not in code.

Holistic App Monitoring:

While Container Insights would monitor the ACI infrastructure (CPU/memory), Application Insights directly monitors the application's behavior, performance, and dependencies, which aligns with monitoring "traffic between components."
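Conceptually, each synthetic transaction is a scripted request with a pass/fail verdict. The sketch below models one probe: request an endpoint, time it, and compare the status code. It is not the Application Insights API, which runs tests from Azure test locations and stores results as availability telemetry. The `fetch` parameter is a hypothetical injected HTTP function (a real one could wrap `urllib.request.urlopen(url, timeout=timeout_s)`), and the URL in the usage note is made up.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ProbeResult:
    url: str
    success: bool
    status: Optional[int]
    duration_ms: float


def run_probe(url: str, fetch: Callable[[str, float], int],
              expected_status: int = 200, timeout_s: float = 30.0) -> ProbeResult:
    """One synthetic check: request the endpoint, time it, compare the status code."""
    start = time.perf_counter()
    try:
        status: Optional[int] = fetch(url, timeout_s)
    except Exception:
        status = None  # connection failure or timeout counts as a failed test
    duration_ms = (time.perf_counter() - start) * 1000
    success = status == expected_status and duration_ms <= timeout_s * 1000
    return ProbeResult(url, success, status, duration_ms)
```

For example, `run_probe("http://app1-api.example/health", lambda url, timeout: 200)` returns a successful result, while a `fetch` that raises or returns 503 yields a failed one.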

Incorrect Options:

A. Network Insights:

This is a monitoring workbook for Azure Networking resources (VNets, VPN Gateways, Application Gateways). It provides network-level health and metrics, not application-layer synthetic transactions or monitoring of traffic between application components in containers.

C. Container Insights:

This is the correct tool for monitoring the performance of the container hosts and workloads (e.g., ACI container groups). It collects metrics for CPU, memory, and logs. However, it does not have a native feature for creating synthetic transactions to monitor traffic flow between application tiers/components.

D. Log Analytics Workspace Insights:

This insight monitors the Log Analytics workspaces themselves (usage, ingestion, health, and query performance). Other insights, such as Container insights and VM insights, store their data in a workspace, but workspace insights offer no application-layer synthetic transaction testing, so this option is a distractor.

Reference (Conceptual Alignment with AZ-305 Skills Outline):

This question tests the "Design a solution for logging and monitoring" objective. Specifically, it evaluates the selection of Application Insights for monitoring application availability, performance, and dependencies—including via synthetic transactions—which is a core capability distinct from infrastructure monitoring tools.

You have 100 servers that run Windows Server 2012 R2 and host Microsoft SQL Server 2012 R2 instances. The instances host databases that have the following characteristics:

The largest database is currently 3 TB. None of the databases will ever exceed 4 TB.

Stored procedures are implemented by using CLR.

You plan to move all the data from SQL Server to Azure. You need to recommend an Azure service to host the databases. The solution must meet the following requirements:

Whenever possible, minimize management overhead for the migrated databases.

Minimize the number of database changes required to facilitate the migration.

Ensure that users can authenticate by using their Active Directory credentials.

What should you include in the recommendation?

A. Azure SQL Database single databases

B. Azure SQL Database Managed Instance

C. Azure SQL Database elastic pools

D. SQL Server 2016 on Azure virtual machines

Explanation:

The requirements strongly favor a managed PaaS service ("minimize management overhead"). However, the CLR stored procedures rule out Azure SQL Database single databases and elastic pools, which do not support SQL CLR. That leaves Managed Instance as the lift-and-shift-friendly PaaS option that supports CLR and Active Directory-based authentication without requiring a VM.

Correct Option:

B. Azure SQL Database Managed Instance

Minimizes Management Overhead:

As a fully managed PaaS service, Microsoft handles patching, backups, and high availability, meeting the primary requirement.

Minimizes Database Changes:

Managed Instance is designed for near-100% compatibility with on-premises SQL Server. It natively supports SQL CLR and Azure AD authentication, so users synchronized from Active Directory can sign in with their existing credentials, and the stored procedures and authentication method require minimal to no changes.

Fits Size Requirement:

Supports databases up to 16 TB, well above the 4 TB limit.

Supports All Requirements:

It is the only PaaS option that satisfies all three constraints simultaneously (managed, compatible with CLR, supports AD auth).

Incorrect Options:

A. Azure SQL Database single databases & C. Azure SQL Database elastic pools:

These are the most managed PaaS options but have key limitations for this scenario. They do not support SQL CLR and have limited support for certain legacy SQL Server features. While they support Azure AD authentication, the CLR requirement makes them incompatible without significant application changes.

D. SQL Server 2016 on Azure virtual machines:

This meets the technical compatibility requirements (supports CLR and Windows AD auth via domain join) and would minimize database changes. However, it fails the primary requirement to "minimize management overhead," as you are responsible for managing the entire VM, SQL Server installation, patching, backups, and HA/DR—significantly increasing administrative effort compared to a PaaS service.

Reference (Conceptual Alignment with AZ-305 Skills Outline):

This question tests the "Design data platform solutions" and "Design a solution for migration" objectives. It specifically evaluates the selection criteria for Azure SQL deployment options, emphasizing that Azure SQL Database Managed Instance is the strategic PaaS target for lift-and-shift migrations of on-premises SQL Server instances requiring near-complete compatibility, including support for CLR and Windows Authentication.

You have 100 devices that write performance data to Azure Blob Storage.

You plan to store and analyze the performance data in an Azure SQL database.

You need to recommend a solution to continually copy the performance data to the Azure SQL database.

What should you include in the recommendation?

A. Azure Database Migration Service

B. Azure Data Box

C. Data Migration Assistant (DMA)

D. Azure Data Factory

Explanation:

The key requirement is to continually copy data from Azure Blob Storage to Azure SQL Database. This is a recurring, scheduled, or triggered data movement operation, not a one-time migration. The solution needs to be an orchestration and data integration service capable of creating pipelines with a defined schedule or event-based trigger.

Correct Option:

D. Azure Data Factory

Purpose-Built for Continual Copy:

Azure Data Factory (ADF) is Azure's primary cloud-based data integration service. You create a pipeline with a Copy Activity that sources data from Azure Blob Storage and sinks it to Azure SQL Database.

Orchestration & Scheduling:

You can schedule this pipeline to run hourly, daily, or on a custom cadence, or trigger it based on an event (e.g., new file arrival). This perfectly fulfills the "continually copy" requirement.

Managed Service:

It is a fully managed, serverless service, requiring no infrastructure to manage.
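A scheduled pipeline that "continually copies" usually copies only what changed since its last run. The sketch below models that watermark pattern: select blobs modified after the stored watermark, then advance the watermark. It illustrates the pattern and is not ADF's implementation; the blob names, timestamps, and helper names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class BlobInfo:
    name: str
    last_modified: datetime


def plan_incremental_copy(blobs, watermark):
    """Return blobs changed since the watermark (oldest first) and the next watermark."""
    changed = sorted((b for b in blobs if b.last_modified > watermark),
                     key=lambda b: b.last_modified)
    next_watermark = changed[-1].last_modified if changed else watermark
    return changed, next_watermark


def ts(hour):
    return datetime(2024, 1, 1, hour, tzinfo=timezone.utc)


blobs = [BlobInfo("device01/perf-0800.csv", ts(8)),
         BlobInfo("device02/perf-0900.csv", ts(9)),
         BlobInfo("device01/perf-1000.csv", ts(10))]
to_copy, watermark = plan_incremental_copy(blobs, watermark=ts(8))
print([b.name for b in to_copy], watermark.hour)
# ['device02/perf-0900.csv', 'device01/perf-1000.csv'] 10
```

Persisting the watermark between runs (for example, in a control table) is what turns a one-off copy into a repeatable pipeline; an event-based trigger avoids the polling interval altogether.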

Incorrect Options:

A. Azure Database Migration Service (DMS):

This service is designed for one-time, lift-and-shift migration of entire databases from source (like SQL Server, MySQL) to target Azure database services. It is not intended for ongoing, incremental copying of data from blob storage to a SQL database.

B. Azure Data Box:

This is a physical appliance for offline, one-time bulk data transfer into Azure when network upload is impractical. It is completely unsuitable for a continual, automated copy process of performance data generated by 100 devices.

C. Data Migration Assistant (DMA):

This is primarily an assessment tool that analyzes SQL Server databases for compatibility issues before migration to Azure SQL. It can also perform one-time schema and data migrations from SQL Server sources, but it cannot read data from Blob Storage or run a continual copy.

Reference (Conceptual Alignment with AZ-305 Skills Outline):

This question tests the "Design a data integration solution" objective. It evaluates the ability to select Azure Data Factory as the orchestration service for building repeatable, scheduled data movement pipelines between Azure data stores—a core pattern for analytics and data warehousing scenarios.

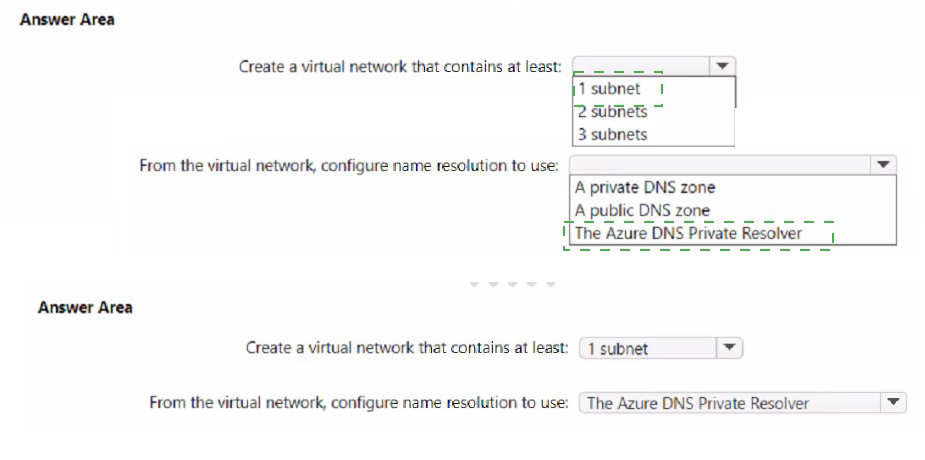

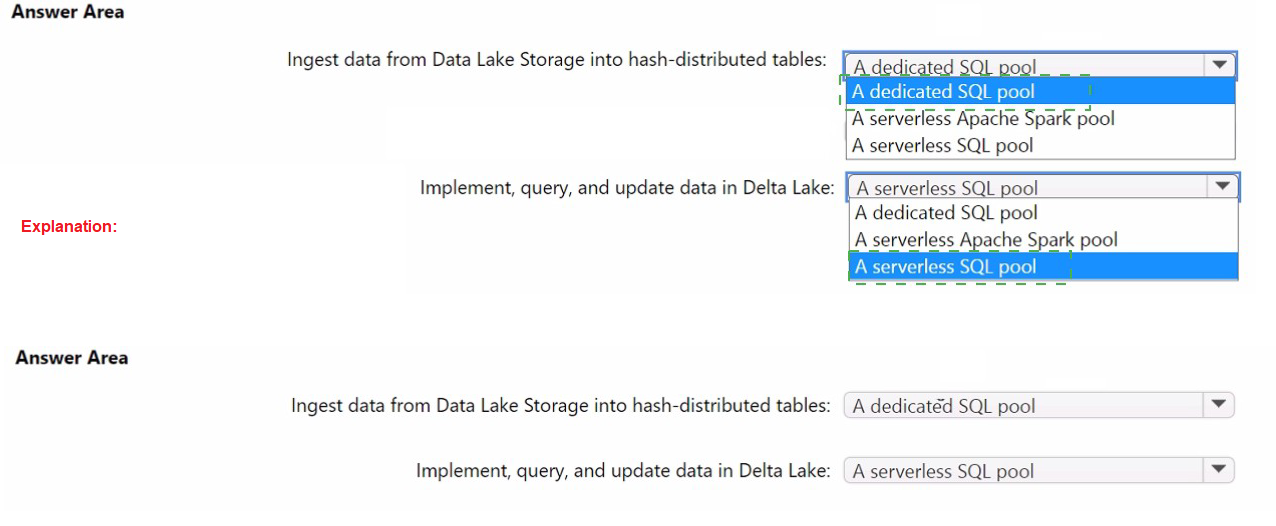

You are designing a data analytics solution that will use Azure Synapse and Azure Data Lake Storage Gen2. You need to recommend Azure Synapse pools to meet the following requirements:

• Ingest data from Data Lake Storage into hash-distributed tables.

• Implement, query, and update data in Delta Lake.

What should you recommend for each requirement? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

The two requirements map to distinct Synapse pools based on their optimized workloads.

Hash-distributed tables are a core feature of dedicated SQL pools (formerly SQL DW), designed for large-scale, structured data warehousing with high-performance queries using Massively Parallel Processing (MPP).

Delta Lake operations (CREATE, UPDATE, MERGE) require the transactional engine that writes to the Delta transaction log. In Synapse, this is natively provided by Spark pools, as Delta Lake is inherently part of the Apache Spark ecosystem.

Correct Options:

Ingest data from Data Lake Storage into hash-distributed tables:

A dedicated SQL pool

Hash-distributed tables are a defining feature of dedicated SQL pools (the data warehouse). The CREATE TABLE ... WITH (DISTRIBUTION = HASH(column_name)) syntax and the PolyBase or COPY INTO commands used to ingest from Data Lake Storage run within this MPP engine.
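Hash distribution means every row's distribution column value is hashed to pick one of the pool's 60 distributions, so rows with the same key always land together. The sketch below models that idea with CRC-32 as a stand-in hash; the engine's real hash function is internal, and the CustomerId column and rows are hypothetical.

```python
import zlib
from collections import defaultdict

DISTRIBUTIONS = 60  # a dedicated SQL pool splits every table into 60 distributions


def distribution_for(key: str, distributions: int = DISTRIBUTIONS) -> int:
    # Stand-in hash (CRC-32); the engine's real hash function is internal.
    return zlib.crc32(key.encode("utf-8")) % distributions


def distribute(rows, key_column):
    """Group rows by the distribution their hash key maps to."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[distribution_for(str(row[key_column]))].append(row)
    return buckets


rows = [{"CustomerId": "C001", "Amount": 10},
        {"CustomerId": "C002", "Amount": 25},
        {"CustomerId": "C001", "Amount": 7}]
buckets = distribute(rows, "CustomerId")
# Both C001 rows share one distribution, so joins and aggregates on CustomerId stay local.
```

Choosing a distribution column with many distinct, evenly used values keeps the 60 distributions balanced; a skewed column concentrates work on a few of them.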

Implement, query, and update data in Delta Lake:

A serverless Apache Spark pool

While you can query Delta Lake tables with both serverless SQL pools and Spark pools, the requirement to implement and update (i.e., perform DML operations like INSERT, UPDATE, MERGE) requires the Apache Spark engine. Spark pools provide the native transactional support for writing to Delta Lake tables.
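The "update" part of the requirement is typically a MERGE (upsert). In a Spark pool this is usually written with the delta-spark API, for example `DeltaTable.forPath(spark, path).alias("t").merge(updates.alias("s"), "t.id = s.id").whenMatchedUpdateAll().whenNotMatchedInsertAll().execute()`. The pure-Python sketch below models only the semantics of that call on an in-memory table keyed by id; it is not how Delta Lake stores data, and the column names are hypothetical.

```python
def merge_upsert(target: dict, source_rows: list, key: str) -> dict:
    """Upsert source rows into a keyed table and return the new table version.

    Mirrors MERGE ... WHEN MATCHED THEN UPDATE SET * WHEN NOT MATCHED THEN INSERT *.
    """
    keys = [row[key] for row in source_rows]
    if len(keys) != len(set(keys)):
        # Delta Lake fails a MERGE when several source rows match one target row;
        # this sketch simply rejects any duplicate source key.
        raise ValueError("duplicate keys in source")
    new_version = {k: dict(v) for k, v in target.items()}  # earlier version stays intact
    for row in source_rows:
        if row[key] in new_version:
            new_version[row[key]].update(row)   # WHEN MATCHED: update
        else:
            new_version[row[key]] = dict(row)   # WHEN NOT MATCHED: insert
    return new_version


table_v1 = {1: {"id": 1, "temp": 20}, 2: {"id": 2, "temp": 21}}
table_v2 = merge_upsert(table_v1, [{"id": 2, "temp": 25}, {"id": 3, "temp": 19}], "id")
```

Returning a new version instead of mutating the input loosely mirrors how each Delta Lake MERGE commits a new table version to the transaction log.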

Why the Other Options are Incorrect:

For Ingestion into Hash-Distributed Tables:

Serverless SQL pool / Spark pool:

These cannot create or manage permanent hash-distributed tables. Serverless SQL is for ad-hoc querying; Spark pools use Spark DataFrames/RDDs, not Synapse hash distribution.

For Implementing & Updating Delta Lake:

Dedicated SQL pool:

A dedicated SQL pool cannot perform UPDATE or MERGE operations on Delta Lake files and is not the engine for Delta Lake DML; it is designed for its own relational tables (hash-distributed, round-robin, or replicated).

Serverless SQL pool:

It is excellent for querying Delta Lake tables (T-SQL over Delta). However, it is read-only for Delta Lake; you cannot perform INSERT, UPDATE, or MERGE operations on Delta tables using serverless SQL pools.

Reference (Conceptual Alignment with AZ-305 Skills Outline):

This tests the "Design a data analytics solution" objective, specifically knowledge of the different Azure Synapse Analytics compute pools (Dedicated SQL, Serverless SQL, Spark) and their appropriate use cases for data transformation, warehousing, and lakehouse patterns.
